AI and Human Resources: How CIOs Ensure Auditability

It is 2026. The novelty of generative AI has faded, replaced by the operational reality of “Agentic AI.” In your enterprise, AI and Human Resources agents are no longer just drafting emails; they are executing workflows across back-office operations. They are provisioning software licenses, updating payroll tax brackets, and validating time-off requests in AI-driven HR systems against complex local labor laws.

This shift from “passive assistance” to “autonomous action” brings a new, critical challenge for the technology office: Auditability.

When a human HR manager denies a leave request, there is a paper trail: emails, notes in the HRIS, and a defined policy reference. But when an AI agent makes that same decision, where is the trail? If an employee files a complaint alleging bias or wrongful denial, can you reconstruct exactly why the AI made that choice? Or will you be forced to point at a “black box” and shrug?

For the CIO, AI and Human Resources is no longer just an efficiency conversation; it is a governance conversation. Implementing “Auditability by Design” is the only way to scale autonomous systems without scaling legal and operational risk. Here is how to architect transparency into your intelligent back office.

The Black Box Challenge in AI and HR Compliance

The fundamental risk in modern AI and HR deployments is the “probabilistic” nature of the technology. Unlike traditional deterministic software (if X, then Y), Large Language Models (LLMs) reason through probability. This means they can occasionally arrive at the correct answer for the wrong reasons or the wrong answer with high confidence.

In a high-stakes enterprise environment, “it usually works” is not an acceptable standard for compliance.

Imagine an autonomous agent that handles expense reimbursements. It rejects a travel expense from a sales director. The director claims the rejection is an error. In a traditional system, you would check the logic code: IF amount > $500 AND receipt_missing = TRUE, THEN reject.

In an AI and Human Resources system, the logic isn’t hard-coded; it is inferred. The agent read the policy, analyzed the receipt image, and made a judgment call. To audit this, you cannot just look at the code. You need a forensic record of the agent’s “chain of thought.” You need to know:

- What policy document did it retrieve? (The Context)

- How did it interpret that policy? (The Reasoning)

- What specific data point in the receipt triggered the rejection? (The Evidence)

Without this granular logging, AI and Human Resources becomes an operational minefield. You are effectively delegating decision rights to a system you cannot supervise.

Implementing Immutable Logging for AI in Human Resources

To solve this, we must move beyond standard server logs. We need a new architecture for “Decision Logging.” This is the core of Artificial Intelligence in HR governance.

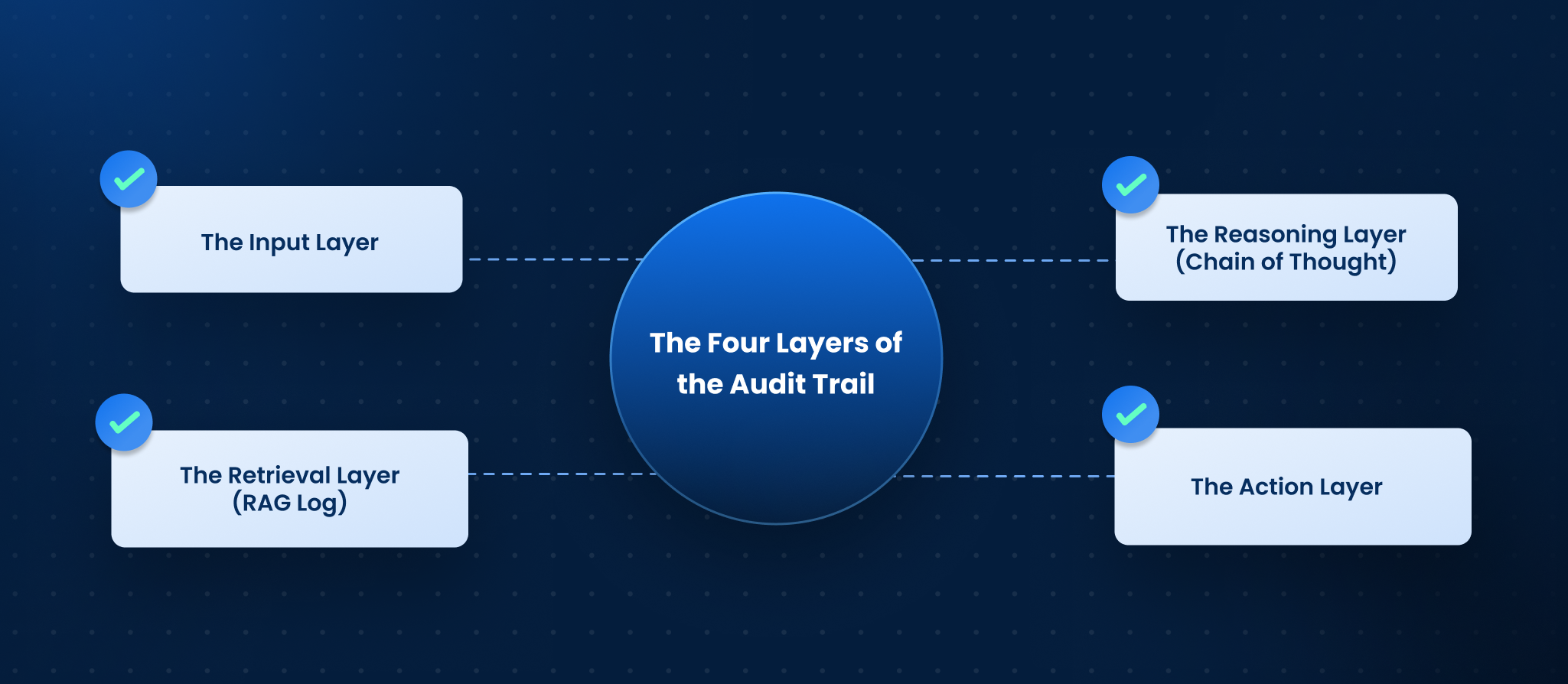

1. The Four Layers of the Audit Trail

A robust audit system for AI and Human Resources must capture four distinct layers of every interaction:

- The Input Layer: The raw prompt from the user, including metadata like location, role, and timestamp.

- The Retrieval Layer (RAG Log): A specific record of which knowledge base chunks were fed to the model. If the AI cited the “2024 Remote Work Policy” instead of the “2026 Hybrid Work Policy,” this log will catch it immediately.

- The Reasoning Layer (Chain of Thought): This is the most critical new component. You must configure your AI and Human Resources agents to output their internal reasoning steps before generating the final response. This “monologue” explains the logic: “I see the user is in California. California law requires specific overtime calculation. I will apply Rule B.”

- The Action Layer: The API call made to the backend system (e.g., Workday or SAP). This proves what the agent actually did (e.g., “Updated Field 45 to ‘Denied’”).
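To make the four layers concrete, here is a minimal sketch of what a single audit entry could look like. The `DecisionRecord` class and all of its field names are hypothetical, chosen only to mirror the layers listed above; a real schema would follow your HRIS and logging conventions.

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionRecord:
    """One audit entry covering the four layers (illustrative schema only)."""
    # Input layer: the raw prompt plus user metadata
    prompt: str
    user_role: str
    user_location: str
    # Retrieval layer: identifiers of the knowledge base chunks given to the model
    retrieved_chunks: tuple[str, ...]
    # Reasoning layer: the agent's stated reasoning steps, in order
    reasoning_steps: tuple[str, ...]
    # Action layer: the backend call the agent actually made
    action: dict
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        # Sorted keys keep the serialized form stable across runs
        return json.dumps(asdict(self), sort_keys=True)


# Example values are invented for illustration
record = DecisionRecord(
    prompt="How is my overtime calculated?",
    user_role="employee",
    user_location="California",
    retrieved_chunks=("2026 Hybrid Work Policy",),
    reasoning_steps=(
        "I see the user is in California.",
        "California law requires specific overtime calculation.",
        "I will apply Rule B.",
    ),
    action={"system": "hris", "field": "Field 45", "value": "Denied"},
)
print(record.to_json())
```

Keeping all four layers in one record means an auditor can replay a decision from a single entry rather than joining several log streams.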

2. Immutable Storage and Chain of Custody

For AI for HR to withstand a compliance audit or legal discovery, the logs must be immutable. You cannot store these decision logs in a rewriteable database where an admin could theoretically alter the “Reasoning Layer” after the fact.

Best practice in 2026 involves writing these decision traces to a WORM (Write Once, Read Many) storage tier, so that entries cannot be modified or deleted once written. Pairing that with a hash or digital signature on each entry preserves the chain of custody. When your legal team asks why a specific termination workflow was triggered, you can produce a cryptographically verifiable record of the AI’s decision path.
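WORM storage is an infrastructure feature, but the "cryptographically verifiable" part can be illustrated in a few lines. The sketch below uses a hash chain, one common way to make tampering detectable: each entry's hash covers the previous entry's hash, so altering any earlier record breaks every link after it. The function names and entry layout are assumptions for illustration, not a specific product's format.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, payload: dict) -> str:
    # Hash the previous entry's hash together with this entry's content
    body = json.dumps(payload, sort_keys=True)
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_entry(chain: list[dict], payload: dict) -> dict:
    """Append a decision log entry linked to the one before it."""
    prev_hash = chain[-1]["entry_hash"] if chain else GENESIS_HASH
    entry = {
        "prev_hash": prev_hash,
        "payload": payload,
        "entry_hash": _digest(prev_hash, payload),
    }
    chain.append(entry)
    return entry


def verify_chain(chain: list[dict]) -> bool:
    """Recompute every hash; any edited entry makes verification fail."""
    prev_hash = GENESIS_HASH
    for entry in chain:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["entry_hash"] != _digest(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["entry_hash"]
    return True


log: list[dict] = []
append_entry(log, {"decision": "leave_denied", "reasoning": "Tenure < 1 year"})
append_entry(log, {"decision": "leave_approved", "reasoning": "Policy 4.2 met"})
print(verify_chain(log))
```

A hash chain only detects tampering; it does not prevent it. That is why it complements, rather than replaces, a write-once storage tier.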

From Black Box to Glass Box: AI and HR Transparency

The goal of Auditability by Design is to turn the “Black Box” into a “Glass Box.” This transparency is essential not just for legal defense, but for operational trust.

Visualizing the Decision Matrix

Raw JSON logs are useful for engineers, but they are useless for HR Business Partners. To truly operationalize AI and Human Resources transparency, you need a “Human-in-the-Loop” dashboard.

This dashboard should visualize the decision. If an employee asks, “Why was my paternity leave calculation 12 days instead of 15?”, the Tier 2 support agent should be able to pull up the interaction and see a highlighted view:

- Input: “Apply for paternity leave.”

- Policy Found: “US Employee Handbook, Section 4.2.”

- Variable Identified: “Tenure < 1 Year.”

- Logic Applied: “Employees with less than 1 year tenure are capped at 12 days.”

- Outcome: 12 days approved.

This transforms AI and Human Resources from a source of confusion into a source of clarity. It allows human operators to validate or correct the machine’s logic instantly.
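As a rough sketch of the dashboard's highlighted view, the function below turns a stored decision record into the five-line summary shown above. The record keys (`prompt`, `retrieved_chunks`, `variable`, `logic`, `outcome`) are hypothetical; they assume the agent writes these values as structured fields rather than free text.

```python
def render_decision_summary(record: dict) -> str:
    """Format a decision record as a plain-language summary for support staff."""
    lines = [
        f"Input: {record['prompt']}",
        f"Policy Found: {', '.join(record['retrieved_chunks'])}",
        f"Variable Identified: {record['variable']}",
        f"Logic Applied: {record['logic']}",
        f"Outcome: {record['outcome']}",
    ]
    return "\n".join(lines)


# Sample record mirroring the paternity leave example
sample = {
    "prompt": "Apply for paternity leave.",
    "retrieved_chunks": ["US Employee Handbook, Section 4.2"],
    "variable": "Tenure < 1 Year",
    "logic": "Employees with less than 1 year tenure are capped at 12 days.",
    "outcome": "12 days approved",
}
summary = render_decision_summary(sample)
print(summary)
```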

Preventing Hallucinations in AI and HR Decision Logs

One of the distinct risks of AI and Human Resources is hallucination, where the model invents a policy that doesn’t exist.

An auditable system catches this. By cross-referencing the “Reasoning Layer” with the “Retrieval Layer,” you can automatically flag instances where the AI claims to be enforcing a policy that isn’t present in the retrieved documents. Automated auditors can run nightly batch jobs, scanning yesterday’s thousands of ticket resolutions to identify any AI HR decisions that lack a solid citation link.
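The cross-referencing step could look something like the sketch below. It assumes, hypothetically, that the Reasoning Layer emits the policies it relied on as a structured `cited_policies` list and that the Retrieval Layer stores `retrieved_chunks` using the same identifiers; matching free-text reasoning against documents would need a more involved approach.

```python
def find_uncited_decisions(records: list[dict]) -> list[str]:
    """Return IDs of decisions whose citations are missing or not backed by retrieval."""
    flagged = []
    for record in records:
        retrieved = set(record["retrieved_chunks"])
        cited = set(record["cited_policies"])
        # Flag if nothing was cited, or if any cited policy was never retrieved
        if not cited or not cited <= retrieved:
            flagged.append(record["decision_id"])
    return flagged


# Invented sample data for a nightly batch run
yesterday = [
    {
        "decision_id": "D-1",
        "retrieved_chunks": ["US Employee Handbook, Section 4.2"],
        "cited_policies": ["US Employee Handbook, Section 4.2"],
    },
    {
        "decision_id": "D-2",
        "retrieved_chunks": ["2026 Hybrid Work Policy"],
        "cited_policies": ["Sabbatical Policy"],  # never retrieved
    },
    {
        "decision_id": "D-3",
        "retrieved_chunks": ["2026 Hybrid Work Policy"],
        "cited_policies": [],  # no citation at all
    },
]
print(find_uncited_decisions(yesterday))
```

Flagged decisions are candidates for human review, not proof of hallucination; a citation can also be missing because of a logging gap.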

Operationalizing Auditability in AI and Human Resources

For the technology leader, the question is: how do we implement this without crushing system performance?

Defining the “Explainability Threshold”

Not every interaction requires a forensic audit. If an agent answers “What is the Wi-Fi password?”, you do not need a deep reasoning log.

You must define an “Explainability Threshold” for your AI and Human Resources architecture.

- Low Risk (No Audit): FAQs, navigational help, general inquiries.

- Medium Risk (Standard Log): Password resets, software provisioning, document requests.

- High Risk (Deep Audit): Compensation changes, leave approvals, benefits enrollment, access control changes.

For High Risk interactions, the system should force a “Chain of Thought” generation step, even if it adds 2 seconds of latency to the response. In AI and Human Resources, accuracy and auditability always trump speed.
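One way to express the threshold in code is a simple mapping from request intent to audit tier. The intent labels below are hypothetical and would come from whatever intent classification your platform already performs. Defaulting unknown intents to the strictest tier is a design assumption, not something the tiers above mandate.

```python
from enum import Enum


class AuditLevel(Enum):
    NONE = "no_audit"
    STANDARD = "standard_log"
    DEEP = "deep_audit"


# Illustrative intent-to-tier mapping based on the three risk levels
INTENT_AUDIT_LEVELS = {
    "faq": AuditLevel.NONE,
    "navigation_help": AuditLevel.NONE,
    "password_reset": AuditLevel.STANDARD,
    "software_provisioning": AuditLevel.STANDARD,
    "document_request": AuditLevel.STANDARD,
    "compensation_change": AuditLevel.DEEP,
    "leave_approval": AuditLevel.DEEP,
    "benefits_enrollment": AuditLevel.DEEP,
    "access_control_change": AuditLevel.DEEP,
}


def audit_level_for(intent: str) -> AuditLevel:
    # Unrecognized intents fall back to the strictest tier (assumed policy)
    return INTENT_AUDIT_LEVELS.get(intent, AuditLevel.DEEP)


def requires_chain_of_thought(intent: str) -> bool:
    """Only Deep Audit interactions force the extra reasoning step."""
    return audit_level_for(intent) is AuditLevel.DEEP


print(audit_level_for("faq").value)
print(requires_chain_of_thought("leave_approval"))
```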

Integrating AI and Human Resources Logs with Enterprise SIEM

Your AI and Human Resources logs shouldn’t live on an island. They need to feed into your broader enterprise security posture. Integrating these decision logs with platforms like Splunk or Datadog allows your InfoSec team to detect patterns.

For example, if you see a sudden spike in the AI granting “Admin Access” across multiple HR accounts, your SIEM can trigger an alert. Is it a policy change? Or is it a prompt injection attack manipulating the AI and HR agent? You can only know if you are logging the decision logic, not just the outcome.
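In practice this detection would be written as a rule or saved search inside the SIEM itself. The sketch below only illustrates the underlying logic in plain Python: count a given action per hour and flag hours that exceed a multiple of the normal rate. The event fields, the action name, and the baseline and multiplier values are all assumptions for illustration.

```python
from collections import Counter


def detect_grant_spikes(
    events: list[dict],
    action: str = "grant_admin_access",
    baseline_per_hour: float = 2.0,
    multiplier: float = 5.0,
) -> list[str]:
    """Return the hours in which `action` occurred far more often than baseline."""
    counts = Counter(e["hour"] for e in events if e["action"] == action)
    threshold = baseline_per_hour * multiplier
    return sorted(hour for hour, n in counts.items() if n > threshold)


# Invented sample: one normal hour, one hour with 12 admin grants
events = (
    [{"hour": "2026-03-01T13", "action": "grant_admin_access"}]
    + [{"hour": "2026-03-01T14", "action": "grant_admin_access"} for _ in range(12)]
    + [{"hour": "2026-03-01T14", "action": "approve_leave"}]
)
spikes = detect_grant_spikes(events)
print(spikes)
```

Once an hour is flagged, the stored reasoning for those decisions is what lets an analyst tell a legitimate policy change apart from a prompt injection.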

Leena AI: Built for the Auditable Enterprise

At Leena AI, we understand that for the Fortune 500, “cool” technology is irrelevant if it isn’t “safe” technology. That is why we built our AI and Human Resources platform with a Traceability First architecture.

We don’t just give you an AI agent; we give you the flight recorder.

- The Explainability Engine: Every action taken by a Leena AI agent, whether it’s resetting a password or processing a leave request, is accompanied by a natural language explanation of why it took that action.

- Citation Enforcement: Our proprietary WorkLM model is architected to refuse to answer if it cannot cite a specific source from your uploaded knowledge base. It links every answer to a paragraph in your policy docs.

- The “Audit Mode” Dashboard: Your admins can replay any interaction to see exactly what the AI saw. You see the prompt, the retrieved context, the reasoning steps, and the final output side-by-side.

We believe that AI and Human Resources requires a partnership between human oversight and machine speed. Our platform is designed to keep you in control, ensuring that every autonomous decision is defensible, transparent, and aligned with your governance standards.

Frequently Asked Questions

Does enabling detailed audit logs slow down the AI and Human Resources system?

For most interactions, the difference for the user is negligible. The heavy lifting of storing and indexing these logs happens asynchronously in the background, so the employee experience remains snappy. The exception is High Risk interactions, where forcing a “Chain of Thought” step before the response can add a second or two of latency, a trade-off worth accepting for decisions that must be defensible.

How long should we retain audit logs for AI and Human Resources?

This depends on your industry and local regulations. However, a standard rule of thumb for AI and HR decision logs is to match your document retention policy for human HR decisions, typically 3 to 7 years. Since these logs are text-based, the storage cost is relatively low compared to the risk mitigation value.

Can audit logs contain sensitive employee data?

Yes, and this is by design. To reconstruct a decision about a medical leave request, the log must contain the context of the request. Therefore, your AI and Human Resources audit logs must be encrypted at rest and protected by strict Role-Based Access Control (RBAC), accessible only to authorized legal and HR compliance officers.

What happens if the audit log shows the AI made a mistake?

This is the system working as intended. The log allows you to perform a “root cause analysis.” Was the mistake due to a poorly phrased policy document (Retrieval Layer), a misunderstanding of the prompt (Input Layer), or a logic failure (Reasoning Layer)? Once identified, you can fix the underlying issue to prevent recurrence.

Can we audit the AI model itself?

You generally cannot audit the “weights” of a neural network to understand a specific decision. That is why “Decision Logging” (recording the input, retrieval, and output logic) is the industry standard for Artificial Intelligence in HR. You audit the behavior and the process, not the mathematics.

How does this impact the cost of running AI for HR?

Storage is cheap; lawsuits are expensive. While capturing detailed logs adds a marginal cost to your cloud storage bill, it drastically reduces the “Total Cost of Risk.” It transforms undefined liability into managed governance.

Can Leena AI integrate with our existing compliance tools?

Yes. Leena AI is designed to fit into the enterprise ecosystem. Our logs can be exported or streamed to your existing governance, risk, and compliance (GRC) platforms, ensuring that your AI and Human Resources operations are visible within your single pane of glass for enterprise risk.