AI for Companies: Why Liability Gating Matters

It is 2026. Your new autonomous finance agent, powered by the latest high-reasoning model, identifies a vendor invoice that is 45 days overdue. It matches the invoice number to a purchase order, verifies the amount is within the “Auto-Approve” limit of $50,000, and initiates a wire transfer.

Two hours later, your Chief Financial Officer walks into your office. The invoice was a sophisticated deepfake: a “business email compromise” attack generated by an adversarial AI. The purchase order match was a hallucination caused by a similar string of numbers in a legacy system. The money is gone.

The agent didn’t “fail” in the traditional sense. It followed its instructions: “Pay overdue invoices under $50k.” It just lacked the judgment to recognize fraud.

This scenario represents the single biggest operational risk of AI for companies in 2026: the “Speed of Execution” problem. We have given software agents the ability to move money, delete data, and terminate access faster than any human can review.

If you are a technology leader, your primary job is no longer just enabling innovation; it is engineering the brakes. We call this architecture “Liability Gating.” It is the practice of hard-coding operational limits that forbid AI agents from executing irreversible transactions, regardless of how confident the model claims to be.

The Illusion of Confidence in AI Companies

When we evaluate AI companies and their tools, we often look at benchmarks: accuracy scores, reasoning capabilities, and speed. But in an enterprise environment, a 99% accuracy rate on a wire transfer workflow means roughly one transfer in every hundred goes wrong, and each of those loses money. At scale, that is unacceptable.

The fundamental issue is that large language models (LLMs), the engines behind modern AI software, are probabilistic. They do not “know” facts; they predict likely continuations. When an agent says, “I am 100% confident this invoice is valid,” it is not expressing a certainty of truth. That sentence is itself a predicted continuation shaped by patterns in the training data, and the number in it is not a calibrated probability.

For a creative task like drafting a marketing email, a 1% error rate is a typo. For a transactional task like offboarding an employee or deleting a database table, a 1% error rate is a lawsuit.

AI for companies requires a different standard. We cannot rely on the model to police itself. We need an external, deterministic logic layer, a “Liability Gate,” that sits between the AI’s reasoning engine and your backend systems.

Defining “Irreversible” Actions in AI for Business

To implement Liability Gating, you must first classify every action your AI tools for business can take. You need to separate them into two buckets: Reversible and Irreversible.

Reversible Actions (Low Liability)

These are actions where the cost of a mistake is merely time.

- Drafting an email (but not sending it).

- Resetting a user’s password.

- Scheduling a meeting.

- Querying a database for a report.

If the AI gets these wrong, a human can fix it easily. The “Correction Cost” is low.

Irreversible Actions (High Liability)

These are actions where the cost of a mistake is financial, legal, or reputational damage that cannot be easily undone.

- Financial: Initiating wire transfers, approving expenses, changing vendor bank details.

- Data: Deleting records, archiving compliance logs, purging backup files.

- HR: Terminating employment status, revoking all system access, sending offer letters.

For these actions, AI in business must never have full autonomy. The Liability Gate is a hard-coded rule that says: “Even if the AI is 99.9% sure, it cannot execute this function without a cryptographically signed human approval.”
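To make the rule concrete, here is a minimal Python sketch of a gate that sorts actions into the two buckets and refuses irreversible ones unless a matching approval signature is presented. The action names, `APPROVAL_KEY`, and the functions `classify`, `sign_approval`, and `may_execute` are hypothetical. It uses an HMAC from Python's standard library as a stand-in for a signature; a production system would more likely use asymmetric signatures so the gate can verify approvals without being able to mint them.

```python
import hashlib
import hmac
from enum import Enum
from typing import Optional

# Hypothetical key held by the approval service; the agent never sees it.
APPROVAL_KEY = b"load-this-from-a-secrets-manager"

class Liability(Enum):
    REVERSIBLE = "reversible"
    IRREVERSIBLE = "irreversible"

# Hypothetical action catalogue mirroring the two buckets.
ACTION_CLASSES = {
    "draft_email": Liability.REVERSIBLE,
    "reset_password": Liability.REVERSIBLE,
    "schedule_meeting": Liability.REVERSIBLE,
    "query_report": Liability.REVERSIBLE,
    "initiate_wire_transfer": Liability.IRREVERSIBLE,
    "change_vendor_bank_details": Liability.IRREVERSIBLE,
    "delete_records": Liability.IRREVERSIBLE,
    "terminate_employment": Liability.IRREVERSIBLE,
}

def classify(action: str) -> Liability:
    # Fail closed: an action nobody has classified is treated as irreversible.
    return ACTION_CLASSES.get(action, Liability.IRREVERSIBLE)

def sign_approval(request_id: str, action: str, approver: str) -> str:
    """Computed by the approval service only after a human clicks Approve."""
    message = f"{request_id}|{action}|{approver}".encode()
    return hmac.new(APPROVAL_KEY, message, hashlib.sha256).hexdigest()

def may_execute(action: str, model_confidence: float, request_id: str,
                approver: Optional[str] = None,
                signature: Optional[str] = None) -> bool:
    # model_confidence is deliberately ignored: 0.999 and 0.2 get the same answer.
    del model_confidence
    if classify(action) is Liability.REVERSIBLE:
        return True
    if approver is None or signature is None:
        return False
    expected = sign_approval(request_id, action, approver)
    # Constant-time comparison avoids leaking how much of the signature matched.
    return hmac.compare_digest(expected, signature)
```

The model's confidence never changes the outcome, and unclassified actions default to the irreversible bucket. Because the request ID and action are part of the signed message, one approval cannot be replayed to authorize a different action.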

Building a Liability Gate

How do you build this? It is not about better prompting. It is about infrastructure.

A Liability Gate is a middleware component that sits between the agent and your backend APIs and intercepts AI-initiated actions before they reach your systems. It works with the Leena AI Integration Hub to do this safely.
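The interception pattern can be illustrated in-process with a Python decorator: the agent only ever receives the wrapped functions, and irreversible ones refuse to run unless the request ID appears in a server-side approval store. This is a hypothetical sketch, not the Leena AI Integration Hub API. `liability_gate`, `APPROVED_REQUESTS`, `ApprovalRequired`, and the example backend functions are illustrative names, and a real gate would typically run as a separate proxy or service so the agent cannot bypass it.

```python
import functools
from typing import Any, Callable, Optional, Set

class ApprovalRequired(Exception):
    """Raised when a gated backend call arrives without a recorded human approval."""

# Hypothetical server-side store of approved request IDs, written only by the
# human approval service and never by the agent.
APPROVED_REQUESTS: Set[str] = set()

def liability_gate(irreversible: bool) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
    """Wrap a backend function so every agent call passes through the gate first."""
    def decorator(func: Callable[..., Any]) -> Callable[..., Any]:
        @functools.wraps(func)
        def wrapper(*args: Any, request_id: Optional[str] = None, **kwargs: Any) -> Any:
            if irreversible:
                if request_id not in APPROVED_REQUESTS:
                    raise ApprovalRequired(f"{func.__name__} is blocked pending human approval")
                # Approvals are single-use: one click releases exactly one call.
                APPROVED_REQUESTS.discard(request_id)
            return func(*args, **kwargs)
        return wrapper
    return decorator

@liability_gate(irreversible=False)
def schedule_meeting(user: str, when: str) -> str:
    return f"meeting for {user} at {when}"

@liability_gate(irreversible=True)
def delete_user_account(user: str) -> str:
    return f"deleted {user}"
```

Note that the agent cannot unlock a call by passing a flag of its own; only an entry written into the approval store by the human-facing service does that.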

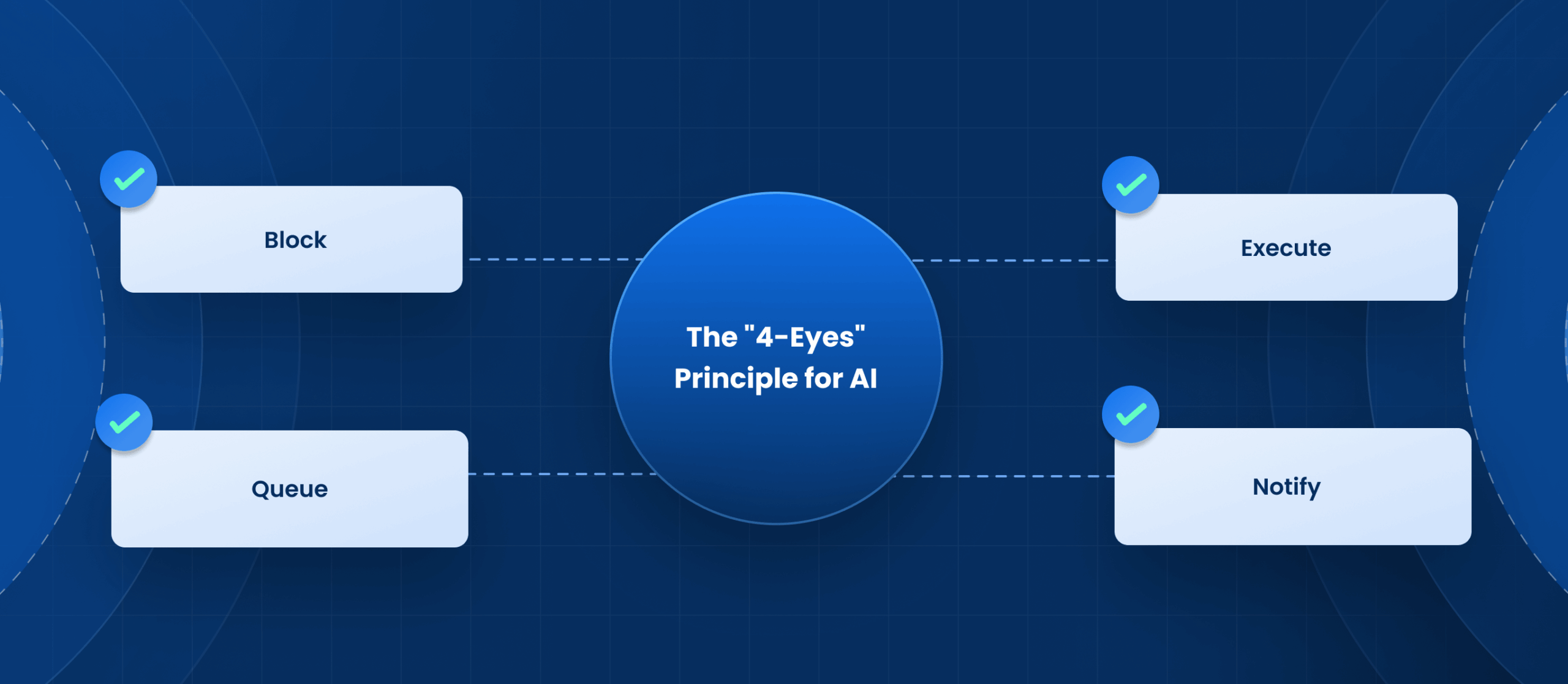

The “4-Eyes” Principle for AI

In banking, the “4-Eyes Principle” requires two people to approve high-value transactions. In AI-driven business automation, this translates to “One Bot, One Human.”

When an agent attempts an irreversible action (e.g., “Delete User Account”), the Liability Gate intercepts the request.

- Block: The API call is blocked at the network level.

- Queue: The request is placed in a “High-Risk Approval Queue.”

- Notify: A human (e.g., an IT Admin or HR Partner) receives a notification with the full context: “Agent X wants to delete User Y. Reason: Termination date reached. Approve/Deny?”

- Execute: Only after the human clicks “Approve” does the Liability Gate release the API call to the backend.
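The four steps map naturally onto a small approval queue. The Python sketch below is a hypothetical illustration: `HighRiskApprovalQueue`, `PendingAction`, and the `backend` and `notify` callables are assumptions standing in for your real system call and your chat or email integration.

```python
import uuid
from dataclasses import dataclass
from typing import Callable, Dict, Optional

@dataclass
class PendingAction:
    request_id: str
    agent: str
    action: str
    target: str
    reason: str
    status: str = "queued"            # queued -> executed or denied
    approver: Optional[str] = None

class HighRiskApprovalQueue:
    """Sketch of the Block / Queue / Notify / Execute flow."""

    def __init__(self, backend: Callable[[str, str], str],
                 notify: Callable[[str], None]) -> None:
        self.backend = backend        # the real system call, never handed to the agent
        self.notify = notify          # e.g. a chat or email hook to the reviewer
        self.pending: Dict[str, PendingAction] = {}

    def submit(self, agent: str, action: str, target: str, reason: str) -> str:
        # Block + Queue: the request is stored; nothing reaches the backend yet.
        request_id = str(uuid.uuid4())
        self.pending[request_id] = PendingAction(request_id, agent, action, target, reason)
        # Notify: give the human reviewer the full context.
        self.notify(f"{agent} wants to {action} {target}. Reason: {reason}. "
                    f"Approve/Deny? [{request_id}]")
        return request_id

    def decide(self, request_id: str, approver: str, approve: bool) -> Optional[str]:
        item = self.pending[request_id]
        if item.status != "queued":
            raise ValueError(f"request {request_id} is already {item.status}")
        item.approver = approver
        if not approve:
            item.status = "denied"
            return None
        # Execute: only a human approval releases the call to the backend.
        result = self.backend(item.action, item.target)
        item.status = "executed"
        return result
```

For example, `submit("Agent X", "delete", "User Y", "Termination date reached")` only stores the request and messages the reviewer; the backend is untouched until `decide(request_id, "it-admin", True)` is called.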

This adds latency. It might take an hour instead of a millisecond. But for irreversible actions, latency is a feature, not a bug. It prevents the “flash crash” scenario where an AI agent makes thousands of erroneous decisions in seconds.

Evaluating AI Technologies for Safety

As you assess the best AI tools for business, ask vendors specifically about their gating architecture.

Many AI technologies are built for speed and demo wow-factor. They want to show you “zero-touch” automation. As a CIO, you need to be the skeptic. You need to ask:

- “Can I configure hard stops for specific API endpoints?”

- “Does your platform support role-based approval workflows for high-risk actions?”

- “Is the approval log immutable for audit purposes?”

If a vendor says their model is “safe enough” to handle wire transfers without human oversight, they do not understand enterprise risk.

The Human-in-the-Loop as a Legal Shield for AI Companies

Liability Gating is not just operational safety; it is legal defense.

In 2026, we are seeing the first waves of regulatory frameworks targeting AI for companies. If an algorithm discriminates against an employee or facilitates financial fraud, regulators will ask: “Who made the decision?”

If the answer is “the black box,” you are exposed to a negligence claim. If the answer is “The AI recommended it, but a qualified human manager reviewed and approved it,” you have a chain of accountability.

Liability Gating ensures that for every consequential decision, there is a human name attached to the log. It shifts the benefits of AI from “reckless speed” to “augmented intelligence.” The AI does the legwork (gathering data, proposing the action), but the human retains the authority.
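One way to make a human name on the log hold up under audit is a hash-chained, append-only log in which each entry records who proposed and who approved an action and includes the hash of the previous entry. The sketch below is a minimal, hypothetical Python illustration; `AuditLog`, `GENESIS`, and the field names are assumptions. Chaining makes the log tamper-evident rather than tamper-proof: anyone with write access could rebuild the whole chain, so in practice you would also keep the latest hash (or the log itself) somewhere the writer cannot alter, such as write-once storage.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(body: Dict[str, str]) -> str:
    # Canonical JSON (sorted keys) so the same entry always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

class AuditLog:
    """Append-only, hash-chained log; editing or deleting a past entry breaks verify()."""

    def __init__(self) -> None:
        self.entries: List[Dict[str, str]] = []

    def append(self, action: str, proposed_by: str, approved_by: str,
               decision: str) -> Dict[str, str]:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"action": action, "proposed_by": proposed_by,
                "approved_by": approved_by, "decision": decision,
                "prev_hash": prev_hash}
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            # Each entry must point at its predecessor and match its own hash.
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```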

Leena AI: Safety-First Architecture for AI in Companies

At Leena AI, we build our agents with the assumption that enterprise environments are zero-tolerance zones for irreversible errors. We do not ask you to trust our model blindly; we give you the controls to govern it.

Our platform includes a native Action Guardrail system.

- Configurable Gating: You define which actions (e.g., “Update Salary,” “Delete User”) require human approval. This is policy-based, not just prompt-based.

- Contextual Handoff: When a gate is triggered, our agent doesn’t just stall. It packages the entire context (the request, the reasoning, and the cited policy) and presents it to your human expert in a clean dashboard for one-click review.

- Immutable Audit Logs: We record not just the action, but the approval. Every high-risk transaction carries the digital signature of the human who authorized it, ensuring full compliance traceability.

We design for the “Safe Enterprise,” where AI for companies accelerates work without accelerating risk.

Frequently Asked Questions

Does Liability Gating defeat the purpose of automation?

No. It focuses automation where it belongs: on the preparation of work, not the final execution of high-risk tasks. The AI still saves 95% of the effort by gathering data and filling forms; the human spends 5 seconds approving it instead of 30 minutes doing it.

Can we use Liability Gating for some departments but not others?

Yes. A robust AI software platform allows you to set different gates for different user groups. You might allow the IT team to auto-reset passwords (low risk) but require approval for the Finance team to change vendor details (high risk).
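Per-group gates can be expressed as data rather than prompts. A minimal Python sketch, assuming a hypothetical `AUTO_APPROVED` table keyed by group name and a hypothetical `needs_approval` check:

```python
from typing import Dict, Set

# Hypothetical policy table: actions each group may run without human approval.
AUTO_APPROVED: Dict[str, Set[str]] = {
    "it": {"reset_password", "schedule_meeting"},
    "finance": {"query_report"},
}

def needs_approval(group: str, action: str) -> bool:
    # Default deny: unknown groups and unlisted actions always go to a human.
    return action not in AUTO_APPROVED.get(group, set())
```

Because the default is deny, adding a new team or a new action changes nothing until someone explicitly allows it.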

How do we know which actions should be gated?

Conduct a “Blast Radius” analysis. If an action went wrong 1,000 times in one minute, would it bankrupt the company or land you in court? If yes, gate it. If it would just be annoying (like scheduling the wrong meetings), you can likely automate it.
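The blast-radius test can be written down as a simple rule. In this hypothetical Python sketch, `ActionProfile`, `should_gate`, the per-error cost estimates, and the $100,000 tolerable-loss threshold are all assumptions you would replace with your own risk figures.

```python
from dataclasses import dataclass

@dataclass
class ActionProfile:
    name: str
    cost_per_error: float   # estimated damage of one wrong execution, in dollars
    legal_exposure: bool    # could even one error plausibly end up in court?

def should_gate(profile: ActionProfile,
                errors_per_minute: int = 1_000,
                tolerable_loss: float = 100_000.0) -> bool:
    """Gate the action if 1,000 errors in a minute is unaffordable or legally risky."""
    worst_case = profile.cost_per_error * errors_per_minute
    return profile.legal_exposure or worst_case > tolerable_loss
```

With these illustrative numbers, a $50,000 wire transfer repeated 1,000 times is gated, a mistaken meeting invite at an assumed $5 each ($5,000 in total) is not, and anything with legal exposure is gated regardless of cost.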

Does Liability Gating prevent AI hallucinations?

It prevents hallucinations from becoming reality. The AI might still hallucinate a reason to fire someone, but the Liability Gate ensures that hallucination sits in a queue until a human sees it and rejects it.

What are the best AI tools for business regarding safety?

The best tools are those that decouple “reasoning” from “action.” Look for platforms that treat the LLM as the brain but use traditional code for the hands, enforcing strict permissions and approval logic.
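Decoupling reasoning from action usually means the model emits a structured proposal and deterministic code decides whether it is well-formed and permitted. The Python sketch below assumes the model is asked to reply with JSON of the form `{"action": ..., "args": {...}}`; `TOOL_SCHEMAS` and `parse_proposal` are hypothetical names. A proposal that passes validation would still be routed through the Liability Gate if the action is irreversible.

```python
import json
from typing import Any, Dict, Set

# Hypothetical tool catalogue: the only actions the "hands" can perform,
# each with the exact argument names it accepts.
TOOL_SCHEMAS: Dict[str, Set[str]] = {
    "schedule_meeting": {"attendee", "start"},
    "initiate_wire_transfer": {"vendor_id", "amount"},
}

def parse_proposal(raw_llm_output: str) -> Dict[str, Any]:
    """Turn the model's text into a validated proposal, or raise ValueError."""
    try:
        proposal = json.loads(raw_llm_output)
    except json.JSONDecodeError as exc:
        raise ValueError(f"proposal is not valid JSON: {exc}") from exc
    if not isinstance(proposal, dict):
        raise ValueError("proposal must be a JSON object")
    action = proposal.get("action")
    args = proposal.get("args")
    # Deterministic checks: known action, exact argument set, nothing extra.
    if not isinstance(action, str) or action not in TOOL_SCHEMAS:
        raise ValueError(f"unknown action: {action!r}")
    if not isinstance(args, dict) or set(args) != TOOL_SCHEMAS[action]:
        expected = sorted(TOOL_SCHEMAS[action])
        raise ValueError(f"arguments for {action} must be exactly {expected}")
    return {"action": action, "args": args}
```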

Is Liability Gating expensive to implement?

It is cheaper than the alternative. Building approval workflows costs little compared with a single erroneous wire transfer or a wrongful termination lawsuit caused by ungoverned AI in business.

Can Liability Gating protect against prompt injection attacks?

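Yes, it contains them. If a hacker tricks the AI into trying to export your customer database, the Liability Gate for “Data Export” intercepts the attempt and holds it for human review, so the injected instruction cannot execute on its own. The reviewer still has to recognize the request and deny it.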