The “Dead-End Search” Problem

Consider the daily reality of your average employee in late 2025. They have a problem, perhaps a blocked invoice or a confused benefits enrollment status. They go to your enterprise portal, type in their question, and your GenAI chatbot delivers a beautifully written, perfectly summarized paragraph explaining the policy.

And then? The employee stops. The chatbot has given them the knowledge, but it hasn’t given them the outcome. The employee still has to open a new tab, log into SAP or Workday, navigate three menus, and apply the policy they just read.

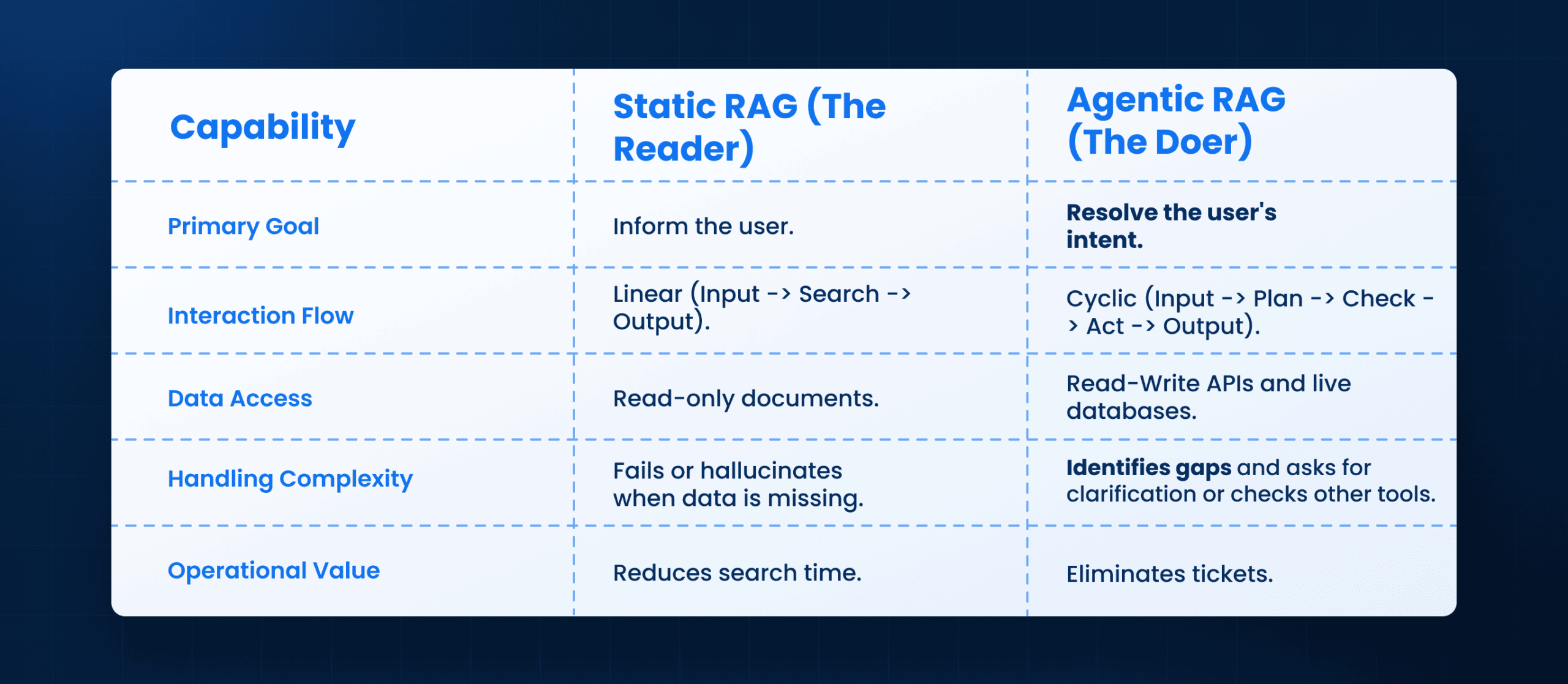

This is the “Dead-End Search.” Despite all the investments in vector databases and LLMs, your systems are still passive. They can read, but they cannot act. For the CIO, this represents a massive inefficiency. You are paying for both the search tool and the eventual helpdesk ticket that gets filed when the employee gets frustrated and gives up.

The operational shift for 2026 is not about better search results. It is about agentic RAG, a technology that bridges the “Last Mile” between knowing the answer and executing the solution.

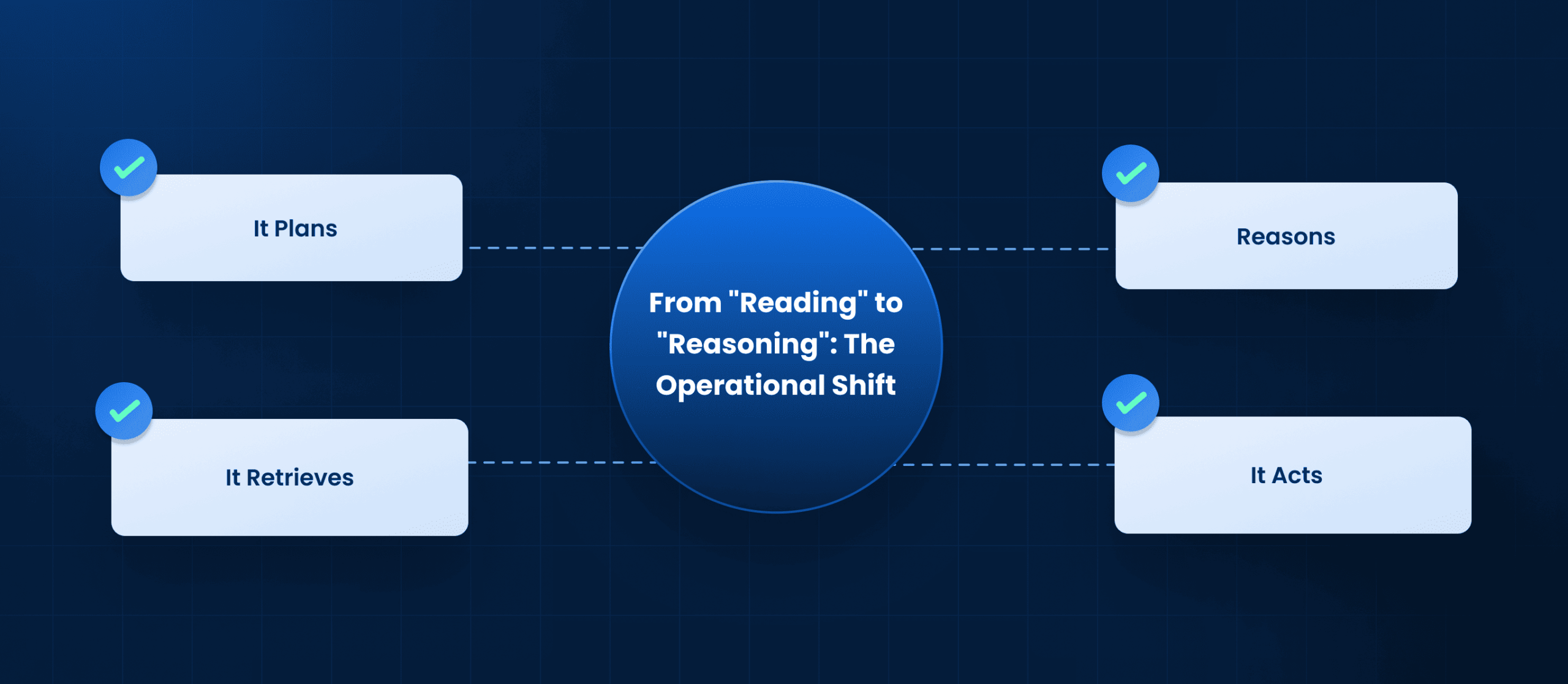

From “Reading” to “Reasoning”: The Operational Shift

To understand why agentic RAG is capturing the attention of enterprise leaders, we have to look at system behavior.

Traditional Retrieval-Augmented Generation (RAG) is linear. It looks for text, matches keywords or semantics, and summarizes the findings. It is a “stateless” interaction; it doesn’t “think” about what comes next.

Agentic RAG changes the fundamental behavior of the software. Instead of just retrieving information, the system adopts an iterative “loop” of reasoning. When it receives a query, it doesn’t just look for an answer; it plans a path to a resolution.

- It Plans: “To answer this, I need the policy PDF and the user’s live status.”

- It Retrieves: It fetches the document.

- It Reasons: “The document says X, but the system status says Y. I need to resolve this conflict.”

- It Acts: It queries a third API or triggers a workflow to align the system with the policy.
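As a rough illustration, one pass of the plan, retrieve, reason, act loop above could be sketched as follows. Everything here is hypothetical: `fetch_policy` and `fetch_live_status` are stand-ins for a document search and a system-of-record call, and the workflow name is invented for the example. A production agent would typically let a model produce the plan rather than hard-coding it.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class AgentState:
    """Everything the agent has gathered so far for one request."""
    query: str
    policy_text: Optional[str] = None
    live_status: Optional[str] = None
    actions: List[str] = field(default_factory=list)


def fetch_policy(query: str) -> str:
    # Hypothetical stand-in for document retrieval (for example, a vector search).
    return "Enrollment status must be ACTIVE once onboarding is complete."


def fetch_live_status(query: str) -> str:
    # Hypothetical stand-in for a call to the live system of record.
    return "PENDING"


def run_agent(query: str, required_status: str = "ACTIVE") -> AgentState:
    state = AgentState(query=query)

    # Plan: decide which sources are needed before answering.
    plan = ["policy", "live_status"]

    # Retrieve: gather each planned input.
    for source in plan:
        if source == "policy":
            state.policy_text = fetch_policy(query)
        elif source == "live_status":
            state.live_status = fetch_live_status(query)

    # Reason: does the live system agree with what the policy requires?
    policy_requires = required_status in (state.policy_text or "")
    conflict = policy_requires and state.live_status != required_status

    # Act: queue a workflow only when a conflict was found.
    if conflict:
        state.actions.append(f"open_workflow:set_status={required_status}")
    return state


result = run_agent("Why is my benefits enrollment still pending?")
print(result.actions)
```

The point of the sketch is the shape, not the rules: the action is derived from comparing two sources, which a retrieve-and-summarize pipeline never does.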

For a technology head, this means your stack stops being a library and starts acting like an analyst. The definition of agentic RAG in this context is simply software that refuses to stop at the summary.

Overcoming the “Context Wall”

One of the biggest failures of early AI pilots was the “Context Wall.” A bot could tell you how to reset a password but couldn’t tell you why it was locked.

Agentic RAG breaks through this wall by treating your disparate systems (ITSM, HRIS, ERP) as extensions of its own memory. It doesn’t just “know” things; it can “go look” at things.

The “Agentic” Difference in Service Delivery

When you apply agentic RAG to the gritty reality of IT and HR ticketing, the metrics change. You stop measuring “search success rate” and start measuring “zero-touch resolution.”

In a traditional setup, complex queries are routed to humans because machines lack the nuance to handle exceptions. Agentic RAG thrives on exceptions.

Consider a complex IT request: “My laptop is slow and I need a replacement.”

- Standard AI: Pastes the “Hardware Replacement Policy.”

- Agentic RAG:

  - Checks the asset management system for the laptop’s age.

  - Checks the performance monitoring tool for recent CPU spikes.

  - Checks the procurement budget for the user’s department.

  - Synthesizes a response: “Your laptop is only 18 months old (policy requires 36), but I see critical CPU errors. I can open an exception ticket for you with these logs attached.”
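The decision at the end of the laptop example can be sketched as a small rule over the three facts the agent collected. This is only an assumed shape: the 36-month threshold comes from the example, while the `LaptopFacts` fields and the outcome names are hypothetical, and the three lookups are represented by plain values rather than real system calls.

```python
from dataclasses import dataclass

POLICY_MIN_AGE_MONTHS = 36  # threshold quoted in the example above


@dataclass
class LaptopFacts:
    age_months: int           # from the asset management system
    critical_cpu_errors: int  # from the performance monitoring tool
    budget_available: bool    # from the department's procurement budget


def decide_replacement(facts: LaptopFacts) -> str:
    """Return a hypothetical outcome label for a replacement request."""
    if facts.age_months >= POLICY_MIN_AGE_MONTHS:
        # Old enough under policy; only budget can hold it up.
        return "standard_replacement" if facts.budget_available else "wait_for_budget"
    if facts.critical_cpu_errors > 0:
        # Too new under policy, but the evidence justifies an exception.
        return "exception_ticket"
    return "decline_with_policy"


print(decide_replacement(LaptopFacts(age_months=18, critical_cpu_errors=3, budget_available=True)))
```

With the values from the example (18 months old, critical CPU errors present), the function returns `exception_ticket`, which matches the response the agent gives the employee.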

This is the power of agentic RAG in practice: it automates the investigation, not just the conversation.

Trust, Governance, and “Chain of Thought”

For the CIO, the scariest word in enterprise tech is “autonomy.” Unsupervised automation creates risk. However, agentic RAG can actually offer better governance than standard black-box AI models.

The secret lies in “Observability.” Because agentic RAG systems function by breaking tasks into steps, they generate a “Chain of Thought” log. They don’t just spit out an answer; they show their work.

Step 1: I searched the Knowledge Base.

Step 2: I found conflicting info.

Step 3: I verified with the HR API.

Step 4: I applied the ‘California’ rule set.
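One possible way to capture steps like these is a structured trace that records each action as it happens. The sketch below is an assumption about what such a log might look like, not a description of any particular product: the `ReasoningTrace` class, its field names, and the request ID are all invented for illustration.

```python
import json
from datetime import datetime, timezone


class ReasoningTrace:
    """Append-only record of the steps an agent took for one request."""

    def __init__(self, request_id: str):
        self.request_id = request_id
        self.steps = []

    def record(self, action: str, detail: str) -> None:
        self.steps.append({
            "step": len(self.steps) + 1,  # 1-based, in order of execution
            "action": action,
            "detail": detail,
            "at": datetime.now(timezone.utc).isoformat(),
        })

    def to_json(self) -> str:
        # Serialized form that could be shipped to an audit store.
        return json.dumps({"request_id": self.request_id, "steps": self.steps}, indent=2)


trace = ReasoningTrace("req-001")  # hypothetical request ID
trace.record("search_knowledge_base", "searched the Knowledge Base")
trace.record("detect_conflict", "found conflicting info")
trace.record("verify_with_api", "verified with the HR API")
trace.record("apply_rule_set", "applied the 'California' rule set")
print(trace.to_json())
```

Because every entry carries a step number and a timestamp, an auditor can replay the order of operations without relying on the final answer text.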

This digital paper trail allows technology leaders to audit the AI’s logic. You can see exactly why a decision was made. In 2026, this “explainability” will be the primary requirement for compliance in finance and HR automation. Trust is not assumed; it is audited.

Leena AI: Embedding Agentic RAG for the Enterprise

At Leena AI, we recognized early on that providing information was not enough for the modern enterprise. Employees don’t want to chat; they want to get back to work.

Our AI Colleagues leverage agentic RAG to function as true operational partners. We don’t just index your SharePoint; we integrate with your backend reality.

- Deep Reasoning: When an employee asks about a payroll discrepancy, our AI Colleague uses agentic RAG to cross-reference the pay slip, the time-tracking log, and the holiday policy to provide a mathematically accurate explanation, not a generic FAQ.

- Cross-System Action: If the reasoning phase identifies a genuine error, the agent can, within strict permission guardrails, initiate the correction workflow in the payroll system directly.

This approach transforms the AI from a “search bar” into a productive member of the team, capable of handling the end-to-end lifecycle of a request without human hand-holding.

The Hidden Value: Cleaning Your Data Swamp

There is a secondary, often overlooked benefit to deploying agentic RAG. It acts as a continuous auditor of your enterprise knowledge.

When an agent tries to execute a task and fails because the documentation contradicts the system code, it flags that anomaly. It highlights the “rot” in your wiki pages.

For the CTO, this turns your service delivery platform into a data hygiene engine. Instead of a once-a-year manual audit of your knowledge base, you have thousands of autonomous agents testing the validity of your documentation every single day. This feedback loop ensures that your enterprise knowledge base remains a living, breathing, and accurate asset.
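A minimal sketch of that feedback loop, assuming the agent can read both the value a document states and the value the live system reports: compare the two and emit a flag for every mismatch. The setting names and values below are made up for the example.

```python
from typing import Dict, List


def find_stale_docs(documented: Dict[str, str], live: Dict[str, str]) -> List[dict]:
    """Flag every setting where the documentation disagrees with the live system."""
    flags = []
    for setting, doc_value in documented.items():
        live_value = live.get(setting)
        # Skip settings the live system does not expose; flag real mismatches.
        if live_value is not None and live_value != doc_value:
            flags.append({"setting": setting, "documented": doc_value, "live": live_value})
    return flags


# Hypothetical values: what the wiki says versus what the system enforces.
wiki = {"password_max_age_days": "90", "vpn_client": "v5"}
system = {"password_max_age_days": "60", "vpn_client": "v5"}
print(find_stale_docs(wiki, system))
```

Each flag names the setting and both values, so a knowledge owner can correct the page without re-running the investigation.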

Conclusion: The End of “Good Enough” Automation

The era of “good enough” automation, where a bot deflects a ticket by pasting a link, is over. In 2026, the cost of human intervention is too high, and the patience of employees is too low.

Agentic RAG is the technological response to this pressure. It represents a maturation of enterprise AI, moving from the novelty of conversation to the utility of execution. For the technology leader, the question is no longer “Can we find the data?” but “Can we trust the system to act on it?”

With agentic RAG, the answer is finally yes.

Frequently Asked Questions

How does agentic RAG handle conflicting information between documents and systems?

One of the core strengths of agentic RAG is its ability to detect conflict. Unlike standard search, which might just pick the “most relevant” text, an agentic system can identify that the document says one thing while the live system API says another. It can then be configured to prioritize the “system of record” (live data) or flag the discrepancy for human review, preventing the spread of misinformation.
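The two configurations described in this answer can be sketched as a single function with a switch. This is an illustrative assumption about how such a rule might be expressed; the `Resolution` type, the `prefer_system_of_record` flag, and the sample values are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Resolution:
    value: Optional[str]  # the answer to give, or None if a human must decide
    source: str           # where the answer came from
    needs_review: bool    # True when the discrepancy is escalated


def resolve(doc_value: str, system_value: str, prefer_system_of_record: bool = True) -> Resolution:
    if doc_value == system_value:
        return Resolution(doc_value, "both", False)
    if prefer_system_of_record:
        # Live data wins over the document.
        return Resolution(system_value, "system_of_record", False)
    # Otherwise hold the answer back and flag it for a person.
    return Resolution(None, "conflict", True)


print(resolve("15 days", "12 days"))
print(resolve("15 days", "12 days", prefer_system_of_record=False))
```

Which branch is appropriate is a policy choice: trusting the system of record keeps the conversation moving, while escalation is safer when the live data itself might be wrong.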

What is the agentic RAG approach to security permissions?

Agentic RAG architectures respect the existing entitlement layer of your enterprise. The “agent” inherits the access rights of the user engaging with it. It cannot “reason” over data the user isn’t allowed to see. If a user asks a question that requires sensitive Finance data they don’t have access to, the agent will decline the request, just as a human system administrator would.
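A simple way to picture inherited access rights is to filter the agent's tools by the entitlements of the person asking. The sketch below assumes entitlements are plain strings and that each tool declares what it requires; the tool names and entitlement labels are hypothetical, and a real deployment would defer to the enterprise's own identity system.

```python
from typing import Dict, List, Set

# Hypothetical tools and the entitlements each one requires.
TOOL_REQUIREMENTS: Dict[str, Set[str]] = {
    "lookup_pto_balance": {"hr.read"},
    "read_general_ledger": {"finance.read"},
}


def allowed_tools(user_entitlements: Set[str]) -> List[str]:
    """Tools the agent may call on behalf of this user."""
    return sorted(
        name
        for name, required in TOOL_REQUIREMENTS.items()
        if required <= user_entitlements  # subset check: user holds every required right
    )


def call_tool(name: str, user_entitlements: Set[str]) -> str:
    if name not in allowed_tools(user_entitlements):
        # Decline instead of reasoning over data the user cannot see.
        raise PermissionError(f"user is not entitled to use {name}")
    return f"{name}: ok"  # a real tool call would happen here


print(allowed_tools({"hr.read"}))
```

An HR-only user in this sketch can reach the PTO lookup but gets a `PermissionError` for the ledger, mirroring the declined Finance request described above.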

Does implementing agentic RAG require a new vector database?

Not necessarily. Agentic RAG is more about the orchestration layer (the reasoning engine) than the storage layer. While it relies on vector databases for document retrieval, its primary value comes from its ability to connect that retrieval to other tools (APIs, SQL databases). It typically sits on top of your existing data infrastructure rather than replacing it.

Why is agentic RAG considered safer than pure generative AI?

Pure generative models rely on training data that can be outdated, and they can hallucinate when they lack the facts. Agentic RAG grounds every response in retrieved context (your docs) and verifies it with tool usage (your APIs). This “grounding” significantly reduces the risk of the AI making things up, as it is constantly checking its facts against real-time enterprise data.

Can agentic RAG reduce software licensing costs?

Indirectly, yes. By enabling users to resolve complex tasks through a unified chat interface, you may reduce the number of “full-seat” licenses needed for complex backend systems. Occasional users can interact with the system via the agentic RAG interface (within the limits of API calls) without needing to log in to the expensive core platform directly.

What is agentic RAG doing during the thinking pause?

When an agentic RAG system pauses before answering, it is executing a “Chain of Thought.” It is decomposing the user’s query into sub-tasks, selecting the right tools (e.g., “Search Wiki” vs. “Check Jira”), executing those tool calls, and synthesizing the results. It is effectively “planning” the answer rather than just predicting the next word.
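To make the pause concrete, here is a toy version of the select-and-execute step for two sub-tasks. It is a sketch under heavy assumptions: in a real agent the model chooses the tool, whereas here a keyword rule stands in for that choice, and the two tools simply return placeholder strings.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tools; each returns a placeholder instead of calling a real system.
TOOLS: Dict[str, Callable[[str], str]] = {
    "search_wiki": lambda task: f"wiki results for '{task}'",
    "check_jira": lambda task: f"jira status for '{task}'",
}


def select_tool(sub_task: str) -> str:
    # Keyword rule standing in for the model's tool choice.
    return "check_jira" if "ticket" in sub_task.lower() else "search_wiki"


def execute(sub_tasks: List[str]) -> List[Tuple[str, str]]:
    """Run each sub-task through its selected tool and collect the results."""
    results = []
    for task in sub_tasks:
        tool = select_tool(task)
        results.append((tool, TOOLS[tool](task)))
    return results


for tool, output in execute(["VPN setup policy", "status of ticket IT-42"]):
    print(tool, "->", output)
```

The synthesis step would then combine these results into one reply, which is why the answer arrives after a short delay instead of instantly.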

Why are legacy system integrations a major challenge for scaling Agentic AI?

Legacy enterprise systems were not built for real-time, autonomous interactions. They often lack the modular APIs and robust identity management that a true Agentic AI platform needs to securely and reliably execute complex, multi-step workflows across the entire enterprise. Modern, open platforms are essential to scale Agentic AI.