Managing Variable Costs for AI in Finance Industry Helpdesks

Real Enterprise Bottleneck

In 2026, the economic model of enterprise support has fundamentally shifted. For decades, CIOs purchased ticketing systems via fixed-price licenses per agent or per employee. You knew in January exactly what the bill would be in December.

The introduction of generative AI has broken this predictability. When you deploy AI-powered internal support tools in the finance industry, you are no longer paying for software access; you are paying for “compute per thought.” Every time an employee asks a question, the system consumes tokens to retrieve, process, and generate an answer.

The operational bottleneck arises when variable consumption clashes with fixed operating budgets.

- The “Bill Shock” Phenomenon: A sudden spike in complex queries (e.g., during tax season or M&A activity) causes the monthly invoice to triple without warning.

- Shadow Costs: Departments deploy local “assistants” without realizing that processing large PDF policy documents costs significantly more than simple database lookups.

- Lack of Governance: Most enterprises lack the “metering” infrastructure to track which department is consuming the most inference compute, making chargebacks impossible.
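The metering gap described in the last bullet can be illustrated with a minimal sketch. It assumes each model call is logged with a department tag and token counts; the `UsageEvent` fields, the model tiers, and the prices are hypothetical placeholders, and the single blended rate per model is a simplification (providers usually price input and output tokens differently).

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical prices in USD per 1,000 tokens; real rates depend on your provider.
PRICE_PER_1K = {"small": 0.0005, "large": 0.01}

@dataclass
class UsageEvent:
    department: str
    model: str          # key into PRICE_PER_1K
    input_tokens: int
    output_tokens: int

def chargeback(events):
    """Sum estimated spend per department from a list of usage events."""
    totals = defaultdict(float)
    for e in events:
        tokens = e.input_tokens + e.output_tokens
        totals[e.department] += tokens / 1000 * PRICE_PER_1K[e.model]
    return dict(totals)

events = [
    UsageEvent("tax", "large", 40_000, 2_000),
    UsageEvent("tax", "small", 1_000, 200),
    UsageEvent("hr", "small", 3_000, 500),
]
report = chargeback(events)

# Print departments from highest to lowest estimated spend.
for dept, cost in sorted(report.items(), key=lambda kv: -kv[1]):
    print(f"{dept}: ${cost:.4f}")
```

Even a report this simple is enough to start a chargeback conversation, because it ties spend to an owner rather than to a shared invoice.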

This is not a technology problem; it is a financial operations (FinOps) problem. The technology works, but the cost model is bleeding the operational budget dry.

AI in Finance Industry — Workflow Breakdown

To control costs, you must understand where tokens are consumed in the workflow. You cannot manage what you do not measure. In a finance AI deployment, cost is incurred in three stages.

Stage 1: Intent Classification & Routing (The Gatekeeper)

- The user submits a query.

- A lightweight, low-cost model analyzes the “intent.”

- Operational Check: Is this a simple “password reset” (low cost) or a complex “variance analysis” (high cost)?

- Cost Driver: If you skip this step and send every query to your most powerful model, you are overspending by roughly 600%.
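The gatekeeper step can be sketched as follows. This is an illustration only: a keyword match stands in for the lightweight classifier model, and the intent list and the tier names `small-model` and `large-model` are hypothetical.

```python
# Hypothetical keyword list and model tier names, for illustration only.
SIMPLE_INTENTS = ("password reset", "unlock account", "vpn", "holiday calendar")

def classify_intent(query: str) -> str:
    """Stand-in for the lightweight classifier: returns 'simple' or 'complex'."""
    q = query.lower()
    return "simple" if any(k in q for k in SIMPLE_INTENTS) else "complex"

def route(query: str) -> str:
    """Return the model tier that should handle the query."""
    return "small-model" if classify_intent(query) == "simple" else "large-model"

print(route("I need a password reset"))
print(route("Explain the Q3 variance in travel spend"))
```

In a real deployment the classifier would itself be a cheap model call, so its cost should be counted in the per-query total.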

Stage 2: Retrieval and Context Loading (The Heavy Lifter)

- In banking and compliance scenarios, the system must retrieve internal documents using retrieval-augmented generation (RAG).

- The system searches the vector database and pulls relevant chunks of text.

- Operational Check: How much text is being pulled? Reading a 100-page regulatory PDF to answer one question is the primary cause of cost spikes.
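One way to bound the amount of text pulled is a hard token budget on retrieved context. The sketch below assumes the retriever returns `(score, text)` pairs; the function names are hypothetical, and the four-characters-per-token estimate is a rough heuristic that a real tokenizer should replace.

```python
def estimate_tokens(text: str) -> int:
    """Rough heuristic (about 4 characters per token); use a real tokenizer in practice."""
    return max(1, len(text) // 4)

def select_chunks(ranked_chunks, max_context_tokens):
    """Keep the highest-scoring chunks that fit within the token budget.

    ranked_chunks: list of (score, text); a higher score means more relevant.
    Returns (selected_texts, tokens_used).
    """
    selected, used = [], 0
    for score, text in sorted(ranked_chunks, key=lambda c: c[0], reverse=True):
        cost = estimate_tokens(text)
        if used + cost > max_context_tokens:
            continue  # skip any chunk that would exceed the budget
        selected.append(text)
        used += cost
    return selected, used

chunks = [(0.91, "a" * 400), (0.40, "b" * 4000), (0.75, "c" * 800)]
selected, used = select_chunks(chunks, max_context_tokens=350)
print(len(selected), used)
```

Here the large, low-relevance chunk is dropped and the two more relevant chunks fit inside the budget.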

Stage 3: Generation and Reasoning (The Output)

- The model processes the retrieved context and generates an answer.

- Operational Check: Is the model configured to be concise? Verbose models that write “polite” paragraphs cost more than models that output bulleted lists.
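The three stages can be combined into a rough per-query cost estimate. The prices below are hypothetical placeholders rather than any provider's rates, and the sketch assumes the classifier emits a five-token label; the point is the shape of the arithmetic, not the numbers.

```python
# Hypothetical USD prices per 1,000 tokens; substitute your provider's rates.
SMALL = {"input": 0.0002, "output": 0.0008}
LARGE = {"input": 0.005, "output": 0.015}

def call_cost(price, input_tokens, output_tokens):
    """Cost of one model call given separate input and output prices."""
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1000

def query_cost(query_tokens, context_tokens, answer_tokens):
    # Stage 1: the small model reads the query and emits a short intent label.
    routing = call_cost(SMALL, query_tokens, 5)
    # Stages 2 and 3: the large model reads query plus retrieved context, then answers.
    generation = call_cost(LARGE, query_tokens + context_tokens, answer_tokens)
    return routing + generation

concise = query_cost(query_tokens=50, context_tokens=2_000, answer_tokens=100)
verbose = query_cost(query_tokens=50, context_tokens=2_000, answer_tokens=600)
print(f"concise: ${concise:.6f}  verbose: ${verbose:.6f}")
```

Under these assumed prices, retrieved context dominates the concise query's cost, while the longer answer adds a noticeable premium to the verbose one.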

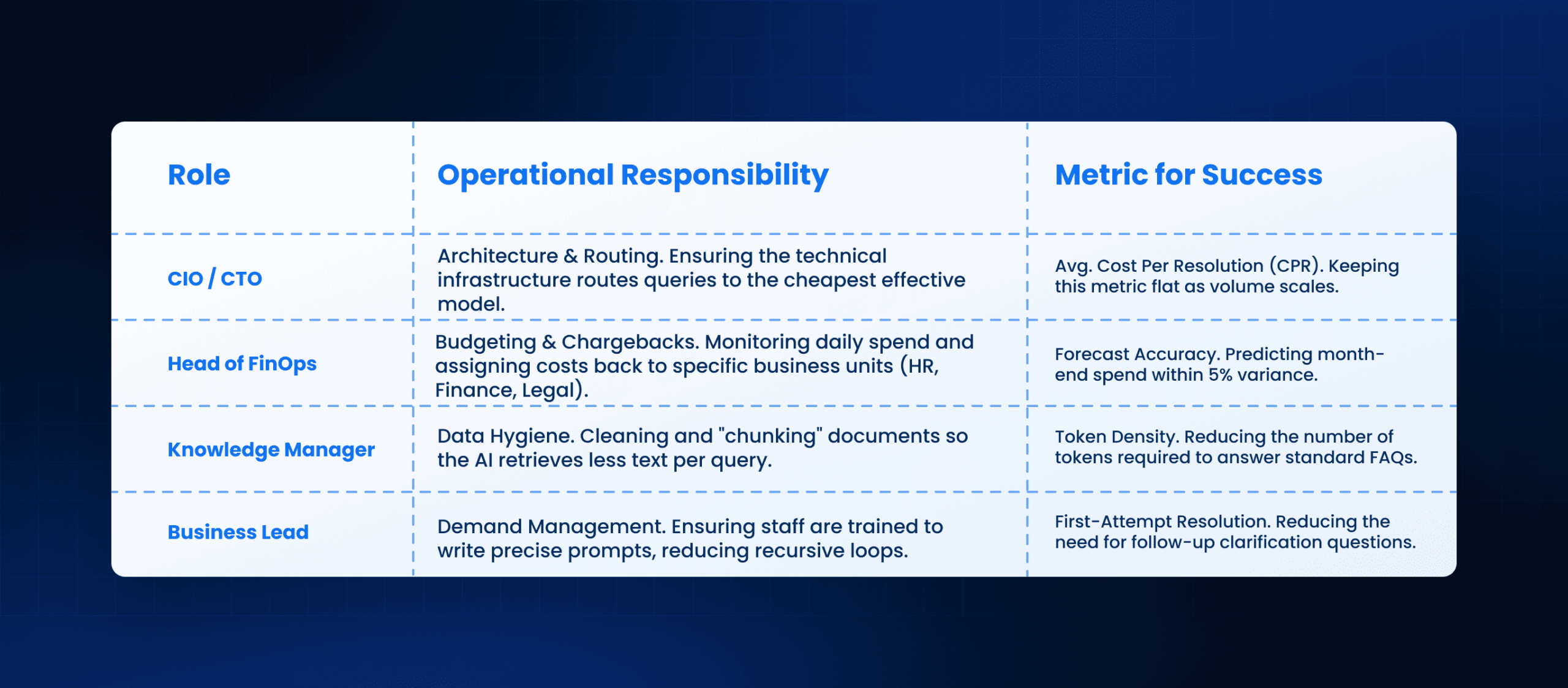

AI in Finance Industry — Dependencies & Ownership

Managing these variable costs requires a new governance structure. It is no longer solely an IT problem; it is a shared responsibility between IT, Finance, and the Business Units.

Failure to define these dependencies leads to the “tragedy of the commons,” where every department overuses the AI tools because it is not directly paying the bill.

AI in Finance Industry — Scale Failure Points

As you scale AI-driven finance support across the enterprise, distinct failure points emerge that threaten budget stability. These are not theoretical; they are the most common reasons why deployments get paused or cancelled in large US enterprises.

Failure Point A: The “Recursive Loop” Trap

- Employees often ask vague questions.

- Poorly configured agents will enter a loop of asking clarifying questions.

- Result: A single issue generates 50+ interactions, driving up token costs by 10x without resolving the user’s problem.
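A simple guard against this loop is a cap on clarifying questions, after which the conversation is handed to a human. The sketch below is one possible shape for that guard; the limit of two and the action names are assumptions, not a prescribed configuration.

```python
from dataclasses import dataclass

MAX_CLARIFICATIONS = 2  # hypothetical limit; tune per workflow

@dataclass
class Conversation:
    clarifications_asked: int = 0
    escalated: bool = False

def next_action(conv: Conversation, query_is_ambiguous: bool) -> str:
    """Decide whether to answer, ask a clarifying question, or hand off to a human."""
    if not query_is_ambiguous:
        return "answer"
    if conv.clarifications_asked >= MAX_CLARIFICATIONS:
        conv.escalated = True
        return "escalate_to_human"
    conv.clarifications_asked += 1
    return "ask_clarifying_question"

conv = Conversation()
actions = [next_action(conv, query_is_ambiguous=True) for _ in range(4)]
print(actions)
```

Once the cap is reached, every further ambiguous turn escalates instead of consuming more tokens.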

Failure Point B: The “Compliance Over-Fetch” (AI in Accounting and Finance)

- In accounting and finance workflows, accuracy is paramount.

- To ensure accuracy, engineers often configure the system to retrieve too much context (e.g., “Read the entire 2025 Tax Code just in case”).

- Result: You are paying to process thousands of pages of text for queries that only need one specific clause.

Failure Point C: Lack of Degradation Protocols

- Most systems are binary: they are either “on” or “off.”

- When the monthly budget cap is hit, the system shuts down, causing a service outage.

- Result: Operational chaos. You need a “degradation mode” that switches to cheaper, keyword-based search when the budget is tight, rather than turning off completely.
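A degradation protocol can be as small as a function that maps budget consumption to a service tier. In this sketch the thresholds and tier names are hypothetical, and the intermediate `small_model_only` tier is an added assumption that sits between full service and the keyword-search fallback described above.

```python
def select_mode(spent: float, monthly_budget: float,
                degrade_at: float = 0.8, keyword_only_at: float = 1.0) -> str:
    """Pick a service tier from the share of the monthly budget already spent."""
    if monthly_budget <= 0:
        raise ValueError("monthly_budget must be positive")
    used = spent / monthly_budget
    if used >= keyword_only_at:
        return "keyword_search"      # no model calls; cheapest fallback
    if used >= degrade_at:
        return "small_model_only"    # still generative, but at lower cost
    return "full_service"

for spent in (500, 850, 1000):
    print(spent, select_mode(spent, monthly_budget=1000))
```

The service stays available at every spend level; only the quality tier changes.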

AI in Finance Industry — Implementation Realities

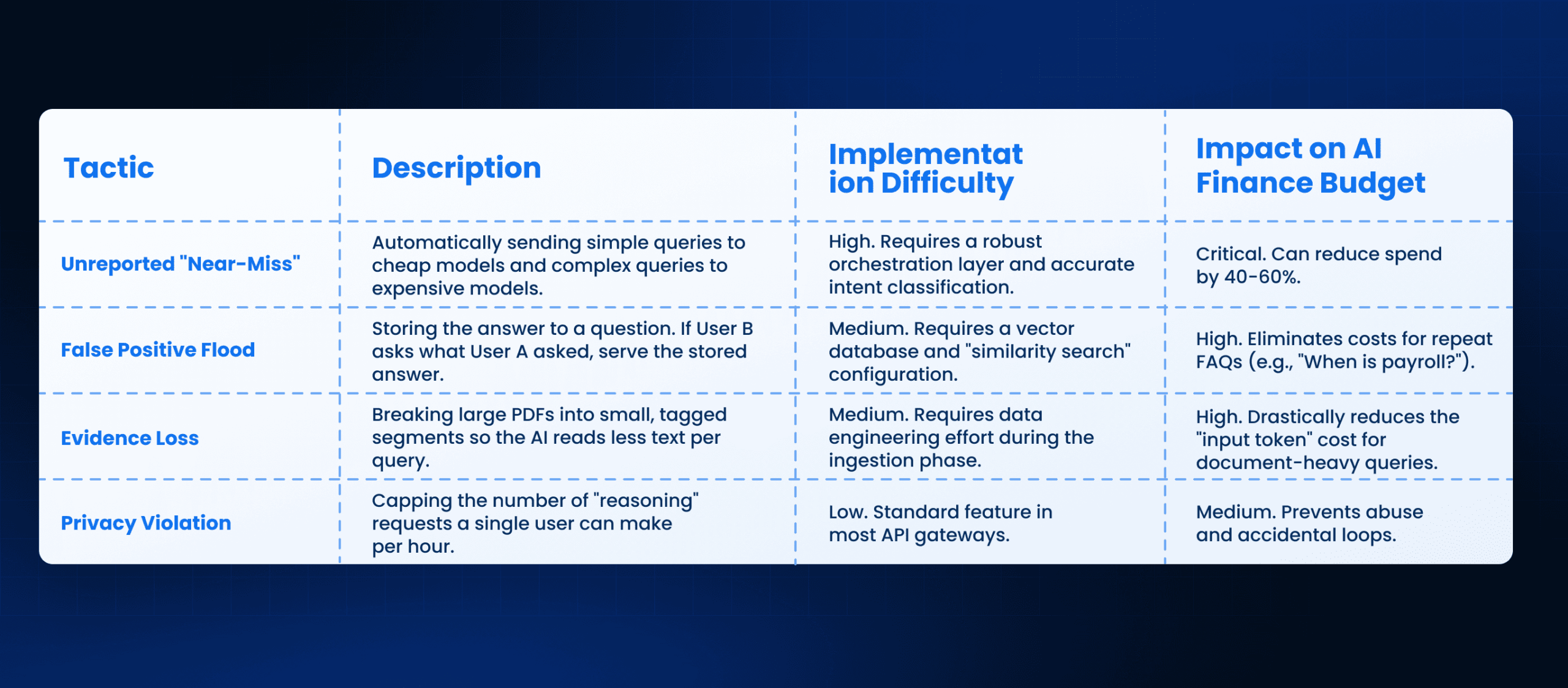

To fix these issues, you must implement specific tactical controls. The goal is to move from “paying for everything” to “paying for value.”

This involves three primary implementation strategies: Model Routing, Semantic Caching, and Data Chunking. These are the levers you can pull to lower your AI bill.
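Of the three levers, semantic caching is the least self-explanatory: a repeated or near-identical question is answered from a stored response instead of triggering a new model call. The sketch below uses a toy bag-of-words vector in place of a real embedding model, and the `SemanticCache` class and its 0.8 threshold are hypothetical.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; a real system would use an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class SemanticCache:
    def __init__(self, threshold: float = 0.8):
        self.threshold = threshold
        self.entries = []  # list of (embedding, answer)

    def get(self, query: str):
        """Return a cached answer if a stored query is similar enough, else None."""
        q = embed(query)
        best = max(self.entries, key=lambda e: cosine(q, e[0]), default=None)
        if best and cosine(q, best[0]) >= self.threshold:
            return best[1]
        return None

    def put(self, query: str, answer: str):
        self.entries.append((embed(query), answer))

cache = SemanticCache()
cache.put("How do I reset my password?", "Use the self-service portal.")
hit = cache.get("how do i reset my password")
miss = cache.get("What is the travel expense limit?")
print(hit, miss)
```

The threshold is the main design choice: set it too low and employees receive answers to a different question; set it too high and the cache rarely hits.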

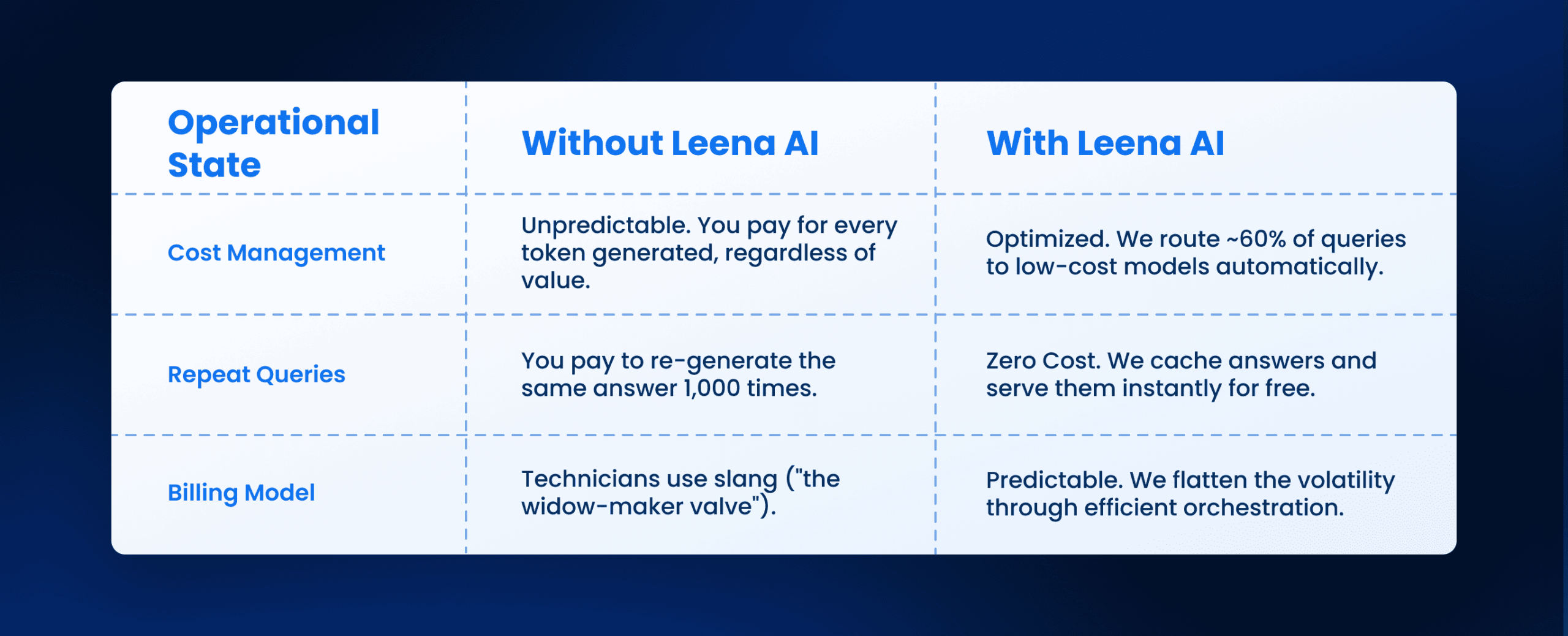

How Leena AI Operationalizes This

At Leena AI, we do not treat inference costs as an afterthought. We provide the operational discipline required to run finance AI systems at scale without budget surprises.

We act as the orchestration layer that sits between your employees and the raw compute models. We handle the routing, caching, and limiting so your team does not have to build these controls from scratch.

Leena AI Operational Impact

Conclusion

The transition to AI-based support systems in the finance industry requires a fundamental change in how enterprise IT budgets are managed. You are no longer buying software; you are managing a utility.

On Monday morning, you should request an audit of your current token consumption. Identify which departments are driving the highest usage and verify if “simple” queries are being routed to expensive models. If you do not have visibility into this data, your first step is to implement a metering and orchestration layer.
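If request logs are available, a first pass at this audit might look like the sketch below. The record fields (`dept`, `intent`, `model`, `tokens`) are hypothetical and do not correspond to any particular log export format.

```python
# Hypothetical log records; field names are illustrative only.
logs = [
    {"dept": "tax", "intent": "simple", "model": "large", "tokens": 1200},
    {"dept": "tax", "intent": "complex", "model": "large", "tokens": 9000},
    {"dept": "hr", "intent": "simple", "model": "small", "tokens": 300},
    {"dept": "hr", "intent": "simple", "model": "large", "tokens": 800},
]

def misrouted_tokens(records):
    """Total tokens per department spent on simple queries sent to the large model."""
    totals = {}
    for r in records:
        if r["intent"] == "simple" and r["model"] == "large":
            totals[r["dept"]] = totals.get(r["dept"], 0) + r["tokens"]
    return totals

audit = misrouted_tokens(logs)
print(audit)
```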

Control the variable costs now, or they will control your roadmap later. The organizations that succeed in 2026 will be those that treat inference compute as a finite, managed resource, not an infinite tap.

Frequently Asked Questions

How do we prevent the AI from giving dangerous advice?

Strict “grounding” is required. The AI system should be configured to answer only from uploaded manuals and verified logs. If the answer is not in the data, it must respond “I do not know” rather than guess.

Can AI really understand messy technician notes?

Yes. Modern Large Language Models (LLMs) are exceptionally good at deciphering typos, slang, and shorthand, provided they are fine-tuned on a sample of your specific industry data.

Will senior technicians refuse to use this?

Resistance is common. Success depends on UX. If the tool is easier to use than the old keyboard-based logging system (e.g., voice-based), adoption rates increase.

How do we handle different languages on the shop floor?

Artificial intelligence in manufacturing tools usually support real-time translation. A Spanish-speaking technician can dictate a note in Spanish, and it can be queried in English by a supervisor.

Is this legal from a labor union perspective?

You must consult with union representatives. Typically, if the tool is positioned as “safety and training support” rather than “performance monitoring,” it is accepted. Transparency is key.

What is the ROI timeline?

The ROI is often realized in the avoidance of a single major outage. If the system prevents one 4-hour shutdown of a critical line, it often pays for the annual implementation cost immediately.