AI in Industrial Automation: Automating Safety Compliance & HR Triage

Enterprise Failure Scenario: The “Invisible” Near-Miss

In a modern US manufacturing plant, a forklift operator takes a corner too fast and misses a maintenance technician by inches. The technician jumps back; the forklift driver slams the brakes. Both are shaken but physically unharmed.

In 90% of enterprises, this event, a “near-miss,” never enters the corporate record. The operator fears disciplinary action, and the technician doesn’t want to spend 45 minutes filling out a paper incident report or logging into a clunky legacy portal. They exchange a nod, get back to work, and the risk remains hidden.

Two weeks later, that same blind corner, combined with the same aggressive driving behavior, results in a fracture. Now, the enterprise faces a workers’ comp claim, an OSHA investigation, and a potential lawsuit.

The failure here is not a lack of sensing. The facility likely already had AI-powered cameras that “saw” the near-miss. The failure is the disconnect between the Operational Technology (OT) on the floor and the Information Technology (IT) used by HR and Risk Management. The sensor detected the danger, but the data died in a local server log, leaving leadership blind to the leading indicators of a major accident.

AI in Industrial Automation — Why This Fails at Scale

The promise of AI in industrial automation is often sold as “smarter machines.” For the CIO and HR leadership, however, the real value lies in “smarter workflows.” At scale, the approach fails because valid safety signals stay trapped in silos.

We have deployed sophisticated Computer Vision (CV) models that can identify a worker not wearing a hard hat or a vehicle entering a geofenced “red zone.” Yet in most AI-enabled smart factory setups, these systems are designed only for local alerts, such as flashing a light or sounding a buzzer. They rarely trigger the enterprise-level workflows needed for compliance and remediation.

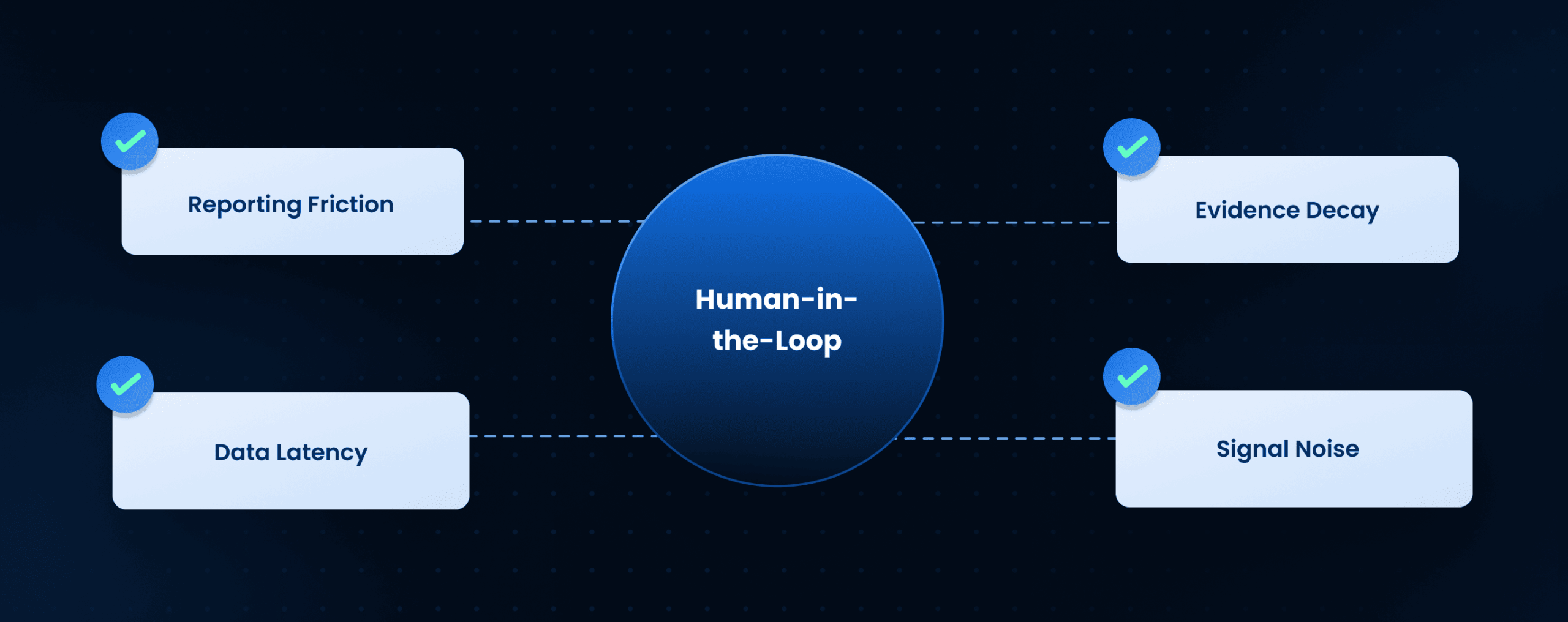

Why the “Human-in-the-Loop” Model Breaks Down:

- Reporting Friction: Relying on manual data entry for near-misses guarantees under-reporting. If the process is harder than the perceived value of reporting, workers will ignore it.

- Data Latency: Even if a floor manager reviews security footage and logs the incident, the data often takes days to reach the Central Safety Office. By then, the footage may be overwritten, and the “teachable moment” is lost.

- Evidence Decay: Industrial AI solutions often overwrite high-definition video buffers every 24–48 hours to save storage. If the HR ticket isn’t created instantly, the objective proof of the incident vanishes, turning the investigation into a “he-said-she-said” debate.

- Signal Noise: Without AI filtering, safety teams are overwhelmed by raw data. They need intelligent automation systems that distinguish a true safety violation from a harmless anomaly and pass only actionable tickets to human review.
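To make the “Signal Noise” point concrete, here is a minimal Python sketch of one common filtering step: collapsing a burst of repeated detections (the same label on the same camera within a short window) into a single candidate event, and dropping low-confidence hits. The `Detection` schema, the 30-second window, and the 0.5 confidence floor are hypothetical values chosen for illustration, not settings from any particular vendor.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One raw detection from a CV model (hypothetical schema)."""
    camera_id: str
    label: str          # e.g. "no_hard_hat", "red_zone_entry"
    confidence: float   # model score in [0, 1]
    ts: float           # seconds since epoch


def collapse_detections(detections, window_s=30.0, min_conf=0.5):
    """Merge bursts of the same label on the same camera into one event.

    Detections below min_conf are dropped as noise. A new event starts when
    the gap since the previous kept detection of the same (camera, label)
    pair exceeds window_s. Each event keeps its highest-confidence detection.
    """
    events = []
    last_seen = {}  # (camera_id, label) -> (index into events, last kept ts)
    for d in sorted(detections, key=lambda det: det.ts):
        if d.confidence < min_conf:
            continue
        key = (d.camera_id, d.label)
        prev = last_seen.get(key)
        if prev is not None and d.ts - prev[1] <= window_s:
            idx = prev[0]
            # Same incident: keep the strongest evidence for the ticket.
            if d.confidence > events[idx].confidence:
                events[idx] = d
            last_seen[key] = (idx, d.ts)
        else:
            # Gap too large (or first sighting): treat as a new incident.
            events.append(d)
            last_seen[key] = (len(events) - 1, d.ts)
    return events
```

Because a detector often fires on many consecutive frames of one incident, deduplicating before ticket creation keeps a single near-miss from becoming dozens of tickets. The window is a trade-off: too short and one incident splits into several events; too long and two separate incidents merge.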

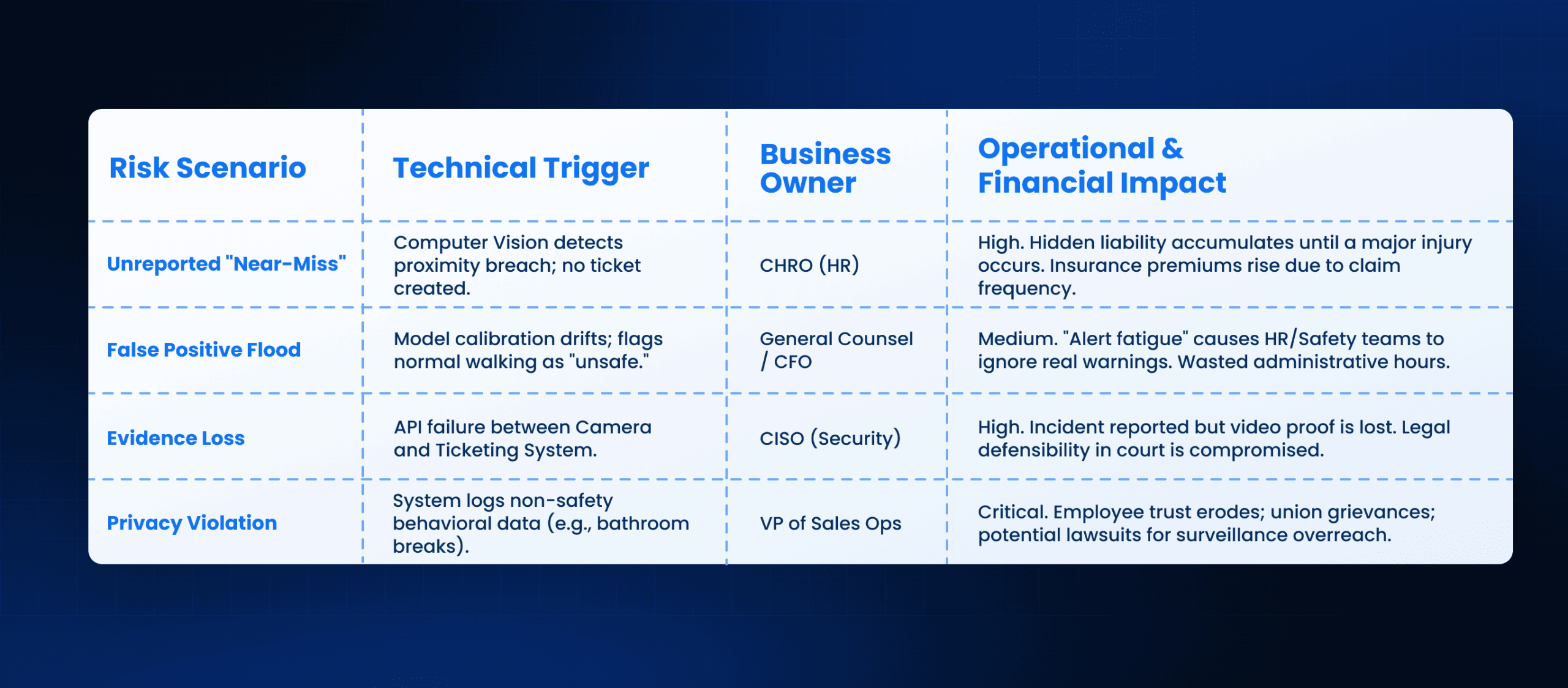

AI in Industrial Automation — Risk Map

Implementing AI in industrial automation for safety is not just an IT project; it is a risk management strategy. When cameras and sensors act as the “eyes” for HR and Compliance, you change the liability landscape of the organization.

The goal is to move from “reactive liability” (paying for the injury) to “proactive remediation” (fixing the behavior). However, connecting these systems introduces specific risks that must be mapped and owned.

Operational Risk Map for Automated Safety Triage

AI in Industrial Automation — Governance Gaps

Integrating AI in process automation with human safety data creates significant governance gaps that most enterprise policies, even in 2026, are not written to handle. The primary friction point is the boundary between “safety monitoring” and “employee surveillance.”

If the same camera that ensures a worker is wearing a safety vest is also used to track how many minutes they spend standing still, the system will be rejected by the workforce. AI-driven manufacturing automation must have strict governance walls.
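One way to build such a wall is to make purpose limitation an explicit, deny-by-default check in code rather than only a policy document. The sketch below assumes a pipeline where every sensor-derived signal carries an `event_type` string; the type names, the `PolicyViolation` exception, and the `admit_to_hr` gate are all hypothetical.

```python
# Hypothetical allowlist: only these safety event types may create HR tickets.
SAFETY_EVENT_TYPES = frozenset({
    "missing_ppe",
    "red_zone_entry",
    "vehicle_near_miss",
})

# Hypothetical productivity-style signals that must never reach HR.
SURVEILLANCE_SIGNALS = frozenset({
    "idle_minutes",
    "time_at_station",
    "break_duration",
})


class PolicyViolation(Exception):
    """Raised when a non-safety signal tries to enter the HR workflow."""


def admit_to_hr(event_type: str) -> bool:
    """Return True only if the event may create an HR ticket.

    Known surveillance signals raise, so the attempt is loud and auditable
    instead of silently dropped; unknown types are refused by default.
    """
    if event_type in SURVEILLANCE_SIGNALS:
        raise PolicyViolation(f"{event_type!r} is not a safety signal")
    return event_type in SAFETY_EVENT_TYPES
```

Failing closed on unknown types means a new camera feature cannot quietly start feeding HR until someone adds it to the allowlist, and the allowlist itself doubles as the published, auditable list of what triggers a ticket.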

Key Governance Blind Spots:

- Consent and Transparency: Employees must know exactly what triggers an automated HR ticket. The logic cannot be a “black box.” If machine learning in industrial automation is used to judge behavior, the criteria must be public and auditable.

- Bias in Detection: Computer vision models trained on narrow datasets may have higher error rates under different lighting conditions or across demographic groups. A model that consistently flags safe behavior as “unsafe” for a specific shift or demographic creates legal discrimination risk.

- Data Retention Policies: How long do you keep the video clip of a near-miss? If you keep it forever, it becomes a liability in future discovery. If you delete it too soon, you cannot defend against a claim. Retention periods must be hard-coded into the automated pipeline rather than left to manual cleanup.

- The “Right to Correct”: If an AI agent automatically places a “safety violation” letter in an employee’s HR file, there must be a seamless, automated workflow for that employee to contest the finding and have a human review the footage.
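The bias concern can be monitored with a simple audit once human reviewers confirm or reject AI-flagged events. The Python sketch below computes, per shift, the share of AI flags that reviewers rejected, and reports any shift whose rejection rate exceeds the overall rate by a chosen ratio. The `(shift, confirmed)` record format, the 1.5× ratio, and the 20-flag minimum are hypothetical; a real audit would also need adequate sample sizes and legal input on which groups to compare.

```python
from collections import defaultdict


def rejection_rates(reviews):
    """reviews: iterable of (shift, confirmed) pairs from human review.

    Returns {shift: fraction of that shift's AI flags that reviewers rejected}.
    """
    totals = defaultdict(int)
    rejected = defaultdict(int)
    for shift, confirmed in reviews:
        totals[shift] += 1
        if not confirmed:
            rejected[shift] += 1
    return {s: rejected[s] / totals[s] for s in totals}


def flag_disparities(reviews, ratio=1.5, min_flags=20):
    """Return shifts whose rejection rate exceeds `ratio` x the overall rate.

    Shifts with fewer than `min_flags` reviewed flags are skipped because
    their rates are too noisy to act on.
    """
    reviews = list(reviews)
    if not reviews:
        return []
    overall = sum(1 for _, ok in reviews if not ok) / len(reviews)
    counts = defaultdict(int)
    for shift, _ in reviews:
        counts[shift] += 1
    rates = rejection_rates(reviews)
    return sorted(
        s for s, r in rates.items()
        if counts[s] >= min_flags and r > ratio * overall
    )
```

A shift that keeps showing up in this report is a signal to re-examine the model (for example, lighting on that shift) before its flags are allowed to reach anyone’s HR file.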

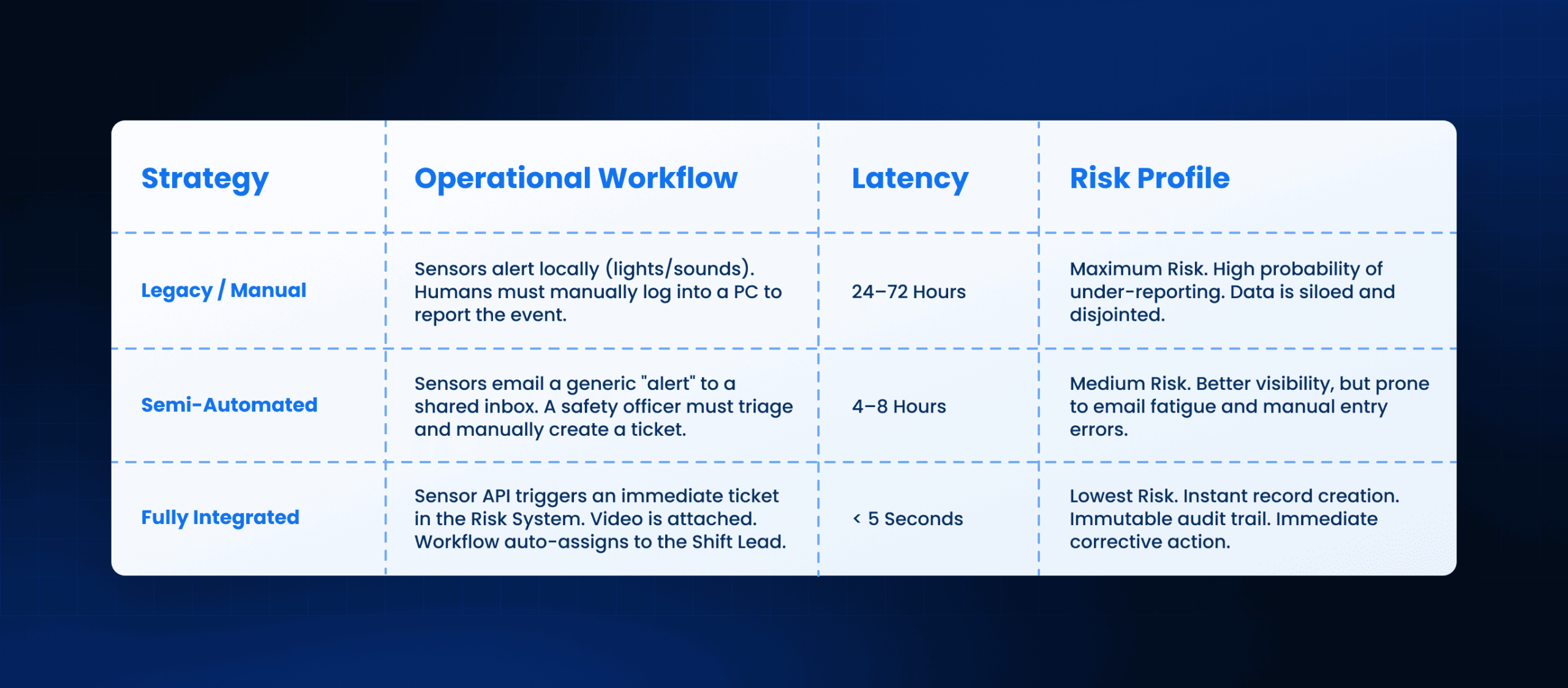

AI in Industrial Automation — Mitigation Choices

Enterprises have three choices when deciding how to handle the data generated by their shop-floor sensors. The choice determines whether AI in industrial automation becomes a strategic asset or a chaotic liability.

The return on investment (ROI), in the form of lower insurance premiums and avoided lawsuits, is realized in the move from manual to fully integrated compliance.

Mitigation Strategy Comparison

How Leena AI Designs for Safety and Control

At Leena AI, we do not build the industrial sensors; we build the intelligence that acts on their signals. We operationalize the safety data to ensure it results in action, not just storage.

Our Design Principles for Safety Triage:

- Instant Incident Creation: When a visual sensor confirms a safety breach, Leena AI automatically generates a ticket in the HR/EHS system, populating time, location, and severity fields without human intervention.

- Contextual Routing: We route the alert immediately to the correct floor manager and safety officer based on the specific location and machine type involved.

- Privacy-First Handling: Our workflow ensures that only safety-relevant clips are attached to the permanent record, discarding non-relevant footage to protect employee privacy.
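The first two principles can be pictured as a small mapping step between a confirmed detection and the HR/EHS ticket. The Python sketch below is illustrative only: the `ROUTING` table, the `SEVERITY` rule, the `Ticket` fields, and the fallback to a plant duty manager are hypothetical and do not describe Leena AI’s actual schema or API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical routing table: (zone, machine_type) -> responsible people.
ROUTING = {
    ("dock-B", "forklift"): {"manager": "floor-mgr-dock-b", "safety": "ehs-officer-1"},
    ("press-line-2", "hydraulic_press"): {"manager": "floor-mgr-press", "safety": "ehs-officer-2"},
}
# Hypothetical fallback so unmapped locations still reach a human.
DEFAULT_ROUTE = {"manager": "plant-duty-manager", "safety": "central-safety-office"}

# Hypothetical severity rule keyed on event type.
SEVERITY = {"vehicle_near_miss": "high", "red_zone_entry": "medium", "missing_ppe": "low"}


@dataclass
class Ticket:
    event_type: str
    zone: str
    machine_type: str
    occurred_at: datetime
    severity: str
    assignees: list = field(default_factory=list)


def build_ticket(event_type, zone, machine_type, occurred_at):
    """Fill time, location, severity, and assignees with no manual entry."""
    route = ROUTING.get((zone, machine_type), DEFAULT_ROUTE)
    return Ticket(
        event_type=event_type,
        zone=zone,
        machine_type=machine_type,
        occurred_at=occurred_at,
        # Assumption: unknown event types default to "medium" for human triage.
        severity=SEVERITY.get(event_type, "medium"),
        assignees=[route["manager"], route["safety"]],
    )
```

Keeping routing in a lookup table rather than in code means the EHS team can reassign areas without a deployment, and the explicit fallback guarantees that no alert is created without an owner.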

Conclusion

The true ROI of AI in industrial automation is not just in making machines run faster; it is in making the manufacturing environment safer and more compliant. By automating the triage of safety incidents, CIOs and HR leaders can close the dangerous gap between the physical reality of the factory floor and the administrative reality of the corporate office.

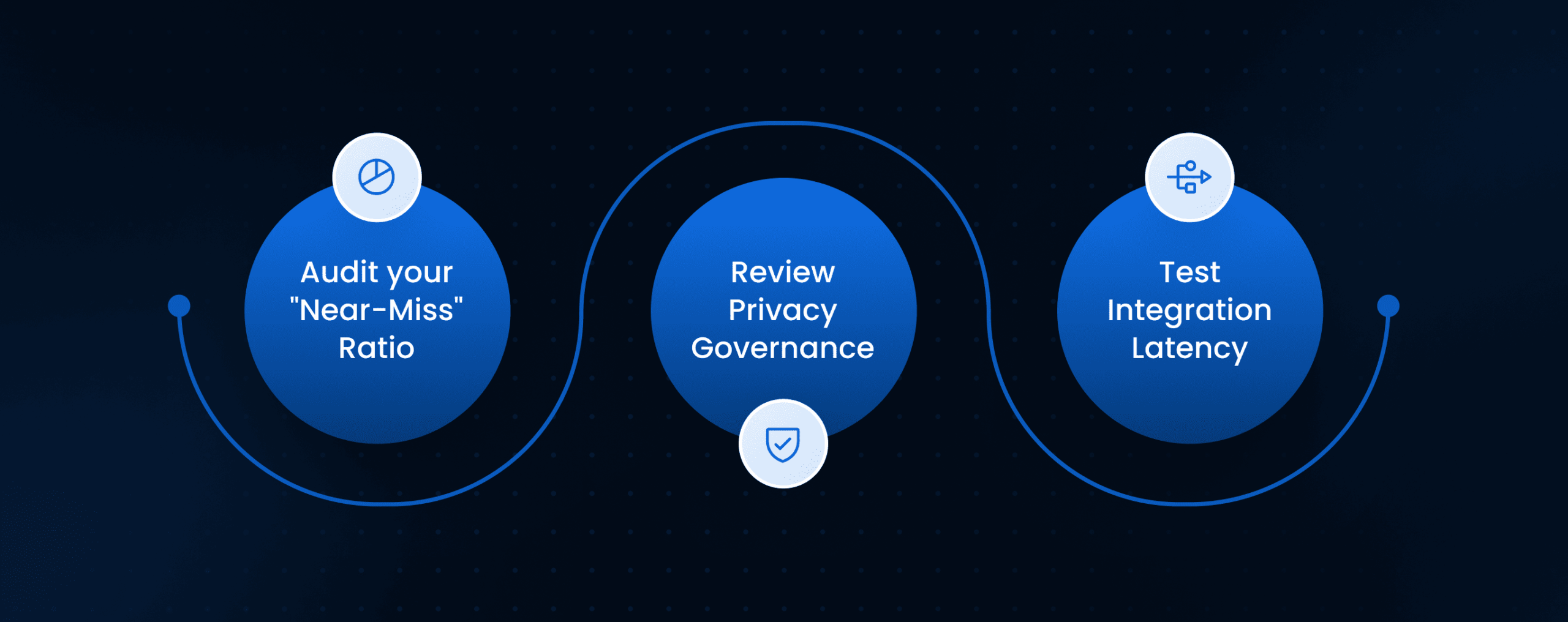

Decision Checklist for Monday Morning:

- Audit your “Near-Miss” Ratio: If you have zero near-misses reported but high injury rates, your reporting system is broken.

- Review Privacy Governance: Ensure your camera policies explicitly state how safety data is distinguished from surveillance data.

- Test Integration Latency: Measure how long it takes for a sensor detection to become an actionable HR ticket. If it’s more than an hour, automation is required.
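The first and third checks reduce to simple arithmetic over data most plants already have. A minimal Python sketch, assuming you can export injury and near-miss counts for a period, plus the detection and ticket-creation timestamps for each incident; the function names are hypothetical.

```python
from datetime import datetime, timedelta


def near_miss_ratio(near_misses: int, injuries: int):
    """Reported near-misses per recorded injury; None when there are no injuries."""
    if injuries == 0:
        return None
    return near_misses / injuries


def reporting_looks_broken(near_misses: int, injuries: int) -> bool:
    """The checklist's red flag: injuries occur, yet no near-misses are reported."""
    return injuries > 0 and near_misses == 0


def slow_integrations(pairs, limit=timedelta(hours=1)):
    """pairs: iterable of (detected_at, ticket_created_at) datetimes.

    Returns the detection-to-ticket latencies that exceed `limit`, longest first.
    """
    latencies = [created - detected for detected, created in pairs]
    return sorted((lat for lat in latencies if lat > limit), reverse=True)
```

An empty result from `slow_integrations` over a representative month is a reasonable pass condition for the one-hour test; any non-empty result points at the incidents whose paths need automating first.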

Stop relying on shaken employees to fill out paperwork. Let the intelligent system handle the compliance, so the humans can focus on the culture.

Frequently Asked Questions

How does AI in industrial automation reduce insurance premiums?

By capturing “leading indicators” (near-misses) automatically, companies can demonstrate proactive risk management to insurers. Evidence that you identify and fix risks before injuries occur can strengthen your case for lower liability premiums.

Is it legal to use AI to automatically log employee safety violations?

Generally, yes, provided the monitoring is disclosed and limited to safety and compliance in the workplace. However, using this data for productivity tracking without consent can violate labor laws or union contracts.

What happens if the AI triggers a false alarm?

The system should include a “Human-in-the-Loop” review step. The AI creates a draft incident report. A human manager reviews the attached clip and confirms or rejects the ticket before it becomes part of the permanent HR record.
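The review step described above behaves like a small state machine: an AI-generated report starts as a draft and can reach the permanent HR record only after a named human confirms it. The Python sketch below shows that gate; the `IncidentReport` class, the status names, and the plain list standing in for the HR record are hypothetical.

```python
from enum import Enum


class Status(Enum):
    DRAFT = "draft"          # created by the AI, not yet in the HR record
    CONFIRMED = "confirmed"  # a manager reviewed the clip and agreed
    REJECTED = "rejected"    # a manager reviewed the clip and disagreed


class IncidentReport:
    def __init__(self, report_id: str, clip_uri: str):
        self.report_id = report_id
        self.clip_uri = clip_uri
        self.status = Status.DRAFT
        self.reviewer = None

    def review(self, reviewer: str, confirmed: bool) -> None:
        """Record a human decision; each draft can be decided only once."""
        if self.status is not Status.DRAFT:
            raise ValueError(f"{self.report_id} already {self.status.value}")
        self.reviewer = reviewer
        self.status = Status.CONFIRMED if confirmed else Status.REJECTED


def commit_to_hr_record(report: IncidentReport, hr_record: list) -> bool:
    """Append to the permanent record only after human confirmation."""
    if report.status is Status.CONFIRMED:
        hr_record.append(report.report_id)
        return True
    return False
```

Recording the reviewer’s identity alongside the decision gives the audit trail needed later if the employee exercises a right to contest the finding.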

Can this integrate with legacy SCADA systems?

Yes. Modern AI-powered industrial automation layers can sit on top of legacy SCADA data streams. If the SCADA system can output a signal (via API, OPC-UA, or even email), an intelligent agent can ingest it and trigger a workflow.
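Whatever the transport, the integration pattern is usually the same: adapt each source into one normalized event shape before any workflow logic runs. The Python sketch below shows two such adapters, one for a JSON body from an API and one for an alarm email subject line. Both input formats and the `SafetySignal` fields are assumptions for illustration; an OPC-UA adapter would follow the same pattern using an OPC-UA client library and is omitted to keep the example dependency-free.

```python
import json
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class SafetySignal:
    """Normalized event shape shared by every adapter (hypothetical)."""
    source: str  # "api", "email", ...
    tag: str     # SCADA tag or alarm name
    value: str
    area: str


def from_api_payload(body: str) -> SafetySignal:
    """Assumes a JSON body like {"tag": ..., "value": ..., "area": ...}."""
    data = json.loads(body)
    return SafetySignal("api", data["tag"], str(data["value"]), data["area"])


# Assumed alarm-email subject format: "ALARM <tag>=<value> @ <area>"
_SUBJECT = re.compile(r"^ALARM\s+(?P<tag>\S+)=(?P<value>\S+)\s+@\s+(?P<area>\S+)$")


def from_alarm_email(subject: str) -> SafetySignal:
    """Parse an alarm email subject line into the normalized shape."""
    match = _SUBJECT.match(subject.strip())
    if match is None:
        raise ValueError(f"unrecognized alarm subject: {subject!r}")
    return SafetySignal("email", match["tag"], match["value"], match["area"])
```

Because downstream triage only ever sees `SafetySignal`, adding a new legacy source means writing one more adapter rather than touching the workflow.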

How do we prevent alert fatigue for safety managers?

Tune the confidence thresholds of the AI models used in process automation. We configure the system to escalate only incidents with a high probability (e.g., >90% confidence) of being a safety breach, and to log lower-confidence events for weekly batch review instead of sending instant alerts.
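The threshold policy in this answer is a two-way split. A minimal Python sketch, assuming each event arrives as an `(event_id, confidence)` pair with confidence in [0, 1]; the `triage` name and tuple shape are hypothetical, and the 0.90 default mirrors the example figure above.

```python
from typing import Iterable, List, Tuple

Event = Tuple[str, float]  # (event_id, model confidence in [0, 1])


def triage(events: Iterable[Event], threshold: float = 0.90) -> Tuple[List[str], List[str]]:
    """Split events into instant escalations and a weekly batch-review queue.

    Events scoring strictly above `threshold` are escalated immediately;
    everything else is logged for the weekly review rather than dropped.
    """
    instant, weekly = [], []
    for event_id, confidence in events:
        if not 0.0 <= confidence <= 1.0:
            raise ValueError(f"{event_id}: confidence {confidence} outside [0, 1]")
        (instant if confidence > threshold else weekly).append(event_id)
    return instant, weekly
```

Lowering `threshold` trades more instant alerts (and more fatigue) for fewer delayed escalations; the weekly queue is what lets the team check whether real breaches are sitting below the line.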

Does this require replacing our existing cameras?

Not necessarily. Many industrial AI solutions are software-defined and can process video feeds from existing IP cameras (RTSP streams), provided the network bandwidth and compute power are sufficient.
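“Sufficient bandwidth” can be sanity-checked with simple arithmetic before a pilot. The Python sketch below assumes a constant bitrate per RTSP stream and a single uplink from the camera network to the inference server; the 0.7 headroom factor and the function names are assumptions, so substitute your cameras’ actual encoder settings. Compute (inference capacity) is a separate constraint this sketch does not model.

```python
import math


def total_ingest_mbps(num_cameras: int, mbps_per_stream: float) -> float:
    """Aggregate bandwidth needed to pull every camera's stream."""
    return num_cameras * mbps_per_stream


def fits_on_link(num_cameras: int, mbps_per_stream: float,
                 link_mbps: float, headroom: float = 0.7) -> bool:
    """True if the streams use at most `headroom` of the link's capacity.

    Headroom leaves room for the plant's other traffic; 0.7 is an assumption.
    """
    return total_ingest_mbps(num_cameras, mbps_per_stream) <= headroom * link_mbps


def max_cameras(mbps_per_stream: float, link_mbps: float, headroom: float = 0.7) -> int:
    """Largest camera count that still fits under the headroom budget."""
    return math.floor(headroom * link_mbps / mbps_per_stream)
```

For example, 40 streams at an assumed 4 Mbps each need 160 Mbps, comfortably inside 70% of a 1 Gbps link, while 200 such streams (800 Mbps) would not fit.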