AI in Information Technology: Avoiding Intelligence Lock-In

It is Q4 of 2026. You are the CIO of a logistics giant. Two years ago, you went “all in” on your ITSM vendor’s native “AI Agent” platform. You celebrated the wins: AI-powered IT operations were resolving 40% of tickets autonomously, and your service desk costs were down.

Then, the renewal contract arrived. The price has tripled.

You decide to migrate. You call your VP of Infrastructure and ask, “How long to move our workflows to a new, more cost-effective platform?”

The silence is deafening. She tells you, “We can’t move. We don’t own the workflows anymore.”

This is the nightmare scenario of AI in Information Technology in 2026. In the past, migrating meant moving database rows and rewriting some scripts. Today, your core business logic (your approval routing, your risk assessments, your troubleshooting steps) doesn’t exist in code. It exists as proprietary “prompts” and “agent configurations” buried inside a vendor’s black box. You haven’t just locked in your data; you have locked in your company’s intelligence.

As we scale AI in Information Technology, we must address this critical governance failure: Vendor Intelligence Lock-In.

The Trap of Proprietary AI-Driven IT Automation

The promise of AI in Information Technology was freedom from drudgery. But for many enterprises, it has become a new form of digital handcuffs.

In 2024, when we wrote a script to restart a server, we owned that script. It was Python or PowerShell. We could run it anywhere. In 2026, when we configure a SaaS vendor’s agent to “autonomously manage server health,” we are training their model. We are giving them our operational nuances, our edge cases, and our decision trees.

This creates a dangerous dependency. The “brain” running your AI-driven IT automation belongs to the vendor. If you try to leave, you leave the brain behind. You are left with a raw database and a workforce that has forgotten how to do the manual tasks the agent handled for the last two years.

The Hidden Risks in AI for Incident Management

This lock-in is most acute in AI for incident management. Consider a scenario where your vendor’s AI agent handles a “Server Outage” alert. Over two years, your team has reinforced this agent via feedback loops (RLHF). The agent now “knows” that for the Dallas datacenter, it needs to check the cooling system first, but for the London datacenter, it checks the power grid.

That knowledge is not documented in a wiki. It is encoded in the weights and context window of the vendor’s proprietary model.

If that vendor suffers an outage, changes their privacy policy, or hikes their prices, you are stuck. You cannot “export” that reinforced learning to an open-source model or a competitor. You have essentially outsourced the cognitive load of your IT operations to a landlord who can evict you at any time.
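For contrast, here is a minimal sketch of the same Dallas/London triage knowledge kept as explicit data that you own, rather than as behavior learned inside a vendor’s model. The `TriageRule` type, the check names, and the fallback value are hypothetical illustrations. In a real deployment, such rules would more likely live in version control next to your other runbooks.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class TriageRule:
    """Hypothetical record of one piece of site-specific triage knowledge."""
    site: str
    alert_type: str
    first_check: str


# The knowledge from the example above, written down as plain data you own.
RULES = [
    TriageRule(site="Dallas", alert_type="Server Outage", first_check="cooling_system"),
    TriageRule(site="London", alert_type="Server Outage", first_check="power_grid"),
]


def first_check(site: str, alert_type: str, default: str = "generic_health_check") -> str:
    """Return the first diagnostic step for a site and alert, or a generic fallback."""
    for rule in RULES:
        if rule.site == site and rule.alert_type == alert_type:
            return rule.first_check
    return default


def export_rules() -> str:
    """Serialize every rule to JSON so any platform (or person) can read it."""
    return json.dumps([asdict(rule) for rule in RULES], indent=2)
```

Because the rules are plain data, `export_rules()` produces a file that a replacement platform or an on-call engineer can read directly. Nothing has to be reverse-engineered out of model weights.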

Strategies to Regain Control of Your Intelligent IT Support Systems

To survive the 2026 vendor landscape, CIOs must adopt a “Sovereign Intelligence” strategy: treat business logic as a strategic asset that stays portable, regardless of the underlying execution platform.

- Decoupling Reasoning from the System of Record

The most effective defense is architectural decoupling. Do not let the System of Record (your ITSM or HRIS) also be the System of Intelligence.

Your intelligent IT support systems should utilize an “Abstraction Layer.” In this model, the AI agent lives in a neutral layer that you control. It connects to ServiceNow, Jira, or Workday via APIs to fetch data and perform actions, but the reasoning happens on your terms.

If you decide to switch your ticketing system, you simply point your AI agent at the new API. The agent, along with all the business logic it has learned, stays with you.
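Here is a minimal Python sketch of the abstraction layer idea, assuming a hypothetical `TicketingBackend` interface. The in-memory backend stands in for real connectors. A production connector would wrap the ServiceNow or Jira REST APIs, which are not shown here. The routing rule inside `ResolutionAgent` is an illustrative placeholder for your own business logic.

```python
from typing import Dict, Protocol


class TicketingBackend(Protocol):
    """Hypothetical interface that every ITSM connector implements."""
    def get_ticket(self, ticket_id: str) -> dict: ...
    def update_ticket(self, ticket_id: str, fields: dict) -> None: ...


class InMemoryBackend:
    """Stand-in connector; a real one would call the ITSM vendor's API."""
    def __init__(self, name: str, tickets: Dict[str, dict]) -> None:
        self.name = name
        self.tickets = tickets

    def get_ticket(self, ticket_id: str) -> dict:
        return self.tickets[ticket_id]

    def update_ticket(self, ticket_id: str, fields: dict) -> None:
        self.tickets[ticket_id].update(fields)


class ResolutionAgent:
    """The reasoning lives here, in code you own, not inside the backend."""
    def __init__(self, backend: TicketingBackend) -> None:
        self.backend = backend

    def handle(self, ticket_id: str) -> str:
        ticket = self.backend.get_ticket(ticket_id)
        # Illustrative routing rule standing in for your real decision logic.
        if "password" in ticket["summary"].lower():
            action = "route_to_self_service_reset"
        else:
            action = "route_to_service_desk"
        # Write the decision back through the connector, whichever system it is.
        self.backend.update_ticket(ticket_id, {"action": action})
        return action
```

Under this design, switching ticketing systems means passing a different connector, e.g. `ResolutionAgent(InMemoryBackend("jira", {...}))`. The `handle` logic itself does not change.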

- Predictive IT Analytics and Data Sovereignty

Data is the fuel for predictive IT analytics. But where does that data live? If your vendor’s AI is generating insights like “predicting drive failure,” ensure you have raw access to the underlying event logs and the prediction logic.

Demand contracts that guarantee “Model Portability” or at least “Training Data Export.” If you spend years correcting an AI’s predictions, you are creating a valuable dataset. You must ensure that this dataset belongs to you, not the vendor’s product improvement team.
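One practical way to make that dataset yours is to capture every human correction of an AI prediction in a plain, vendor-neutral format such as JSON Lines. The sketch below assumes a hypothetical `PredictionFeedback` record. The field names are illustrative and do not follow any vendor’s export schema.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List


@dataclass
class PredictionFeedback:
    """Hypothetical record of one AI prediction and the human verdict on it."""
    asset_id: str
    model_output: str   # what the AI predicted, e.g. "drive_failure_within_7d"
    human_verdict: str  # what your engineers confirmed actually happened
    recorded_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def to_jsonl(records: List[PredictionFeedback]) -> str:
    """Serialize feedback as JSON Lines: one self-describing object per line."""
    return "\n".join(json.dumps(asdict(r), sort_keys=True) for r in records)


def from_jsonl(text: str) -> List[PredictionFeedback]:
    """Load feedback back from JSON Lines, skipping blank lines."""
    return [PredictionFeedback(**json.loads(line)) for line in text.splitlines() if line.strip()]
```

Output from `to_jsonl` can sit in storage you control. Later, it can serve to retrain or evaluate a replacement model without any cooperation from the original vendor.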

- Designing AI Chatbots for IT Helpdesk with Portability in Mind

When deploying AI chatbots for IT helpdesk, avoid using the vendor’s proprietary “drag-and-drop” bot builders for complex logic. These are the ultimate lock-in traps.

Instead, implement “Function Calling” architectures. The chatbot should act as a router, identifying intent (e.g., “Reset Password”) and then calling an external, standardized API that you host to execute the task. This ensures that your core operational capabilities are not trapped inside a chat interface that you cannot control.
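Here is a minimal sketch of the router pattern. It assumes the chatbot’s language model emits a tool call as JSON of the form `{"name": ..., "arguments": {...}}`. The exact shape varies by model provider, and some providers return `arguments` as a JSON-encoded string that needs a second parse. The tool names and handlers are hypothetical. In practice, each handler would call an internal API you host.

```python
import json
from typing import Callable, Dict

# Registry of operations you host; tool names here are hypothetical examples.
TOOLS: Dict[str, Callable[..., dict]] = {}


def tool(name: str):
    """Decorator that registers a handler under the name the model will call."""
    def register(fn: Callable[..., dict]) -> Callable[..., dict]:
        TOOLS[name] = fn
        return fn
    return register


@tool("reset_password")
def reset_password(user_id: str) -> dict:
    # Placeholder: a real handler would call your own identity-management API.
    return {"status": "reset_link_sent", "user_id": user_id}


@tool("unlock_account")
def unlock_account(user_id: str) -> dict:
    # Placeholder: a real handler would call your own directory service.
    return {"status": "unlocked", "user_id": user_id}


def dispatch(tool_call_json: str) -> dict:
    """Execute a model-produced tool call against handlers you own."""
    call = json.loads(tool_call_json)
    handler = TOOLS.get(call.get("name"))
    if handler is None:
        # Unrecognized intent: hand off to a person rather than guessing.
        return {"status": "escalate_to_human", "reason": f"unknown tool {call.get('name')!r}"}
    return handler(**call.get("arguments", {}))
```

In this design, unknown intents fall back to a human escalation instead of failing silently. The registry of operations lives in your own code base, so it survives a change of chatbot vendor.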

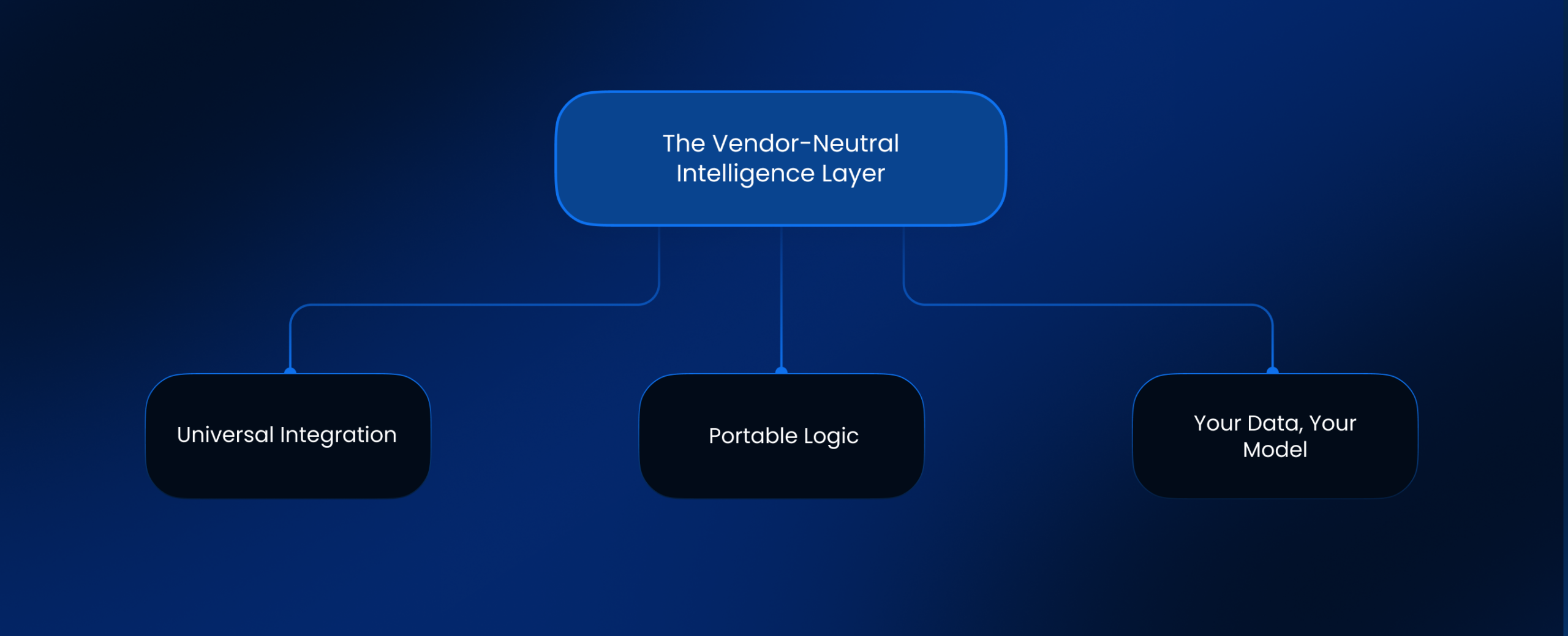

Leena AI: The Vendor-Neutral Intelligence Layer

At Leena AI, we foresaw the risk of “Intelligence Lock-In” years ago. We believe that an enterprise’s intelligence should belong to the enterprise, not the software vendor.

We architect Leena AI as a strictly vendor-neutral orchestration layer. Our proprietary large language model, WorkLM, sits above your stack.

- Universal Integration: We connect to ServiceNow, Jira, Freshservice, and proprietary internal tools equally well.

- Portable Logic: The workflows and resolutions our agents learn are stored in your instance of Leena AI, not deeply embedded in the backend system. If you migrate from one ITSM to another, Leena AI simply adapts the connectors, keeping your automation and employee experience 100% consistent.

- Your Data, Your Model: We train our models on your specific enterprise data, but we isolate that training. You are not just improving a generic product; you are building a proprietary asset for your own IT organization.

We provide the freedom to choose best-of-breed backend systems without losing the “brain” that runs them.

Frequently Asked Questions

What is the difference between traditional vendor lock-in and intelligence lock-in?

Traditional lock-in is about data gravity: it is hard to move terabytes of records. Intelligence lock-in is about process gravity: it is hard to move the “decision-making” capability that an AI has learned over time, which leaves you operationally crippled if you switch vendors.

How does AI work in IT without creating lock-in?

It works by using an “Overlay Architecture.” You keep your data in the System of Record (like a database), but you keep the AI reasoning in a separate, agnostic layer. The AI reads from the database but doesn’t live inside it.

What are the best applications of artificial intelligence for avoiding lock-in?

The best applications are those that use open standards (like OpenTelemetry for observability) or agnostic orchestration platforms. Tools that allow you to “bring your own model” (BYOM) are safer than those that force you to use a proprietary, black-box model.

Can we use AI-powered IT operations tools from major vendors safely?

Yes, but use them for commodity tasks (like summarizing a ticket), not for core business logic (like deciding whether to approve a high-risk access request). Keep your “secret sauce” logic in a layer you control.

How do AI chatbots for IT helpdesk contribute to lock-in?

If you build complex conversation flows inside a specific vendor’s chatbot tool, you cannot export those flows. If you leave that vendor, you have to rebuild every conversation from scratch.

What role does predictive IT analytics play in migration?

If you own your historical data, you can retrain a new predictive model relatively quickly. If your vendor aggregates your data and only gives you the final “score,” you lose that predictive capability instantly upon contract termination.

Is open-source AI a solution to this problem?

It can be. Running open-weight models (like Llama or Mixtral) on your own infrastructure keeps the model weights under your control. However, this comes with higher infrastructure management costs compared to SaaS.