Introduction

When enterprises adopt “AI Colleagues,” autonomous agents that carry out back-office workflows, they are not merely augmenting human work; they are entrusting business processes to software that acts. That shift raises the stakes dramatically. Without strong governance, transparency, and auditability, these agents can make costly errors, be misused, or expose the business to regulatory risk.

CIOs, CTOs, and enterprise technology leaders must ask: How do we trust AI that acts on our behalf? And more importantly: How do we monitor, enforce, and transparently explain every step of its decisions?

At Leena AI, we built with those questions in mind from the very beginning. Below, we explain why transparency and governance are non-negotiable for agentic AI in the enterprise, and how Leena AI’s trust architecture delivers them in practice.

Why Governance & Transparency Must Be Top Priorities

Trust isn’t optional; it’s the foundation

Once AI moves from advising to executing tasks, users, auditors, and executives demand accountability. If you can’t trace why the AI made a decision, confidence breaks down. Gartner’s work on AI ethics emphasizes that governance platforms providing transparency are core to enabling accountability and user trust. (Gartner)

In the boardroom, the Harvard Business Review has described AI as “testing the limits of corporate governance,” urging boards to push for oversight, clarity of decision-making processes, and accountability frameworks. (Harvard Business Review)

Autonomy magnifies risk

With autonomous agents, every misstep can cascade. A seemingly benign workflow error can spawn data leaks, bias propagation, compliance violations, or operational damage. Gartner’s AI TRiSM (Trust, Risk, Security, and Management) framework underscores that runtime enforcement, policy checks, and continuous inspection are essential in governance. (Gartner)

As organizations move from narrow AI to fully agentic systems, the risks become sharply more complex, and traditional oversight models don’t scale. (Harvard Business Review)

Regulatory, legal, and board pressures are rising

Regulatory bodies are increasingly demanding explainability, audit trails, and accountability in AI systems. Gartner predicts that by 2027, AI governance will be a requirement of all sovereign AI laws and regulations worldwide. (CDO Magazine)

Boards and audit committees are already being called on to oversee AI at a strategic level. Many public companies are expanding the remit of existing risk and audit committees to cover AI oversight. (corpgov.law.harvard.edu)

A recent article argues that boards must adopt a “noses in, fingers out” approach — staying apprised of AI risks and policies without micromanaging operations. (corpgov.law.harvard.edu)

Embedding governance is more effective than bolting it on

A common failure in AI adoption is treating governance as an afterthought. Gartner contends that governance must be embedded throughout the AI lifecycle, not applied retroactively, if it is to be effective. (Atlan)

In agentic systems, where decisions are made in many small steps, you must govern each step, not just coarse checkpoints. Without that fine-grained control and observability, blind spots emerge.

Leena AI’s Trust Architecture: How We Deliver Governance by Design

At Leena AI, transparency and governance are not optional modules — they are foundational. Below is how we build trust into every AI Colleague we deploy.

1. Real-time fact-checking LLM that enforces policies

Before any AI Colleague acts, an internal fact-checking layer evaluates whether the proposed action adheres to your operational rules, expressed as agent operating procedures (AOPs).

This is not post-mortem logging; it’s proactive enforcement. If a step contradicts policy, it’s blocked before execution. This ensures your policies are honored 100% of the time, even as prompts or instructions change.
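To illustrate what “blocked before execution” means in control-flow terms, here is a minimal sketch. The post describes this layer as an LLM that fact-checks each step; the sketch swaps in plain Python predicate functions so the gating logic is easy to follow. Every proposed action is checked against every rule, and the executor runs only if no rule reports a violation. `ProposedAction`, `check_action`, `execute_if_compliant`, and the refund-limit rule are hypothetical names for illustration, not Leena AI’s API.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

# Hypothetical sketch: deterministic predicates stand in for the LLM-based fact-checker.

@dataclass
class ProposedAction:
    """A step an agent intends to take (hypothetical shape)."""
    name: str
    params: dict = field(default_factory=dict)

# A rule inspects a proposed action and returns a violation message, or None if compliant.
Rule = Callable[[ProposedAction], Optional[str]]

class PolicyViolation(Exception):
    """Raised when a proposed action breaks at least one rule; nothing is executed."""

def check_action(action: ProposedAction, rules: List[Rule]) -> List[str]:
    """Evaluate every rule and collect all violation messages."""
    return [msg for rule in rules if (msg := rule(action)) is not None]

def execute_if_compliant(action: ProposedAction, rules: List[Rule],
                         executor: Callable[[ProposedAction], object]) -> object:
    """Pre-execution gate: run the executor only if the action passes every rule."""
    violations = check_action(action, rules)
    if violations:
        raise PolicyViolation(f"blocked {action.name}: " + "; ".join(violations))
    return executor(action)

def max_refund(limit: float) -> Rule:
    """Example rule (hypothetical): refunds above `limit` are not allowed."""
    def rule(action: ProposedAction) -> Optional[str]:
        if action.name == "issue_refund" and action.params.get("amount", 0) > limit:
            return f"refund exceeds limit of {limit}"
        return None
    return rule

rules = [max_refund(500.0)]
executed: List[ProposedAction] = []

# Compliant: the executor runs and records the action.
execute_if_compliant(ProposedAction("issue_refund", {"amount": 120.0}), rules, executed.append)

# Non-compliant: the gate raises before the executor is ever called.
try:
    execute_if_compliant(ProposedAction("issue_refund", {"amount": 900.0}), rules, executed.append)
except PolicyViolation as err:
    print(err)  # blocked issue_refund: refund exceeds limit of 500.0
```

The design choice that matters here is ordering: the check happens in the same call path as the action and before it, so a failing rule leaves no side effect behind to clean up.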

2. Built-in guardrails that confine behavior to the prescribed lane

Each AI Colleague ships with structural guardrails. These are constraints encoded at the logic level, preventing the agent from wandering outside explicit operational boundaries.

Because guardrails are baked in rather than optional, agents cannot stray into unsafe or unintended territory unless someone deliberately expands the boundary.

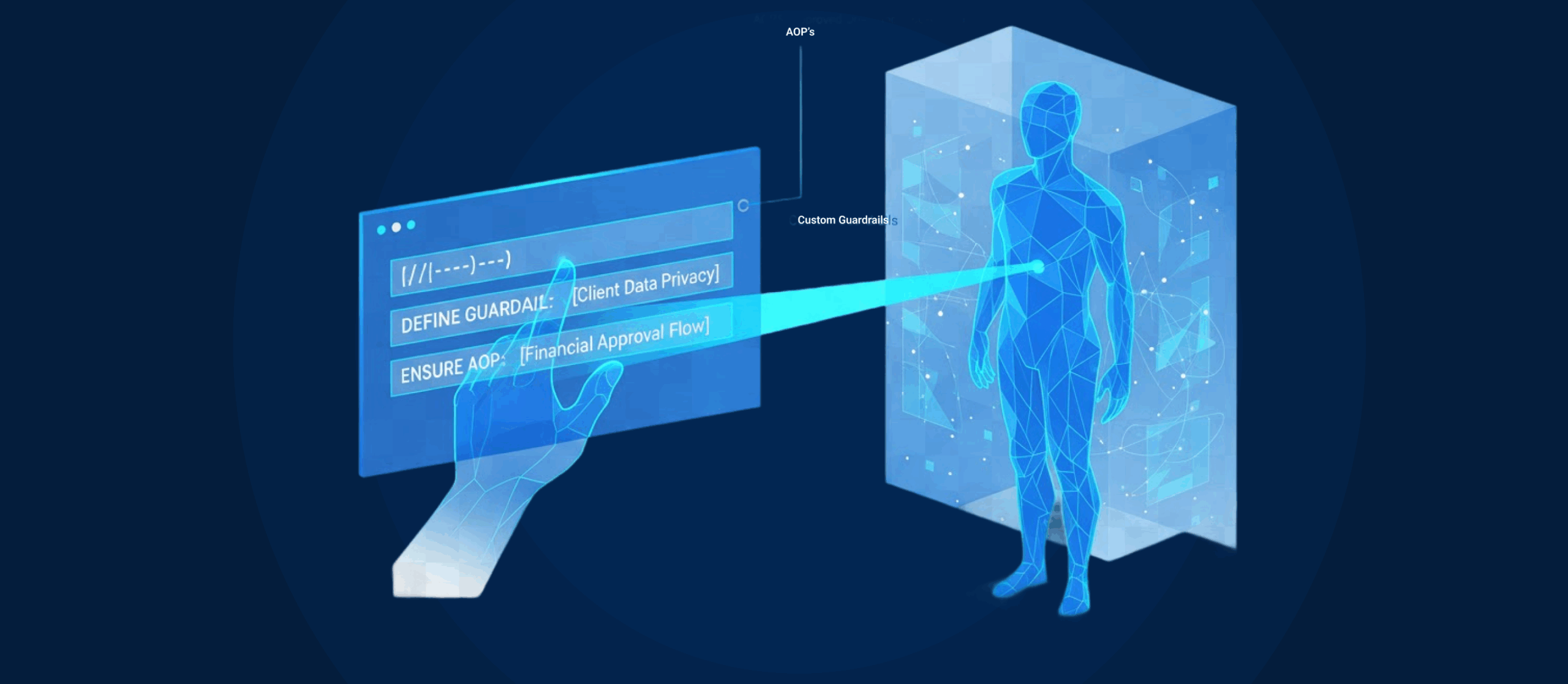

3. Customer-defined guardrails and policy incorporation

Leena AI doesn’t rely solely on our built-in constraints. We give enterprises the power to incorporate their own guardrails into each AI Colleague via prompt-based or policy-defined logic.

If your legal team wants to disallow certain actions, or HR requires extra checks for particular workflows, you can define those rules. The AI Colleague then operates under both the default and the custom guardrails, staying compliant with your organization’s policies.

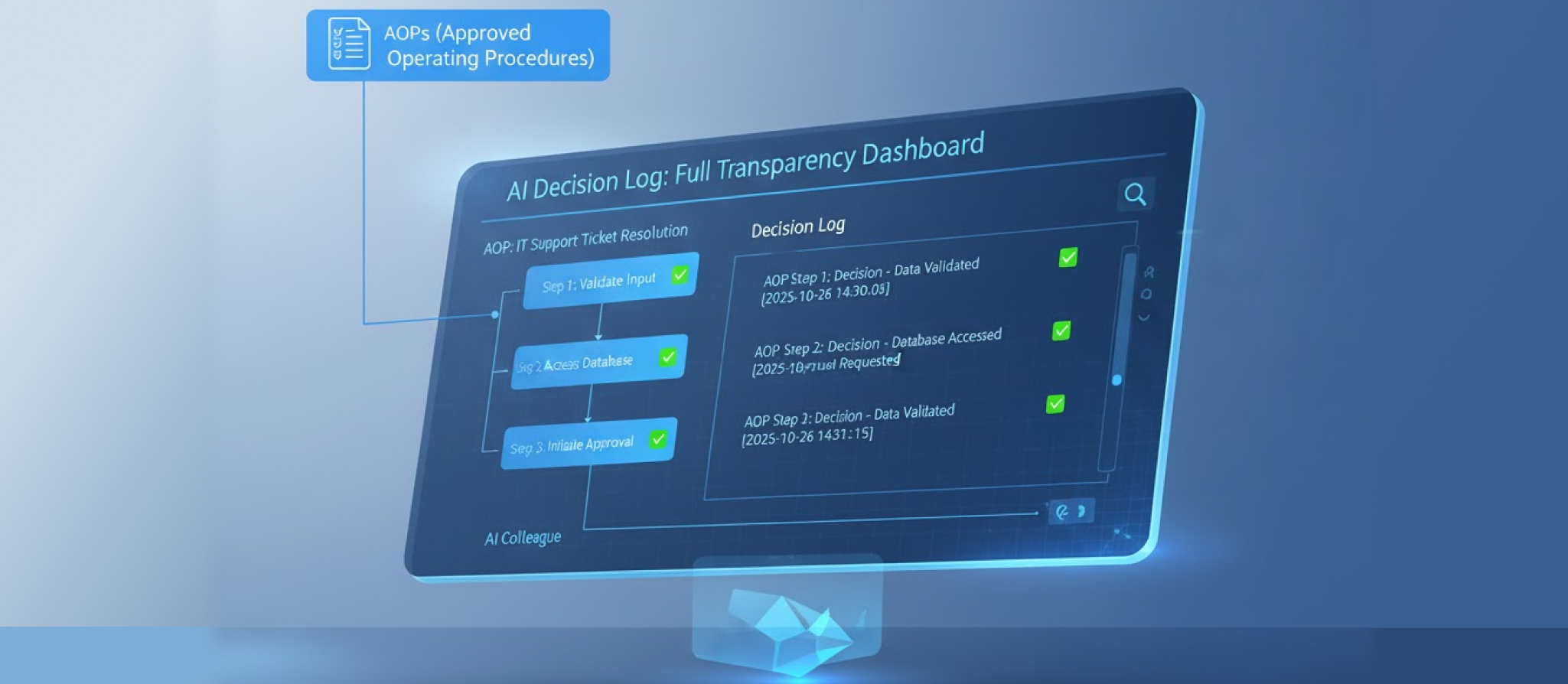
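To make the combination of default and custom guardrails concrete, here is a minimal sketch, assuming each guardrail is a Python function that returns a violation message or `None`. The built-in guardrail confines the agent to a fixed set of actions; the customer guardrail adds an HR-style approval requirement. `allowed_actions`, `require_field`, `effective_guardrails`, `evaluate`, and the action names are all hypothetical, chosen only for illustration.

```python
from typing import Callable, List, Optional, Set

# Hypothetical guardrail shape (not Leena AI's API):
# returns a violation message for (action, params), or None if allowed.
Guardrail = Callable[[str, dict], Optional[str]]

def allowed_actions(allowed: Set[str]) -> Guardrail:
    """Built-in-style guardrail: confine the agent to a fixed set of actions."""
    def check(action: str, params: dict) -> Optional[str]:
        if action in allowed:
            return None
        return f"'{action}' is outside the agent's lane"
    return check

def require_field(action_name: str, field_name: str) -> Guardrail:
    """Customer-style guardrail: e.g. HR requires an approval reference for one action."""
    def check(action: str, params: dict) -> Optional[str]:
        if action == action_name and field_name not in params:
            return f"'{action}' requires '{field_name}'"
        return None
    return check

def effective_guardrails(defaults: List[Guardrail], custom: List[Guardrail]) -> List[Guardrail]:
    """Combine both rule sets: the agent must satisfy every rule in each."""
    return [*defaults, *custom]

def evaluate(action: str, params: dict, guardrails: List[Guardrail]) -> List[str]:
    """Return all violation messages; an empty list means the action may proceed."""
    return [msg for g in guardrails if (msg := g(action, params)) is not None]

defaults = [allowed_actions({"update_address", "change_salary"})]
custom = [require_field("change_salary", "approval_id")]
active = effective_guardrails(defaults, custom)

print(evaluate("update_address", {"city": "Pune"}, active))  # []
print(evaluate("change_salary", {"amount": 1000}, active))    # ["'change_salary' requires 'approval_id'"]
print(evaluate("delete_records", {}, active))                 # ["'delete_records' is outside the agent's lane"]
```

Because combining the two sets means every rule must pass, adding customer rules can only narrow what the agent may do; it never loosens a built-in boundary.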

4. Full transparency dashboard: a complete decision log

Every reasoning step, decision checkpoint, and alternative path considered by an AI Colleague is logged to a transparency dashboard. After the fact, you can trace how and why the agent arrived at its final action.

This traceability delivers explainability, auditability, and accountability. For compliance, audit, regulatory, or executive review, you don’t have to reverse-engineer or guess — you see the stepwise record.
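A stepwise decision record of this kind can be sketched as an append-only log keyed by task. The sketch below is a minimal in-memory version, assuming four entry kinds that mirror the categories named above (reasoning, checkpoint, alternative, action) and JSON Lines as an export format for auditors. `DecisionLog`, `TraceEntry`, and the sample entries are hypothetical; a production log would also need durable, access-controlled storage, which is not shown.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import List

@dataclass(frozen=True)
class TraceEntry:
    """One immutable step in an agent's decision trace (hypothetical shape)."""
    task_id: str
    seq: int
    kind: str
    detail: str
    timestamp: str

class DecisionLog:
    """Append-only, in-memory decision log: entries can be added and read, never edited."""
    KINDS = {"reasoning", "checkpoint", "alternative", "action"}

    def __init__(self) -> None:
        self._entries: List[TraceEntry] = []

    def record(self, task_id: str, kind: str, detail: str) -> TraceEntry:
        if kind not in self.KINDS:
            raise ValueError(f"unknown entry kind: {kind}")
        # Per-task sequence number (0-based) preserves step order for later reconstruction.
        seq = sum(1 for e in self._entries if e.task_id == task_id)
        entry = TraceEntry(task_id, seq, kind, detail, datetime.now(timezone.utc).isoformat())
        self._entries.append(entry)
        return entry

    def trace(self, task_id: str) -> List[TraceEntry]:
        """Reconstruct the ordered decision flow for one task."""
        return sorted((e for e in self._entries if e.task_id == task_id), key=lambda e: e.seq)

    def export_jsonl(self, task_id: str) -> str:
        """Serialize one task's trace as JSON Lines for audit or review."""
        return "\n".join(json.dumps(asdict(e)) for e in self.trace(task_id))

log = DecisionLog()
log.record("T-1", "reasoning", "Employee asked to update bank details")
log.record("T-1", "alternative", "Considered routing to HR; not required by policy")
log.record("T-1", "checkpoint", "Policy check passed: bank-change rule")
log.record("T-1", "action", "Submitted bank-detail update")

for entry in log.trace("T-1"):
    print(entry.seq, entry.kind, entry.detail)
```

Recording alternatives alongside the final action is what lets a reviewer see not only what the agent did but what it considered and rejected.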

Why this combination matters

- Enforcement + observability: Policy breaches are prevented by design, not merely flagged after the fact.

- Auditability at micro granularity: You can reconstruct decision flows, not just final outcomes.

- Enterprise sovereignty: Customers retain control over rules and constraints, not just black-box defaults.

- Scalability of compliance: Because governance is built into every agent instance, new rules or regulations can be applied without a wholesale overhaul.

Why CIOs, CTOs & Analysts Should View Leena AI as Best-in-Class

Governance as a differentiator

Many AI vendors offer transparency dashboards or governance modules as add-ons. Leena AI makes governance the architecture. You don’t just bolt on audit capabilities: every agent is born governed.

Continuous compliance, not periodic checks

Traditional compliance regimes often rely on post-hoc audits or spot checks. With Leena AI’s real-time checks and logs, compliance becomes continuous. Violations are blocked before they occur, and you always have trace logs ready.

Lower friction for regulation adaptation

When new regulations arrive (for example, in AI safety, data protection, or algorithmic accountability), Leena AI’s rule framework lets you inject or update guardrails and policies at scale across your agent fleet. You don’t rebuild systems — you update logic.
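One way to read “update logic, not systems” is a shared policy registry that every agent consults at decision time. The minimal sketch below assumes exactly that: agents hold a reference to one registry rather than private copies of the rules, so adding a rule once changes the behavior of the whole fleet. `PolicyRegistry`, `Agent`, the version counter, and the EU-only export rule are hypothetical illustrations, not Leena AI’s API; persistence, access control, and change auditing are out of scope here.

```python
from typing import Callable, Dict, List, Optional

# Hypothetical rule shape: returns a violation message, or None if the action is allowed.
Rule = Callable[[str, dict], Optional[str]]

class PolicyRegistry:
    """Hypothetical central, versioned store of rules shared by every agent in a fleet."""

    def __init__(self) -> None:
        self._rules: Dict[str, Rule] = {}
        self.version = 0

    def put(self, name: str, rule: Rule) -> None:
        """Add or replace a named rule; every agent sees it on its next check."""
        self._rules[name] = rule
        self.version += 1

    def remove(self, name: str) -> None:
        """Drop a named rule, bumping the version only if it existed."""
        if self._rules.pop(name, None) is not None:
            self.version += 1

    def violations(self, action: str, params: dict) -> List[str]:
        return [msg for rule in self._rules.values() if (msg := rule(action, params)) is not None]

class Agent:
    """Holds a reference to the shared registry, not a private copy of the rules."""

    def __init__(self, name: str, registry: PolicyRegistry) -> None:
        self.name = name
        self.registry = registry

    def check(self, action: str, params: dict) -> List[str]:
        return self.registry.violations(action, params)

def eu_only_export(action: str, params: dict) -> Optional[str]:
    """Example new rule (hypothetical): data exports must target the EU region."""
    if action == "export_data" and params.get("region") != "EU":
        return "data export must stay in the EU"
    return None

registry = PolicyRegistry()
fleet = [Agent(f"agent-{i}", registry) for i in range(3)]
request = ("export_data", {"region": "US"})

before = [agent.check(*request) for agent in fleet]  # no rules yet: every list is empty
registry.put("eu_only_export", eu_only_export)        # one update, no agent rebuilt
after = [agent.check(*request) for agent in fleet]   # every agent now reports the violation
```

The version counter gives auditors a simple way to tie each decision to the rule set in force at the time, if agents record it alongside their trace.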

High explainability for oversight and trust

Executives, auditors, or regulators asking “why did the system do that?” get an answer: a full decision trace. You don’t have to expose model internals; you expose the reasoning path that led to each action.

Alignment with evolving governance norms

By embedding trust, transparency, and enforcement at the core, Leena AI anticipates where the industry is going, meeting emerging governance expectations before they become mandatory. Gartner’s forecast that AI governance will be a requirement of all sovereign AI laws and regulations by 2027 suggests that governance-ready systems have a competitive edge. (CDO Magazine)

Conclusion

As enterprises move from experimenting with generative AI to deploying autonomous AI Colleagues at scale, transparency and governance will determine who thrives and who falters. The future of agentic AI will belong to those who can prove control, accountability, and explainability, not just promise them. At Leena AI, we’ve embedded those principles into the foundation of every AI Colleague we build. With fact-checking layers, policy guardrails, enterprise-level customization, and full decision traceability, Leena AI gives organizations the confidence to scale automation responsibly. True intelligence in the enterprise isn’t just about what AI can do; it’s about how clearly you can see, trust, and govern what it does.