Introduction

You’ve seen the power of AI firsthand. Perhaps you’ve experimented with large language models (LLMs) to summarize support tickets or draft internal communications. While these pilot projects show incredible promise, they also reveal a critical challenge: how do you take a brilliant AI proof of concept and turn it into a secure, reliable, and cost-effective system that serves your entire organization? The answer is a disciplined approach called LLMops (large language model operations). This framework is the bridge from exciting AI experiments to enterprise-grade AI solutions that deliver real business value.

This guide will walk you through everything you need to know about LLMops. We will cover what it is, why it is essential for your organization, and how to implement it successfully. By understanding LLMops, you can confidently lead your company’s AI transformation, ensuring your investments are scalable, secure, and smart.

Understanding the Core of LLMops

So, what exactly is this operational framework? Think of it as the complete lifecycle management for large language models. It is a set of practices, tools, and principles designed to deploy, monitor, and maintain LLMs in a production environment efficiently and reliably. It is not just about the technology itself; it is about creating a streamlined process that ensures your AI models perform consistently and continue to improve over time.

What Is LLMOps?

At its heart, LLMops is the operational backbone for your company’s AI initiatives. Imagine you are building a factory. You would not just focus on designing a great product; you would also need an assembly line, quality control, supply chain management, and a maintenance schedule. Similarly, LLMops provides the assembly line for AI. It covers everything from preparing the data and adapting the model to your business (for example, by fine-tuning it) to deploying it for employees and then continuously monitoring its performance to catch issues. This structured approach ensures that your powerful language models are not just a science project but a dependable business tool.

The Strategic Importance of LLMOps

For any large organization, moving from a single AI model to an army of specialized AI agents requires a robust operational strategy. Without it, you risk inconsistent performance, spiraling costs, and major security vulnerabilities. A solid LLMops foundation is often what separates a successful AI program from a failed one.

Why LLMOps Matters for Organization-Scale LLM Deployment

When you deploy an AI model to handle thousands of employee IT or HR tickets daily, the stakes are high. LLMops matters because it directly addresses the biggest challenges of using AI at scale. First, it improves reliability. A proper LLMops framework includes rigorous testing and monitoring, so you can measure how accurate and helpful your AI’s responses are and catch regressions before they reach employees. Second, it manages costs. You can significantly reduce the expensive computing resources your models require by optimizing how they run, for example by routing simple requests to smaller, cheaper models and tracking inference costs per request. Finally, it helps keep your AI systems secure and compliant with data privacy regulations, which is a top priority for any enterprise leader.
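Cost control starts with knowing what each request costs. The Python sketch below is a minimal illustration, not a production tool. The model names (`small-model`, `large-model`) and the per-1,000-token prices are hypothetical placeholders, so substitute your provider’s actual rates. The code adds up the cost of each request, keeps a per-model total, and flags when a daily budget is exceeded.

```python
from dataclasses import dataclass, field

# Hypothetical prices in USD per 1,000 tokens; replace with your provider's rates.
PRICES_PER_1K = {
    "small-model": {"input": 0.0005, "output": 0.0015},
    "large-model": {"input": 0.01, "output": 0.03},
}


@dataclass
class CostTracker:
    daily_budget_usd: float
    spent_usd: float = 0.0
    by_model: dict = field(default_factory=dict)  # model name -> total spend in USD

    def record(self, model: str, input_tokens: int, output_tokens: int) -> float:
        """Add one request's cost to the running totals and return it in USD."""
        price = PRICES_PER_1K[model]
        cost = (input_tokens * price["input"] + output_tokens * price["output"]) / 1000
        self.spent_usd += cost
        self.by_model[model] = self.by_model.get(model, 0.0) + cost
        return cost

    def over_budget(self) -> bool:
        """True once today's spend exceeds the configured daily budget."""
        return self.spent_usd > self.daily_budget_usd


tracker = CostTracker(daily_budget_usd=50.0)
cost = tracker.record("large-model", input_tokens=1200, output_tokens=300)
print(f"request cost: ${cost:.3f}, over budget: {tracker.over_budget()}")
```

In practice, you would pass `record()` the token counts your model provider reports for each call, and you would raise an alert when `over_budget()` returns `True`. The per-model breakdown shows where routing more traffic to a smaller model would save the most.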

Building Your LLMops Framework

A successful LLMops strategy is built on several key pillars. Each component plays a vital role in creating a seamless pipeline from model development to real-world application. Understanding these parts helps you see how they work together to create a powerful and efficient system.

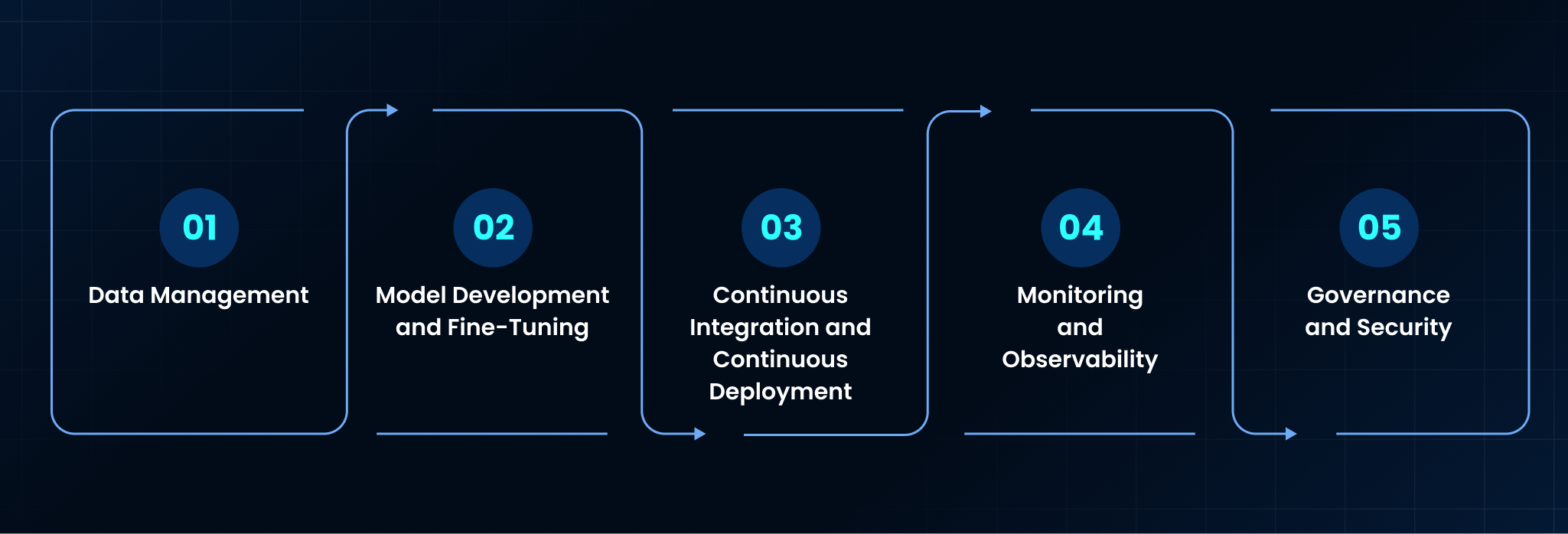

Key Components of an LLMOps Framework

An effective LLMops framework typically includes a few core components. Data Management is the starting point, involving the collection, cleaning, and preparation of your company’s unique data to fine-tune and evaluate the models. Next is Model Development and Fine-Tuning, where general-purpose LLMs are customized to understand your specific business context, like your internal IT policies.
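To make the data-preparation step concrete, here is a minimal Python sketch that turns resolved support tickets into training examples. It assumes each ticket is a dictionary with hypothetical `question` and `resolution` fields. It writes a chat-style JSONL layout similar to what several fine-tuning services accept, so check your provider’s required schema before using it. The cleaning is deliberately simple: whitespace is normalized, incomplete tickets are dropped, and duplicate questions are skipped.

```python
import json


def prepare_examples(tickets):
    """Turn resolved tickets into deduplicated chat-style training examples."""
    seen = set()
    examples = []
    for ticket in tickets:
        # Collapse runs of whitespace so trivially different copies compare equal.
        question = " ".join(ticket.get("question", "").split())
        answer = " ".join(ticket.get("resolution", "").split())
        if not question or not answer:
            continue  # drop tickets with no question or no recorded resolution
        key = question.lower()
        if key in seen:
            continue  # skip duplicate questions (case-insensitive exact match)
        seen.add(key)
        examples.append({"messages": [
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]})
    return examples


def to_jsonl(examples):
    """Serialize one JSON object per line, a common layout for fine-tuning files."""
    return "\n".join(json.dumps(example) for example in examples)


tickets = [
    {"question": "How do I reset  my VPN?", "resolution": "Use the self-service portal."},
    {"question": "how do I reset my VPN?", "resolution": "Portal."},  # duplicate
    {"question": "Printer jammed", "resolution": ""},                  # unresolved
]
print(to_jsonl(prepare_examples(tickets)))
```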

Following that, Continuous Integration and Continuous Deployment (CI/CD) automates the process of testing and releasing new model versions without disrupting service. Monitoring and Observability is another critical piece; it involves tracking the model’s performance, accuracy, and costs in real time. Lastly, Governance and Security provides the rules and safeguards to ensure data privacy and ethical AI use. Together, these components create a cycle of continuous improvement.
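One common way to automate testing in a CI/CD pipeline is an evaluation gate. Before a new model or prompt version is released, it is scored against a fixed test set and compared with the version currently in production. The sketch below assumes each model is a plain Python function that takes a prompt and returns text. It uses a deliberately crude scoring rule: the answer must contain an expected keyword. Real pipelines typically use richer checks, but the gating logic is the same.

```python
from typing import Callable, List, Tuple

# Each evaluation case pairs a prompt with a keyword a good answer should contain.
EvalCase = Tuple[str, str]
Model = Callable[[str], str]


def pass_rate(model: Model, cases: List[EvalCase]) -> float:
    """Fraction of cases whose answer contains the expected keyword."""
    passed = sum(1 for prompt, keyword in cases if keyword.lower() in model(prompt).lower())
    return passed / len(cases)


def release_gate(baseline: Model, candidate: Model, cases: List[EvalCase],
                 tolerance: float = 0.02) -> bool:
    """Allow release only if the candidate scores no worse than baseline minus tolerance."""
    return pass_rate(candidate, cases) >= pass_rate(baseline, cases) - tolerance


# Stand-in models for illustration; in practice these would call your deployed versions.
def current_model(prompt: str) -> str:
    return "Use the self-service portal." if "password" in prompt else "Restart the VPN client."


def new_model(prompt: str) -> str:
    return "Please contact IT."


cases = [("How do I reset my password?", "portal"), ("My VPN is down", "restart")]
print("release approved:", release_gate(current_model, new_model, cases))
```

A CI job would call `release_gate()` and fail the build when it returns `False`, so a regression on the test set blocks the release instead of reaching employees.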

How LLMops Differs from Traditional MLOps

You might be familiar with MLOps, which is the operational framework for traditional machine learning models. While they share similar goals, LLMops has some unique challenges that require a different approach. The sheer size and complexity of large language models introduce new hurdles that traditional methods were not designed to handle.

LLMOps vs. Traditional MLOps

The key difference lies in the nature of the models. Traditional machine learning models are often smaller and trained on structured data, like spreadsheets. In contrast, LLMs are massive and work with unstructured text. This makes them far more expensive to run and harder to evaluate, because there is rarely a single correct answer to check a response against. Consequently, LLMops places a much greater emphasis on curating the text data used for fine-tuning and evaluation. It also focuses heavily on prompt engineering, which is the practice of crafting the instructions, context, and examples given to the model so that it produces the best answers. Furthermore, the ethical considerations and potential for generating harmful content are much more pronounced with LLMs, making robust governance a central part of the LLMops framework.
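Prompts can change a model’s behavior as much as its weights do, so many teams version them like code. This minimal sketch keeps every version of a named prompt template, which lets you trace any response back to the exact prompt that produced it. The `PromptRegistry` class and the `ticket_summary` prompt are hypothetical examples, not part of any specific library.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class PromptVersion:
    name: str
    version: int
    template: str  # uses {placeholders} filled in with str.format


class PromptRegistry:
    """Keeps every version of each named prompt so responses can be traced to their prompt."""

    def __init__(self) -> None:
        self._history: Dict[str, List[PromptVersion]] = {}

    def register(self, name: str, template: str) -> PromptVersion:
        """Store a new version of a prompt; versions are numbered from 1."""
        history = self._history.setdefault(name, [])
        prompt = PromptVersion(name, len(history) + 1, template)
        history.append(prompt)
        return prompt

    def get(self, name: str, version: Optional[int] = None) -> PromptVersion:
        """Return a specific version, or the latest one when version is None."""
        history = self._history[name]
        if version is None:
            return history[-1]
        if not 1 <= version <= len(history):
            raise KeyError(f"{name} has no version {version}")
        return history[version - 1]

    def render(self, name: str, version: Optional[int] = None, **values: str) -> str:
        """Fill the chosen version's placeholders with the given values."""
        return self.get(name, version).template.format(**values)


registry = PromptRegistry()
registry.register("ticket_summary", "Summarize this ticket: {ticket}")
registry.register("ticket_summary", "Summarize this IT ticket in one sentence: {ticket}")
print(registry.render("ticket_summary", ticket="VPN drops every hour"))
```

Logging the prompt name and version alongside each response helps you tell whether a change in answer quality came from a prompt edit or a model update.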

Implementing LLMops Successfully

Adopting an LLMops framework does not have to be an overwhelming task. By following a clear set of best practices, you can build a strong foundation for your AI initiatives. This structured implementation ensures that you avoid common pitfalls and maximize your return on investment from the start.

Best Practices for LLMOps Implementation

To get the most out of your LLMops strategy, first define the business problem you want to solve. Do not deploy AI for its own sake; align it with a specific goal, like reducing ticket resolution times. Second, build a cross-functional team that includes data scientists, software engineers, and business stakeholders, because collaboration is key. Third, invest in automation: automating the testing and deployment pipelines saves time and reduces human error. Finally, establish a continuous feedback loop. Use input from your employees and performance data to constantly refine and improve your models.
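A feedback loop can start very simply. This sketch makes two illustrative assumptions: employees rate each answer as helpful or not, and every rating is tagged with the model version that produced the answer. The code computes a satisfaction rate per version and flags versions that fall below a target once they have enough ratings to be meaningful. The 80% target and the 20-rating minimum are arbitrary placeholders.

```python
from collections import defaultdict


def satisfaction_by_version(feedback):
    """Map each model version to (share of helpful ratings, number of ratings)."""
    counts = defaultdict(lambda: [0, 0])  # version -> [helpful ratings, total ratings]
    for version, helpful in feedback:
        counts[version][1] += 1
        if helpful:
            counts[version][0] += 1
    return {version: (helpful / total, total) for version, (helpful, total) in counts.items()}


def needs_review(stats, target=0.8, min_ratings=20):
    """Versions with enough ratings whose satisfaction rate is below the target."""
    return sorted(v for v, (rate, total) in stats.items() if total >= min_ratings and rate < target)


# Illustrative ratings: (model version, was the answer helpful?)
feedback = ([("v1", True)] * 18 + [("v1", False)] * 2
            + [("v2", True)] * 10 + [("v2", False)] * 10
            + [("v3", False)] * 5)
stats = satisfaction_by_version(feedback)
print(stats, needs_review(stats))
```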

Finding the Right LLMops Solution

The market for AI tools is growing rapidly. Choosing the right platforms and tools is crucial for building an effective LLMops pipeline. The ideal solution should support the entire model lifecycle, from development to production monitoring.

Tools and Platforms Supporting LLMOps

A variety of tools are available to support your LLMops journey. Cloud providers like AWS, Google Cloud, and Microsoft Azure offer comprehensive suites for managing AI models, such as Amazon SageMaker and Amazon Bedrock, Google Cloud’s Vertex AI, and Azure Machine Learning. There are also specialized platforms like Databricks and NVIDIA AI Enterprise that provide powerful tools for training and deploying LLMs. For monitoring, tools like Arize AI and WhyLabs help you track model performance and detect issues. The key is to choose a set of tools that integrate well with your existing technology stack and meet your specific needs for scale, security, and governance.

Ensuring Responsible AI with LLMops

With great power comes great responsibility. As you deploy powerful AI models across your organization, establishing strong governance and ethical guidelines is essential. A well-designed LLMops framework provides the necessary controls to ensure your AI is used responsibly.

Governance, Ethics, and Reliability in LLMOps

A core function of LLMops is to embed governance directly into the AI lifecycle. This includes implementing access controls to protect sensitive data and creating audit trails to track model behavior. It also involves setting up automated checks to detect and filter out biased or inappropriate responses. By building these safeguards into your operational process, you can ensure that your AI systems are not only effective but also fair, transparent, and aligned with your company’s values. This proactive approach to governance builds trust with both employees and customers.
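Audit trails and data protection can be combined at the logging step. The sketch below redacts email addresses and phone numbers before writing a prompt and response to an audit record. It also records whether the user’s role was allowed to use the assistant. The regular expressions are intentionally simple illustrations and will miss many forms of personally identifiable information; production systems usually rely on dedicated PII-detection tooling. The role names are hypothetical.

```python
import re
from datetime import datetime, timezone

# Deliberately simple patterns for illustration; they will miss many PII formats.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace each match of a PII pattern with a placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def audit_record(user_id: str, role: str, prompt: str, response: str,
                 allowed_roles: set) -> dict:
    """Build one audit-log entry with PII redacted and the access decision recorded."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "role": role,
        "access_granted": role in allowed_roles,
        "prompt": redact(prompt),
        "response": redact(response),
    }


entry = audit_record(
    user_id="u-1042",
    role="hr_assistant_user",  # hypothetical role name
    prompt="Update my phone to +1 (555) 123-4567 and email jane.doe@example.com",
    response="Done. Confirmation sent to jane.doe@example.com.",
    allowed_roles={"hr_assistant_user"},
)
print(entry)
```

Redacting before the record is written means the audit log itself never stores the raw values, which keeps the audit trail from becoming a new source of data leakage.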

Leena AI: Your Partner in Enterprise LLMops

Building a robust LLMops practice from scratch can be a complex and resource-intensive endeavor. That is where a specialized partner can make all the difference. At Leena AI, we have built our entire platform around the principles of enterprise-grade LLMops to deliver secure, reliable, and intelligent AI agents.

How Leena AI Enhances LLMOps Capabilities

Leena AI’s Agentic AI platform provides a complete, end-to-end solution designed specifically for enterprise needs. We handle the complexities of LLMops so you do not have to. Our platform comes with pre-built integrations for your core business systems, from Workday for HR to ServiceNow for IT. We manage the entire lifecycle of our AI agents, from fine-tuning them on your company’s knowledge base to continuously monitoring their performance and security.

For example, when an employee asks our AI agent to reset a password, we ensure the request is handled securely and efficiently by integrating with your identity management tools. This means you get the benefits of a powerful, custom-trained AI solution without the overhead of building and maintaining the underlying LLMops infrastructure. With Leena AI, you can deploy intelligent automation faster and with greater confidence, allowing your teams to focus on strategic initiatives instead of routine support tasks.

Frequently Asked Questions about LLMops

1. What is the main goal of LLMops?

The primary goal of LLMops is to operationalize large language models in a reliable, scalable, and cost-effective way. It aims to bridge the gap between AI model development and its successful deployment in a live business environment.

2. How does LLMops help control AI costs?

LLMops helps control costs by optimizing the entire AI lifecycle. This includes using resources more efficiently during model training, choosing the right-sized models for specific tasks, and continuously monitoring inference costs to prevent budget overruns.

3. Is LLMops only for large tech companies?

No, any organization looking to deploy LLMs in a production environment can benefit from LLMops. While large companies have led the way, the principles of LLMops are scalable and can be adapted to businesses of all sizes looking to use AI responsibly.

4. What are the security risks that LLMops helps mitigate?

LLMops addresses several security risks. It prevents data leakage by ensuring personally identifiable information (PII) is properly handled, protects models from adversarial attacks such as prompt injection, and blocks unauthorized use by establishing clear access controls. It provides the framework for secure AI operations.

5. How can I get started with LLMops in my organization?

A great way to start is by identifying a high-value, low-risk use case, such as an internal IT helpdesk bot. Begin with a small-scale pilot project, establish clear performance metrics, and build your LLMops practices incrementally as you learn and scale.

6. Does LLMops replace the need for data scientists?

Not at all. LLMops is a collaborative practice that empowers data scientists by automating many of the manual, operational tasks. This frees them up to focus on more strategic work, like developing new models and improving existing ones.

7. How does Leena AI simplify the LLMops process for enterprises?

Leena AI provides a managed platform that handles the complexities of LLMops for you. We offer a pre-built, secure, and scalable environment for deploying AI agents, which means you can get the benefits of advanced AI without needing a dedicated in-house LLMops team.