SLM vs LLM: Sweating Legacy Assets for Enterprise AI

The “GPU Waitlist” Failure Scenario

It is Q1 2026. Your CEO demands that every internal helpdesk—IT, HR, and Finance—be powered by “Agentic AI” immediately. The mandate is to reduce ticket volume by 40% before the fiscal year ends.

You have the budget for the software, but you hit a hard wall on infrastructure. The lead time for enterprise-grade Graphics Processing Units (GPUs) is still 18 weeks. Your cloud provider has raised the price of dedicated GPU instances by 35%, citing supply constraints. You are effectively grounded.

Meanwhile, you have three racks of “end-of-life” servers in your New Jersey data center. These are standard dual-socket CPU machines purchased in 2021, fully depreciated, and scheduled for the shredder.

The failure here is not a lack of compute; it is a lack of asset strategy. By assuming that all language models require GPUs, you have left massive computational capacity on the floor. The operational fix is to shift your strategy from “One Giant Model” to “Many Small Models,” allowing you to run sophisticated AI on the hardware you already own.

SLM vs LLM — The Hardware Reality

To understand the SLM vs. LLM trade-off, start with the “parameters”: the internal numeric weights a model learns during training and uses to generate every response.

- Large Language Models (LLM): Typically 70 billion to 1 trillion parameters. They require massive memory bandwidth and parallel processing (GPUs) to run.

- Small Language Models (SLM): Typically 2 billion to 8 billion parameters. They are small enough to run on standard Central Processing Units (CPUs) and consumer-grade hardware.

Operational Component: Quantization & Containerization

You do not need an NVIDIA H100 to reset a password. You can run a highly optimized 7-billion-parameter small language model on a standard Intel Xeon processor if you “quantize” it. Quantization reduces the precision of the model's weights from 16-bit floating point to 4-bit integers, which cuts the memory needed to hold them to roughly a quarter.
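The arithmetic behind that claim can be sketched in a few lines. This is a rough estimate of weight storage only; it ignores the runtime's working memory and the small per-block scaling metadata that real 4-bit formats carry, so actual files come out somewhat larger.

```python
def weight_footprint_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights alone, in GB (10^9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


PARAMS_7B = 7e9  # the 7-billion-parameter model from the text

fp16_gb = weight_footprint_gb(PARAMS_7B, 16)  # 2 bytes per weight
int4_gb = weight_footprint_gb(PARAMS_7B, 4)   # half a byte per weight

print(f"16-bit weights: {fp16_gb:.1f} GB")
print(f" 4-bit weights: {int4_gb:.1f} GB")
```

At 16 bits the weights alone need about 14 GB; at 4 bits they need about 3.5 GB, which fits comfortably in the RAM of an ordinary server.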

Steps to Sweat Assets:

- Audit CPU Inventory: Identify servers with modern instruction sets (AVX-512) and at least 64GB of RAM.

- Quantize Models: Convert open-source SLMs (like Llama-3-8B or Mistral) into GGUF format (a file format optimized for CPU inference).

- Deploy via Docker: Containerize the model with a lightweight runtime (like llama.cpp or vLLM) that runs on CPU threads, not CUDA cores.
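The audit step above could be scripted along these lines. This is a minimal sketch that assumes Linux hosts, where `/proc/cpuinfo` lists the `avx512f` flag and `/proc/meminfo` reports `MemTotal` in kB. The function names and the 5% tolerance are illustrative choices, not part of any standard tool.

```python
from pathlib import Path

MIN_RAM_GB = 64        # threshold from the audit step
RAM_TOLERANCE = 0.95   # assumption: MemTotal reads slightly below installed RAM


def has_avx512(cpuinfo_text: str) -> bool:
    """True if any 'flags' line lists the AVX-512 Foundation flag."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            _, _, value = line.partition(":")
            if "avx512f" in value.split():
                return True
    return False


def total_ram_gb(meminfo_text: str) -> float:
    """Parse MemTotal (in kB) and convert it to GiB."""
    for line in meminfo_text.splitlines():
        if line.startswith("MemTotal:"):
            return int(line.split()[1]) / (1024 ** 2)
    raise ValueError("MemTotal not found")


def is_candidate(cpuinfo_text: str, meminfo_text: str,
                 min_ram_gb: float = MIN_RAM_GB) -> bool:
    """A server qualifies if it has AVX-512 and roughly min_ram_gb of RAM."""
    enough_ram = total_ram_gb(meminfo_text) >= min_ram_gb * RAM_TOLERANCE
    return has_avx512(cpuinfo_text) and enough_ram


def audit_local_host() -> bool:
    """Check the machine this script runs on (Linux only)."""
    return is_candidate(Path("/proc/cpuinfo").read_text(),
                        Path("/proc/meminfo").read_text())
```

Running `audit_local_host()` on each server through your existing configuration management tool would yield a yes/no list of machines worth piloting.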

Dependencies:

- High-speed RAM (DDR4/DDR5) is more critical than CPU clock speed for inference.

- Container orchestration (Kubernetes) to manage the fleet of CPU pods.

Failure Points:

- Memory Bandwidth Bottleneck: If the CPU cannot feed data to the cores fast enough, the token generation speed drops below 10 tokens per second, making the chat feel “laggy” to the user.
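A common back-of-envelope model helps show why bandwidth dominates. It assumes each generated token requires one full read of the weights from RAM, so bandwidth divided by model size gives a ceiling on tokens per second. The sketch below applies it; the 100 GB/s bandwidth figure is a made-up illustrative value, not a measurement of any particular server, and real throughput will fall below the ceiling.

```python
LAGGY_THRESHOLD_TOK_S = 10.0  # the "laggy" threshold named in the text


def decode_ceiling_tok_s(bandwidth_gb_s: float, model_size_gb: float) -> float:
    """Upper bound on single-user tokens/s if every token reads all weights once."""
    if bandwidth_gb_s <= 0 or model_size_gb <= 0:
        raise ValueError("bandwidth and model size must be positive")
    return bandwidth_gb_s / model_size_gb


ASSUMED_BANDWIDTH_GB_S = 100.0  # hypothetical; measure your own hardware

# Sizes: 7e9 weights at 2 bytes each is ~14 GB; at 0.5 bytes each, ~3.5 GB.
for label, size_gb in (("7B at 16-bit", 14.0), ("7B at 4-bit", 3.5)):
    ceiling = decode_ceiling_tok_s(ASSUMED_BANDWIDTH_GB_S, size_gb)
    verdict = "laggy" if ceiling < LAGGY_THRESHOLD_TOK_S else "acceptable"
    print(f"{label}: at most ~{ceiling:.1f} tokens/s ({verdict})")
```

Under these assumed numbers the 16-bit model tops out near 7 tokens per second while the 4-bit version has headroom near 28, which is why quantization and RAM speed matter more than clock speed.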

SLM vs LLM — The Routing Logic

The core of this strategy is an intelligent “Gateway Router.” You cannot replace GPT-4 with a small model for everything. You need to route simple tasks to the cheap CPU servers and only send complex tasks to the expensive GPU cloud.

Think of the LLM as the “PhD Researcher” and the SLM as the “Smart Intern.” The intern is nearly free and fast; the researcher is expensive and slow.
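A gateway rule of this kind might look like the sketch below. It assumes an upstream classifier has already tagged each ticket with an intent. The intent names, tier labels, and word-count cutoff are all hypothetical placeholders to be replaced with your own taxonomy.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    SLM_CPU = "local-slm"   # the "Smart Intern" on legacy CPU servers
    LLM_GPU = "cloud-llm"   # the "PhD Researcher" on GPU cloud


# Hypothetical intents considered simple enough for the small model.
SIMPLE_INTENTS = {"password_reset", "policy_lookup", "leave_balance"}
MAX_SLM_WORDS = 400  # assumed cutoff; long tickets go to the large model


@dataclass(frozen=True)
class Ticket:
    intent: str
    text: str


def route(ticket: Ticket) -> Tier:
    """Send short, known-simple tickets to the CPU tier; everything else to GPU."""
    is_simple = ticket.intent in SIMPLE_INTENTS
    is_short = len(ticket.text.split()) <= MAX_SLM_WORDS
    return Tier.SLM_CPU if is_simple and is_short else Tier.LLM_GPU
```

Defaulting unknown intents to the large model is a deliberate choice here: a misrouted simple ticket costs a little money, while a misrouted complex ticket costs a bad answer.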

Routing Logic for Infrastructure Optimization

SLM vs LLM — Operational Risks & Governance

Deploying SLMs on legacy hardware introduces specific governance risks. Unlike a centralized cloud API, these models live inside your network perimeter, often on hardware that was not designed for high-availability AI.

Risk 1: The “Accuracy Drop” from Quantization

When you compress a model to run on a CPU, it gets “dumber.” A 4-bit quantized model might struggle with nuance.

- Mitigation: Do not use SLMs for decision-making (e.g., “Should we approve this loan?”). Use them for retrieval and formatting (e.g., “Find the loan policy”).

Risk 2: Version Control Sprawl

With one LLM API, everyone is on the same version. With 500 SLM containers, you might have HR running version 1.0 and Finance running version 3.2.

- Mitigation: Implement a strict “Model Registry” where SHA-256 hashes of the model weights are verified before a container is allowed to spin up.
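The hash check in that mitigation can be sketched with Python's standard `hashlib`. The registry here is just an in-memory dictionary mapping a model identifier to its expected digest; a real registry would live in a database or artifact store, and the identifier format is an assumption.

```python
import hashlib
from typing import Dict


def sha256_of_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so multi-GB weight files never load fully."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_weights(path: str, registry: Dict[str, str], model_id: str) -> bool:
    """Allow startup only if the model is registered and its hash matches."""
    expected = registry.get(model_id)
    if expected is None:
        return False  # unregistered models are refused by default
    return sha256_of_file(path) == expected.lower()
```

A container entrypoint could call `verify_weights` before launching the inference runtime and exit with a non-zero status on failure, so the orchestrator never marks an unverified pod as ready.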

Risk 3: Thermal Throttling

Running inference 24/7 on 5-year-old servers pushes thermal limits.

- Mitigation: Cap the CPU utilization at 80% in the container configuration to prevent server shutdowns.
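One way to express that cap is Docker's `--cpus` flag, which limits how much CPU time a container may use. The helper below is a small sketch that computes the value for a given core count; the image name is hypothetical. Note that this limits CPU time rather than temperature directly, so it should sit alongside hardware thermal monitoring, not replace it.

```python
from typing import List


def cpu_limit(total_cores: int, cap_fraction: float = 0.80) -> float:
    """Number of CPUs' worth of time the container may consume."""
    if total_cores < 1:
        raise ValueError("total_cores must be at least 1")
    if not 0 < cap_fraction <= 1:
        raise ValueError("cap_fraction must be in (0, 1]")
    return round(total_cores * cap_fraction, 2)


def docker_run_args(image: str, total_cores: int,
                    cap_fraction: float = 0.80) -> List[str]:
    """Build a 'docker run' argument list with the CPU cap applied."""
    limit = cpu_limit(total_cores, cap_fraction)
    return ["docker", "run", "--detach", f"--cpus={limit}", image]


# Example: a 32-core server and a hypothetical image name.
print(" ".join(docker_run_args("registry.example/slm-runtime:latest", 32)))
```

On Kubernetes the equivalent knob is the pod's CPU limit under `resources.limits`.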

SLM vs LLM — Implementation Realities

The right LLM strategy for 2026 is “hybrid.” You are not choosing one or the other; you are building a stack that uses both.

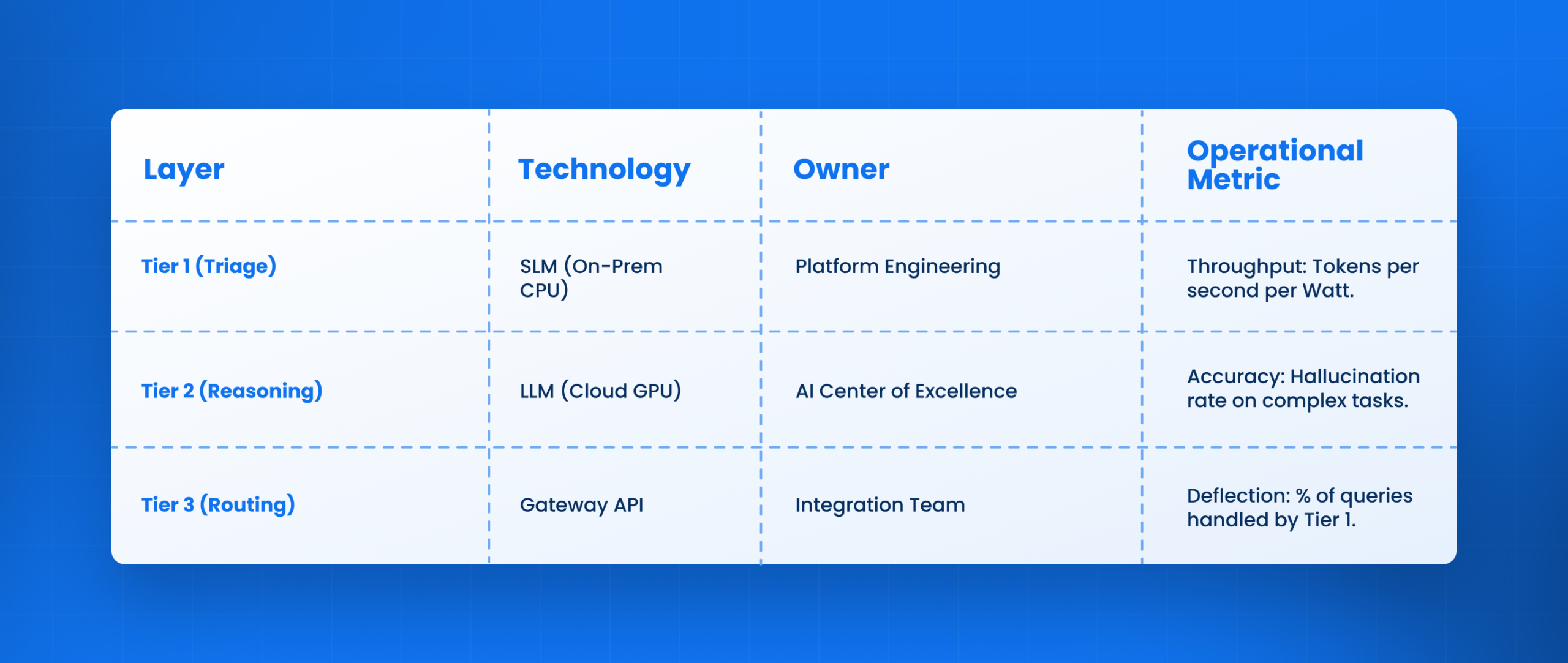

Ownership of the Hybrid Stack

Scale Failure Points:

- Latency Spikes: CPU inference is variable. If 50 users hit the same old server at once, response time can jump from 1 second to 10 seconds. You need aggressive load balancing.

- Cold Starts: Language models (even small ones) take time to load into RAM. You cannot spin these up “on demand”; they must be kept warm (loaded in memory), which consumes electricity even when idle.
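For the latency-spike problem, one simple policy is to send each request to the server with the fewest requests in flight and to refuse new work once every server is at a fixed limit. The sketch below illustrates that policy in memory. It is not thread-safe and the server names are placeholders; a production setup would normally rely on a real load balancer.

```python
from typing import Dict, Iterable, Optional


class LeastLoadedBalancer:
    """Pick the server with the fewest in-flight requests, up to a per-server cap."""

    def __init__(self, servers: Iterable[str], max_in_flight: int) -> None:
        self._in_flight: Dict[str, int] = {name: 0 for name in servers}
        if not self._in_flight:
            raise ValueError("at least one server is required")
        if max_in_flight < 1:
            raise ValueError("max_in_flight must be at least 1")
        self._max = max_in_flight

    def acquire(self) -> Optional[str]:
        """Reserve a slot on the least-loaded server, or None if all are full."""
        server = min(self._in_flight, key=self._in_flight.get)
        if self._in_flight[server] >= self._max:
            return None  # caller should queue or escalate instead of piling on
        self._in_flight[server] += 1
        return server

    def release(self, server: str) -> None:
        """Free a slot once the response has been sent."""
        if self._in_flight[server] > 0:
            self._in_flight[server] -= 1
```

Returning `None` when the fleet is saturated lets the gateway queue the request or overflow it to the cloud tier rather than letting one old server slide from 1 second to 10.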

How Leena AI Operationalizes This

At Leena AI, we act as the operational layer that makes this hybrid SLM/LLM strategy possible without you writing the code. We do not force you to choose between cheap local models and smart cloud models; we orchestrate them.

Our Operational Approach:

- Intelligent Gateway: Our architecture automatically classifies every ticket intent. If it is a routine HR query, we route it to a highly optimized small language model that can run on your private infrastructure.

- Seamless Escalation: If the SLM struggles or lacks confidence, Leena AI automatically escalates the query to a more powerful LLM, ensuring the user never hits a “dead end.”

- Unified Governance: We provide a single dashboard to audit the performance of both model types, giving you visibility into how much money you are saving by deflecting traffic to the smaller models.

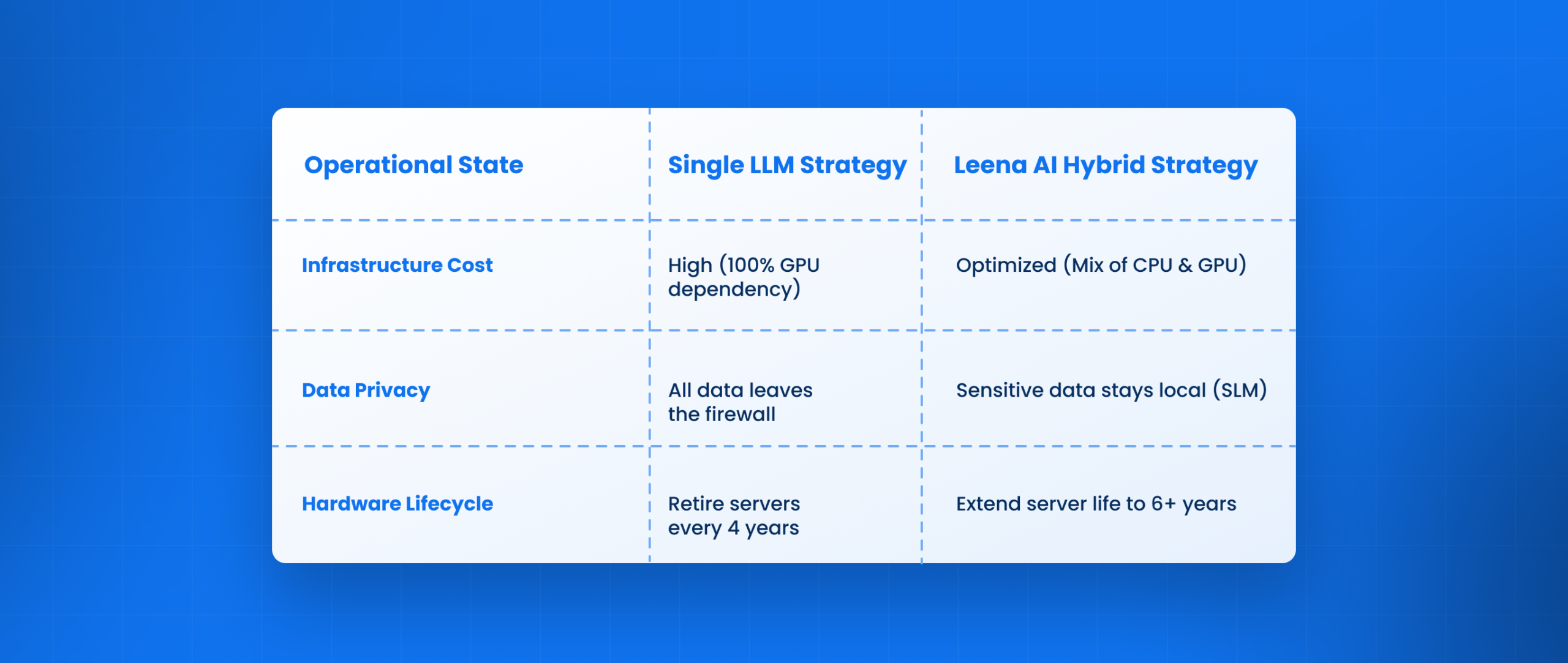

Operational State Before vs. After Leena AI

Conclusion: Executive Guardrails

The “GPU or Bust” mindset is a financial trap. In 2026, the most effective CIOs are those who treat compute as a tiered resource.

Monday Morning Next Steps:

- Inventory Audit: Run a script across the fleet to identify every idle server with at least 64GB of RAM.

- Pilot a “Local Bot”: Deploy an open-source SLM on a CPU server and test it against your top 100 helpdesk FAQs.

- Define the Router: Establish a clear rule set for what data is allowed to go to the cloud LLM versus what must stay on the local SLM.
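The rule set in the last step can start as something as small as the sketch below: a lookup from a data classification label to the model tiers allowed to see that data. The labels and tier names are hypothetical; substitute the categories from your own data classification policy.

```python
from typing import FrozenSet

LOCAL_SLM = "local-slm"
CLOUD_LLM = "cloud-llm"

# Hypothetical labels that are cleared to leave the network perimeter.
CLOUD_ALLOWED_LABELS = frozenset({"public", "internal"})


def allowed_targets(classification: str) -> FrozenSet[str]:
    """Return the model tiers permitted to process data with this label."""
    label = classification.strip().lower()
    if label in CLOUD_ALLOWED_LABELS:
        return frozenset({LOCAL_SLM, CLOUD_LLM})
    # Default deny for the cloud: unknown or sensitive labels stay local.
    return frozenset({LOCAL_SLM})


def may_send_to_cloud(classification: str) -> bool:
    return CLOUD_LLM in allowed_targets(classification)
```

Treating unrecognized labels as local-only means a typo in a classification tag fails safe instead of leaking data to the cloud.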

Stop waiting for hardware delivery. You already have the compute you need; you just need to resize the model to fit the machine.

Frequently Asked Questions

What is the main difference between an SLM and an LLM for the enterprise?

SLMs (Small Language Models) are compact, cost-efficient, and can run on CPUs for specific tasks. LLMs (Large Language Models) are massive, expensive, and require GPUs for complex reasoning and general knowledge.

Can I really run a language model on an old CPU?

Yes. By using “quantization” (reducing data precision), you can run models like Llama-3-8B on standard Intel/AMD servers with acceptable speed (15-20 tokens per second) for internal tools.

How does governing an LLM differ from governing an SLM?

Governing an LLM involves monitoring API costs and data leakage to the cloud. Governing an SLM involves managing Docker containers, versioning files, and monitoring local server heat and memory usage.

When should we use an SLM?

Use SLMs for high-volume, low-complexity tasks like summarizing emails, retrieving policy documents, or basic Q&A where the answer is contained in the prompt.

How do SLMs compare with LLMs on accuracy?

SLMs are less “creative” and have less general world knowledge than LLMs. However, if you give them the right context (RAG), they are often just as accurate as LLMs for specific business queries.

Do SLMs eliminate the need for GPUs?

No. You still need GPUs for the “Teacher” models (LLMs) that handle complex reasoning. SLMs just allow you to offload the “grunt work,” reducing your GPU bill by 60-80%.