Agentic Solutions: Reducing the “Context Tax” with Semantic Caching

The “Billing Spike” Failure Scenario

It is the second week of deploying your new Agentic AI workforce. You have replaced simple chatbots with autonomous agents capable of multi-step reasoning. These agents don’t just answer questions; they plan, query APIs, reason, and execute tasks.

The Finance team is using a “Variance Analysis Agent” to explain budget deviations. On Monday morning, 500 Finance Managers ask slightly different versions of the same question: “Why is the marketing travel budget over by 15%?”

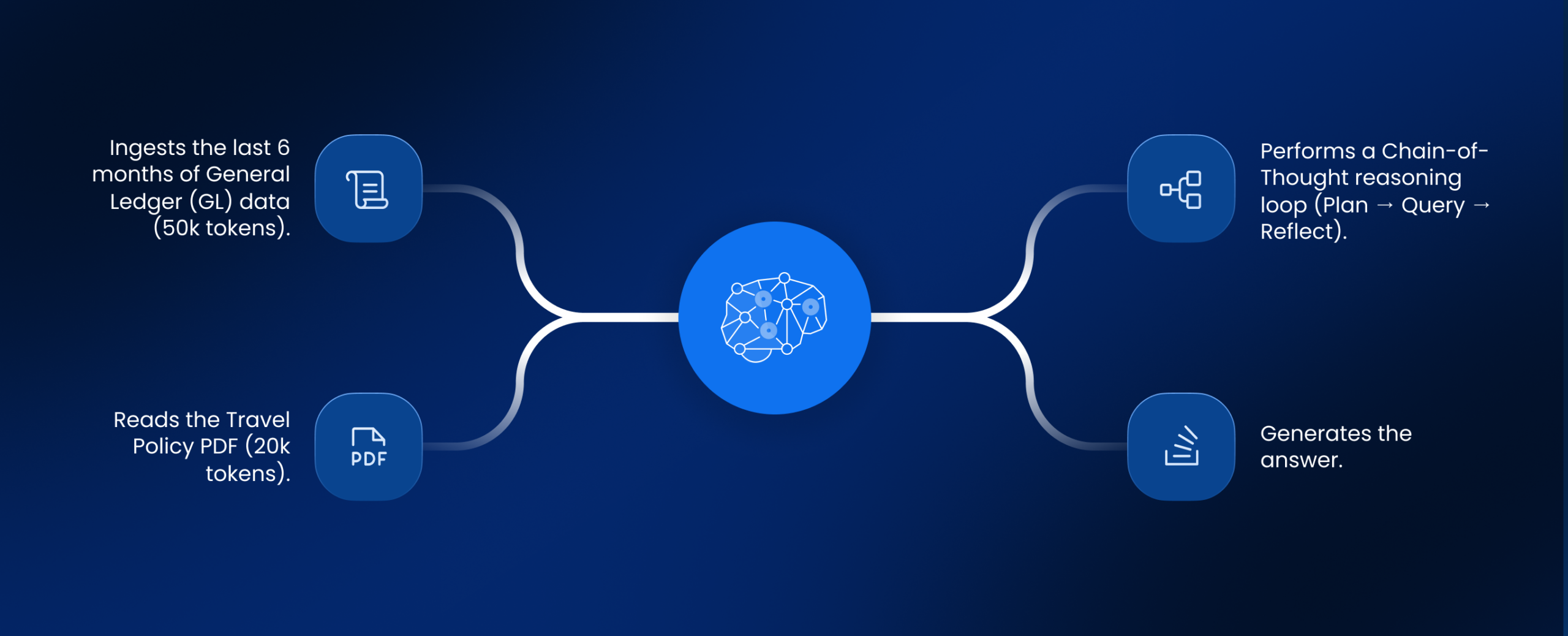

A standard chatbot would do a cheap database lookup. Your agentic solutions, however, are configured to “think.” For every single user, the agent:

- Ingests the last 6 months of General Ledger (GL) data (50k tokens).

- Reads the Travel Policy PDF (20k tokens).

- Performs a Chain-of-Thought reasoning loop (Plan → Query → Reflect).

- Generates the answer.

By noon, you receive an alert. You have burned through $15,000 in inference credits in four hours. The agent re-computed the exact same logic 500 times for 500 different people, treating every request as a novel problem. This is the “Context Tax”: the operational cost of treating every interaction as unique when 80% of enterprise intent is repetitive.

Agentic Solutions — The Architecture of Semantic Caching

To make agentic AI viable at scale, you must implement a “Short-Circuit” layer. We call this Semantic Caching.

Unlike traditional caching (Redis/Memcached), which looks for exact text matches (e.g., “reset password” == “reset password”), semantic caching uses vector embeddings to match the intent of the request. If User A asks “Why is travel over budget?” and User B asks “Explain the variance in T&E,” the system recognizes they are the same question and serves the cached reasoning from User A, bypassing the expensive agent loop entirely.

Operational Component 1: The Vector Cache Layer

You cannot rely on the Large Language Model (LLM) to cache itself. You need a dedicated vector database sitting in front of your agent fleet.

The Workflow:

- Ingest: User submits a prompt.

- Embed: The system converts the prompt into a vector (a long list of numbers representing meaning).

- Search: The vector database scans for similar vectors from the last 24 hours.

- Score: It calculates a “Cosine Similarity” score (0 to 1).

- Decision: compare the score against the similarity threshold.

  - Score ≥ 0.95: Cache Hit. Return the stored answer immediately. (Cost: $0.001)

  - Score < 0.95: Cache Miss. Trigger the full agentic loop. (Cost: $2.50)
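The workflow above can be sketched in a few dozen lines. This is a minimal, in-memory illustration and not a production design: `SemanticCache`, `answer_with_cache`, `FAKE_VECTORS`, and `fake_agent` are hypothetical names, the three-dimensional vectors are hand-written stand-ins for real embeddings, and a real deployment would replace the linear scan with a vector store query.

```python
import math
import time
from dataclasses import dataclass, field


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


@dataclass
class SemanticCache:
    threshold: float = 0.95          # similarity needed for a cache hit
    max_age_seconds: float = 86_400  # only search the last 24 hours
    entries: list = field(default_factory=list)  # (vector, answer, stored_at)

    def lookup(self, vector, now=None):
        """Return the best cached answer at or above the threshold, else None."""
        now = time.time() if now is None else now
        best_score, best_answer = 0.0, None
        for cached_vector, answer, stored_at in self.entries:
            if now - stored_at > self.max_age_seconds:
                continue  # older than the search window
            score = cosine_similarity(vector, cached_vector)
            if score > best_score:
                best_score, best_answer = score, answer
        return best_answer if best_score >= self.threshold else None

    def store(self, vector, answer, now=None):
        self.entries.append((vector, answer, time.time() if now is None else now))


def answer_with_cache(prompt, cache, embed, run_agent):
    """Embed, search, then fall back to the full agent loop on a miss."""
    vector = embed(prompt)
    cached = cache.lookup(vector)
    if cached is not None:
        return cached, "hit"
    answer = run_agent(prompt)
    cache.store(vector, answer)
    return answer, "miss"


# Toy stand-ins (assumptions) so the sketch runs without a model or an agent.
FAKE_VECTORS = {
    "Why is travel over budget?": [0.90, 0.10, 0.05],
    "Explain the variance in T&E": [0.88, 0.12, 0.06],
    "How many vacation days do I have?": [0.05, 0.20, 0.95],
}
agent_calls = []


def fake_agent(prompt):
    agent_calls.append(prompt)
    return f"answer for: {prompt}"


cache = SemanticCache()
first = answer_with_cache("Why is travel over budget?", cache, FAKE_VECTORS.get, fake_agent)
second = answer_with_cache("Explain the variance in T&E", cache, FAKE_VECTORS.get, fake_agent)
third = answer_with_cache("How many vacation days do I have?", cache, FAKE_VECTORS.get, fake_agent)
```

In this toy run the second prompt is served from the first prompt's cached answer, while the unrelated third prompt falls through to the agent, so the agent stand-in is called only twice for three requests.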

Dependencies:

- A low-latency Vector Store (e.g., Pinecone, Milvus, or Redis Vector).

- An embedding model that matches your agent’s domain language.

Failure Points:

- Threshold Drift: Setting the similarity threshold too low (e.g., 0.80) causes the agent to return a “Travel Budget” answer when the user asked about “Training Budget,” because the vectors were mathematically close.
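One way to catch this before production is to score a small, hand-labeled set of prompt pairs and measure how each candidate threshold behaves. The sketch below assumes you already have similarity scores for such pairs; the scores in `LABELED_PAIRS` are invented for illustration, and `hit_rate` and `false_hit_rate` are hypothetical helper names.

```python
def false_hit_rate(scored_pairs, threshold):
    """Share of different-intent pairs that would wrongly be served from cache."""
    different = [score for score, same_intent in scored_pairs if not same_intent]
    if not different:
        return 0.0
    return sum(score >= threshold for score in different) / len(different)


def hit_rate(scored_pairs, threshold):
    """Share of same-intent pairs that would correctly be served from cache."""
    same = [score for score, same_intent in scored_pairs if same_intent]
    if not same:
        return 0.0
    return sum(score >= threshold for score in same) / len(same)


# (similarity score, same intent?) with illustrative values only.
LABELED_PAIRS = [
    (0.98, True),
    (0.97, True),
    (0.96, True),
    (0.93, True),
    (0.91, True),
    (0.86, False),  # e.g. "Travel Budget" vs. "Training Budget"
    (0.82, False),
    (0.55, False),
]

for threshold in (0.80, 0.90, 0.95):
    print(
        f"threshold={threshold:.2f} "
        f"hit_rate={hit_rate(LABELED_PAIRS, threshold):.2f} "
        f"false_hit_rate={false_hit_rate(LABELED_PAIRS, threshold):.2f}"
    )
```

With these made-up scores, a 0.80 threshold serves every repeat question but also two of the three mismatched pairs, while 0.95 removes the false hits at the price of a lower hit rate. The right trade-off depends on your own embedding model and data.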

Agentic Solutions — Managing Cache Invalidation

The hardest part of an agentic AI caching strategy is not storing data; it is knowing when to delete it. In a static world, answers last forever. In the enterprise, data can change from minute to minute.

If your “Inventory Agent” caches that we have 500 units of stock at 9:00 AM, and you sell 400 units at 9:15 AM, the cached answer is now stale, or “poisoned.” It will confidently tell the next user that 500 units are available, leading to a failed order.
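Two common ways to limit this risk are a time-to-live (TTL) on each entry and explicit invalidation when a source system reports a change. The sketch below shows both under simplifying assumptions: `ExpiringCache` is a hypothetical class, entries are keyed by plain strings rather than vectors, and a fake clock stands in for real time so the example is deterministic.

```python
import time


class ExpiringCache:
    """Cache entries expire after a TTL or when their source system changes."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._entries = {}  # key -> (value, expires_at, source)

    def put(self, key, value, ttl_seconds, source):
        self._entries[key] = (value, self._clock() + ttl_seconds, source)

    def get(self, key):
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, expires_at, _source = entry
        if self._clock() >= expires_at:
            del self._entries[key]  # TTL elapsed: treat as a miss
            return None
        return value

    def invalidate_source(self, source):
        """Drop every answer derived from a source system that just changed."""
        stale = [k for k, (_v, _e, s) in self._entries.items() if s == source]
        for key in stale:
            del self._entries[key]
        return len(stale)


# Fake clock (seconds) so the example does not depend on wall time.
now = [0.0]
cache = ExpiringCache(clock=lambda: now[0])

# Volatile data gets a short TTL, stable data a long one (values are assumptions).
cache.put("stock:widget", "500 units in stock", ttl_seconds=3600, source="inventory")
cache.put("holiday:new_year", "Office closed on 1 January", ttl_seconds=86_400, source="hr_calendar")

now[0] = 900  # 15 minutes later a sale changes inventory
removed = cache.invalidate_source("inventory")
stock_answer = cache.get("stock:widget")        # miss: forces a fresh agent run
holiday_answer = cache.get("holiday:new_year")  # unaffected by the inventory event

now[0] = 90_000  # more than 24 hours later
expired_answer = cache.get("holiday:new_year")  # miss: TTL elapsed
```

The event-driven path handles the 9:15 AM sale immediately, while the TTL acts as a backstop for changes that never emit an event.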

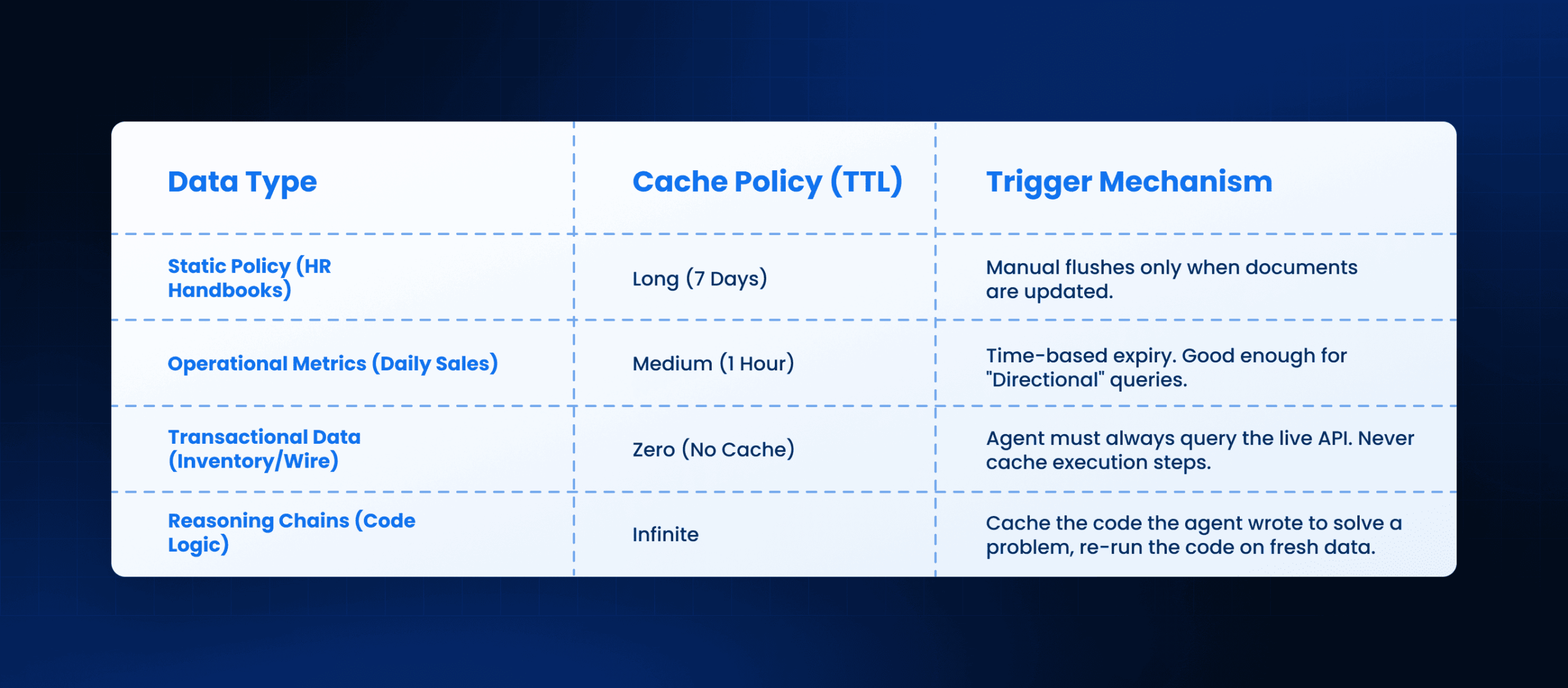

Strategies for Invalidation:

Agentic Solutions — The “Reasoning” vs. “Data” Split

A sophisticated agentic AI tool separates the logic from the result.

The “Code-Cache” Approach:

When an agent solves a complex problem (e.g., “Write a SQL query to find top sales reps in Q3”), it performs two expensive steps:

- Reasoning: figuring out the schema and writing the SQL.

- Execution: running the SQL against the database.

Optimization:

You should cache Step 1, but re-run Step 2.

- User A asks: “Top reps Q3.”

- Agent: Thinks hard and writes SQL (saves the SQL to the cache), then executes it.

- User B asks: “Who sold the most in Q3?”

- System: Matches intent. Retrieves cached SQL. Executes SQL immediately.

This reduces the “thinking” cost (the most expensive part of agentic AI applications) while ensuring the data is 100% fresh.
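The split can be illustrated with an in-memory SQLite database. This is a sketch under stated assumptions: `write_sql` is a hypothetical stand-in for the expensive reasoning step, the `sales` table and its rows are invented, and the `intent_key` argument is assumed to come from the semantic match described earlier.

```python
import sqlite3

sql_cache = {}        # intent key -> cached SQL text (the "reasoning")
reasoning_calls = []  # records how often the expensive step runs


def write_sql(question):
    """Stand-in for the reasoning step; a real agent would call an LLM here."""
    reasoning_calls.append(question)
    return (
        "SELECT rep, SUM(amount) AS total FROM sales "
        "WHERE quarter = 'Q3' GROUP BY rep ORDER BY total DESC LIMIT 1"
    )


def answer(question, intent_key, conn):
    """Reuse cached SQL when the intent is known, but always re-run the query."""
    sql = sql_cache.get(intent_key)
    if sql is None:
        sql = write_sql(question)
        sql_cache[intent_key] = sql
    return conn.execute(sql).fetchall()  # executed against live data every time


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (rep TEXT, quarter TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [("Ana", "Q3", 100.0), ("Ben", "Q3", 80.0)],
)

first = answer("Top reps Q3", "top_reps_q3", conn)

# New data arrives between the two questions.
conn.execute("INSERT INTO sales VALUES ('Ben', 'Q3', 50.0)")

second = answer("Who sold the most in Q3?", "top_reps_q3", conn)
```

The reasoning stand-in runs once, yet the second user sees the updated leader because only the SQL text was cached, not its result.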

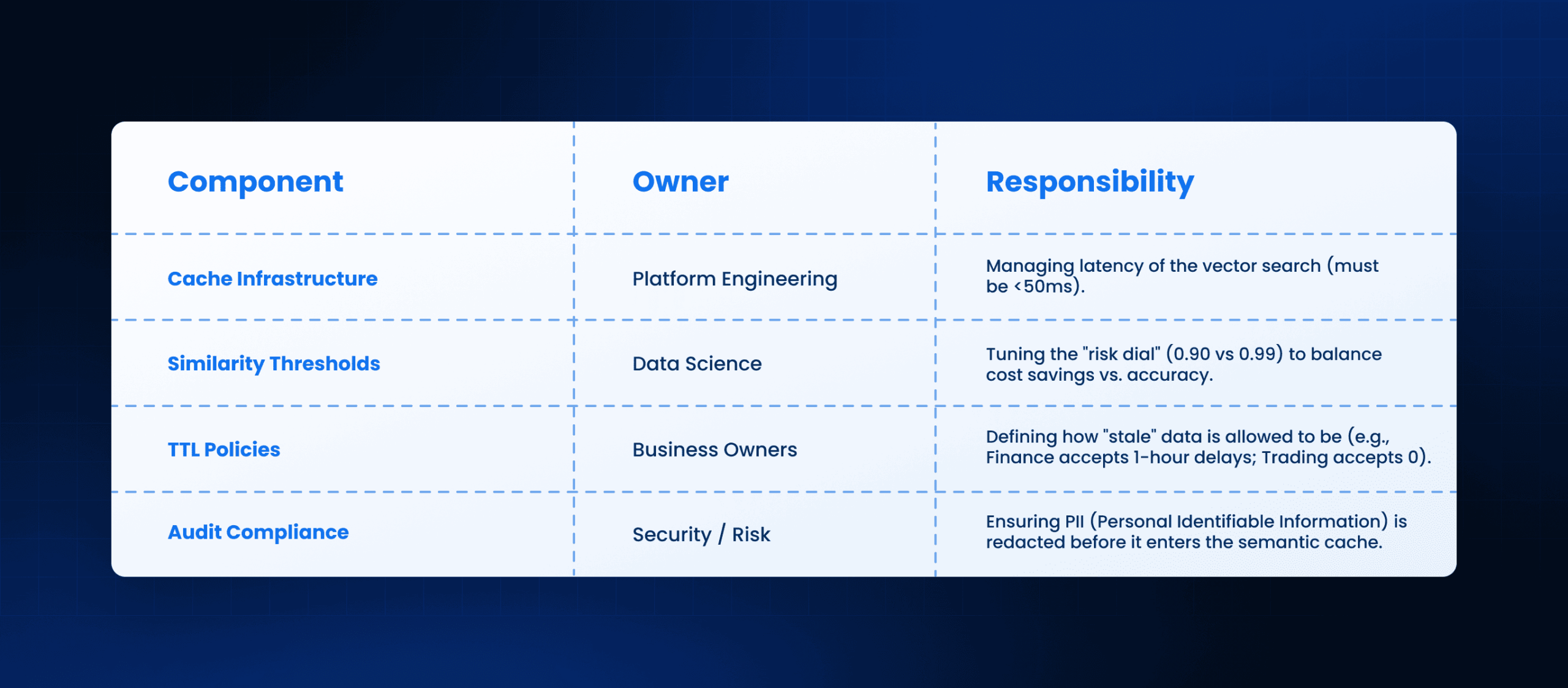

Agentic Solutions — Scaling Limits & Ownership

Who owns the cache? In a siloed enterprise, this becomes a governance war zone.

Ownership & Governance

How Leena AI Operationalizes This

At Leena AI, we build agentic solutions that are financially viable at enterprise scale. We do not just give you an LLM; we give you the orchestration layer that manages cost.

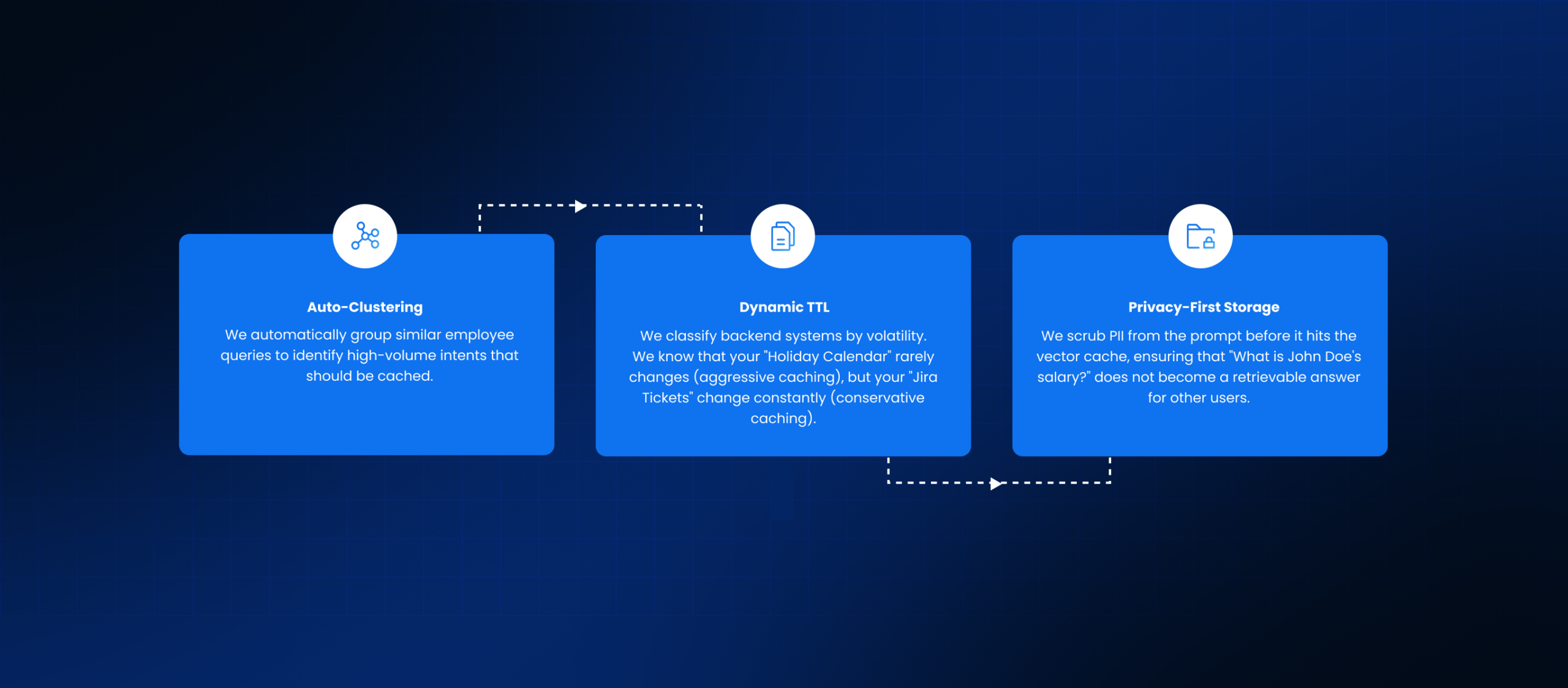

Our Semantic Caching Engine:

- Auto-Clustering: We automatically group similar employee queries to identify high-volume intents that should be cached.

- Dynamic TTL: We classify backend systems by volatility. We know that your “Holiday Calendar” rarely changes (aggressive caching), but your “Jira Tickets” change constantly (conservative caching).

- Privacy-First Storage: We scrub PII from the prompt before it hits the vector cache, ensuring that “What is John Doe’s salary?” does not become a retrievable answer for other users.

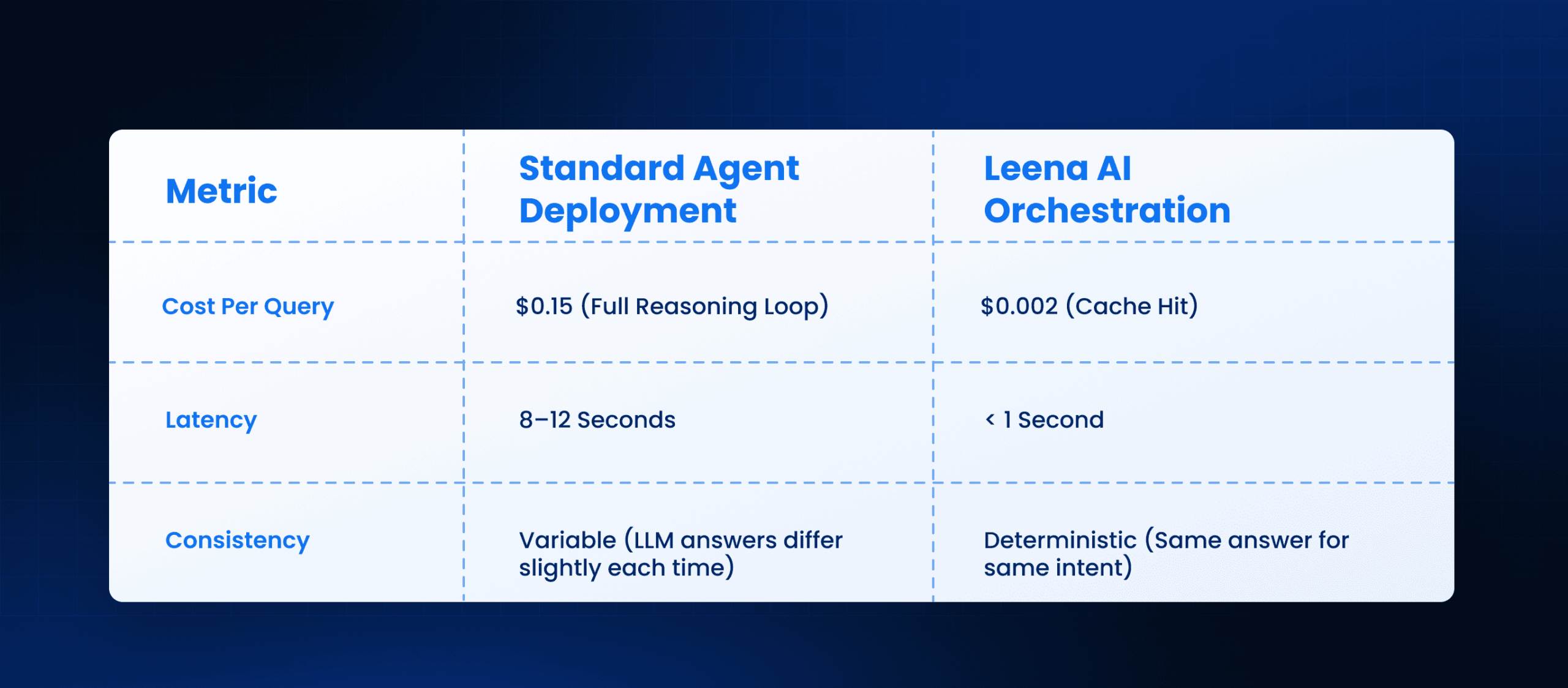

Operational State Comparison

Conclusion: Execution Outcomes

The difference between a science project and a production agentic solution is unit economics. If your agents cost $5.00 to answer a $0.50 question, you will be forced to shut them down.

Monday Morning Next Steps:

- Audit your Query Logs: Identify the top 10 questions being asked to your agents.

- Calculate the Waste: Multiply the volume of those top 10 questions by the token cost of your agent loop. That is your potential savings.

- Implement “Cache-First” Logic: Configure your orchestration layer to check a vector store before it ever calls the LLM.
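The first two steps can be roughed out with a short script. This sketch assumes your query log has already been reduced to one intent label per request; `potential_savings` is a hypothetical helper, the sample log is invented, and the per-request costs reuse the illustrative $2.50 and $0.001 figures from earlier in this article. It counts the first request for each intent as a full agent run, since that run is what populates the cache.

```python
from collections import Counter


def potential_savings(intent_log, cost_per_agent_run, cost_per_cache_hit, top_n=10):
    """Estimate savings if repeats of the top-N intents were served from cache."""
    savings = 0.0
    for _intent, volume in Counter(intent_log).most_common(top_n):
        repeats = volume - 1  # the first request still pays for the agent loop
        savings += repeats * (cost_per_agent_run - cost_per_cache_hit)
    return savings


# Invented sample log: one intent label per request.
sample_log = ["travel_variance"] * 500 + ["reset_password"] * 20 + ["one_off_question"]

top_intents = Counter(sample_log).most_common(10)
estimate = potential_savings(sample_log, cost_per_agent_run=2.50, cost_per_cache_hit=0.001)
print(top_intents)
print(f"Estimated savings: ${estimate:,.2f}")
```

Treat the result as an upper bound: it assumes every repeat clears the similarity threshold and that no cached answer has to be invalidated early.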

Stop paying your agents to think about problems they have already solved.

Frequently Asked Questions

What is the difference between caching and semantic caching?

Standard caching requires an exact text match. Semantic caching understands that “I can’t log in” and “Reset my password” are the same intent, allowing it to reuse the answer even if the phrasing differs.

Does semantic caching reduce the accuracy of agentic AI tools?

It can, if the similarity threshold is set too low. If the system assumes two different questions are the same, it will return a wrong answer. This is why tuning the threshold (e.g., keeping it above 0.95) is critical.

Can we cache the agent's actions (e.g. creating a ticket)?

No. Never cache side-effects. If an agent creates a Jira ticket for User A, you cannot serve that “Ticket Created” response to User B. You must always re-execute the action layer.
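A minimal sketch of that rule, assuming each request has already been classified as a read or an action: `route`, `create_ticket`, and `read_policy` are hypothetical names, and the plain dictionary stands in for the semantic cache.

```python
def route(kind, intent_key, cache, execute):
    """Serve cached results for read-only requests; always execute actions."""
    if kind != "read":
        return execute()  # side effect: never read from or write to the cache
    if intent_key not in cache:
        cache[intent_key] = execute()
    return cache[intent_key]


tickets = []


def create_ticket():
    tickets.append(f"TICKET-{len(tickets) + 1}")
    return f"Created {tickets[-1]}"


policy_reads = []


def read_policy():
    policy_reads.append(1)
    return "Economy class for flights under 6 hours"


cache = {}
user_a = route("action", "create_ticket", cache, create_ticket)
user_b = route("action", "create_ticket", cache, create_ticket)
policy_1 = route("read", "travel_policy", cache, read_policy)
policy_2 = route("read", "travel_policy", cache, read_policy)
```

Each user gets their own ticket, while the read-only policy lookup runs once and is reused.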

How much money does this actually save?

In high-volume internal helpdesks, we typically see a 40–60% reduction in token costs. The “Long Tail” of unique queries still costs money, but the “Head” of repetitive queries becomes nearly free.

What is the Context Tax in agentic AI?

It is the cost of re-sending massive amounts of background data (documents, history, logs) to the model for every single query. It is the primary reason why agentic AI deployments often fail to reach production ROI.

Does Leena AI handle the vector database for us?

Yes. Our platform includes the managed vector infrastructure required to handle semantic caching, so you do not need to spin up separate Pinecone or Milvus instances.