AI in Manufacturing: Risks in Capturing Tribal Knowledge

The “Silver Tsunami” Failure Scenario

It is 2:00 AM on a Tuesday in a Tier 1 automotive stamping plant. The main hydraulic press, a critical asset installed in 1998, suffers a catastrophic pressure fault. The standard operating procedure (SOP) says to replace the main valve. The night shift maintenance team replaces the valve. The fault persists.

Production is halted. The cost of downtime is $22,000 per minute.

The Plant Manager calls the only person who knows how to fix this specific issue: a Senior Master Technician named Jim. Jim’s phone goes to voicemail. Jim retired three weeks ago.

What the SOP didn’t say, but Jim knew, was that this specific machine has a sensor drift issue that mimics a valve failure. The fix is a 10-degree calibration tweak, not a part replacement. Jim knew this because he had fixed it five years ago and “just remembered it.” He never wrote it down in the Computerized Maintenance Management System (CMMS) because the interface was clunky.

This is the failure of the “Silver Tsunami.” Critical intellectual property walks out the door every day. AI in manufacturing is one of the few scalable tools capable of capturing this unstructured, implicit knowledge, but deploying it introduces significant operational risks that technology leaders must manage.

AI in Manufacturing — The Data Ingestion Risk

The primary challenge in using artificial intelligence in manufacturing to capture tribal knowledge is the quality of the source data. You cannot train a model on empty logs.

Risk Description:

The “Empty Log” Paradox. Senior technicians are often the worst at documentation. They fix the problem quickly and close the ticket with a single word: “Fixed.” If you train an AI on historical maintenance logs, it learns nothing about the root cause or the method of repair.

Why This Happens at Scale:

- Speed Incentives: Technicians are incentivized on “Time to Repair,” not “Time to Document.”

- Interface Friction: Legacy maintenance software is difficult to use on mobile devices in greasy shop-floor environments.

- Tacit Knowledge: Technicians rely on sensory cues (sound, vibration, smell) that are difficult to articulate in text.

Early Warning Signals:

- Greater than 50% of closed maintenance tickets have fewer than 10 words in the “Resolution” field.

- AI for manufacturing pilots yield generic answers like “Check the manual” rather than specific diagnostic steps.
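The first warning signal can be measured before any model is trained. The sketch below assumes tickets can be exported from the CMMS with a status and a free-text resolution field; the `Ticket` class, its field names, and the sample records are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Ticket:
    ticket_id: str
    status: str      # hypothetical status values: "closed" or "open"
    resolution: str  # free-text "Resolution" field exported from the CMMS


def sparse_resolution_rate(tickets: list[Ticket], min_words: int = 10) -> float:
    """Share of closed tickets whose Resolution field has fewer than min_words words."""
    closed = [t for t in tickets if t.status == "closed"]
    if not closed:
        return 0.0
    sparse = [t for t in closed if len(t.resolution.split()) < min_words]
    return len(sparse) / len(closed)


def empty_log_warning(tickets: list[Ticket], threshold: float = 0.5) -> bool:
    """True when more than `threshold` of closed tickets are sparse."""
    return sparse_resolution_rate(tickets) > threshold


tickets = [
    Ticket("T-1", "closed", "Fixed."),
    Ticket("T-2", "closed", "Replaced valve."),
    Ticket("T-3", "closed", "Pressure fault traced to sensor drift on transducer 2; "
                            "recalibrated offset and verified against test gauge."),
    Ticket("T-4", "open", ""),
]
print(f"{sparse_resolution_rate(tickets):.0%}")  # 67%
print(empty_log_warning(tickets))                # True
```

Open tickets are excluded on purpose: only closed tickets represent knowledge that could have been captured and was not.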

Mitigation Options:

- Voice-to-Text Capture: Equip technicians with wearable microphones to dictate notes while their hands are busy.

- Video Capture: Require a 30-second video clip of the repair for complex tickets.

- Interview Bots: Use an AI agent to proactively call technicians after a ticket is closed to ask specific debriefing questions.
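An interview bot does not need to be elaborate to be useful. A minimal sketch, assuming the CMMS can trigger a hook when a ticket closes: if the resolution note is sparse, the bot queues debriefing questions for the technician, including one about sensory cues. The question list, the 10-word threshold, and the `plan_debrief` helper are illustrative assumptions, and the delivery channel (phone call, chat, or voice prompt) is left out.

```python
from dataclasses import dataclass

# Hypothetical debrief prompts; a real deployment would tailor these per asset class.
DEBRIEF_QUESTIONS = [
    "What symptom did you observe first?",
    "What did you rule out, and how?",
    "What was the root cause?",
    "What exact steps fixed it (settings, values, parts)?",
    "Did any sound, vibration, or smell tip you off?",
]


@dataclass
class ClosedTicket:
    ticket_id: str
    asset: str
    technician: str
    resolution: str


def plan_debrief(ticket: ClosedTicket, min_words: int = 10) -> list[str]:
    """Return debrief prompts for a sparse ticket, or [] if it is already well documented."""
    if len(ticket.resolution.split()) >= min_words:
        return []
    header = f"Ticket {ticket.ticket_id} on {ticket.asset}: you noted '{ticket.resolution}'."
    return [header] + DEBRIEF_QUESTIONS


for line in plan_debrief(ClosedTicket("T-1", "Press 4", "Jim", "Fixed.")):
    print(line)
```

Skipping well-documented tickets keeps the bot from becoming one more source of interface friction.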

AI in Manufacturing — Hallucination in Safety-Critical Contexts

When AI is applied in industrial automation, the cost of an error is measured in physical safety, not just bad text.

Risk Description:

Model Hallucination. A generative model fills gaps in its knowledge with plausible-sounding but factually incorrect instructions. For example, it might suggest bypassing a safety interlock to reset a machine because it saw that pattern in a different, non-safety-critical dataset.

Why This Happens at Scale:

- Data Sparsity: Unique, legacy machines have very little training data. The model over-generalizes from generic machinery data.

- Lack of Context: The model does not understand physics or safety regulations; it only models the statistical likelihood of word sequences.

Early Warning Signals:

- The AI suggests procedures that violate OSHA Lockout/Tagout (LOTO) protocols.

- Technicians report that the AI is “making things up” for older machine models.
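Some LOTO violations can be caught mechanically before an answer reaches a technician. The sketch below is a crude keyword screen, not a substitute for review by a Master Technician or the EHS team; the red-flag phrases and labels are hypothetical examples, and a real list would come from your own safety procedures.

```python
import re

# Hypothetical red-flag patterns (label -> regex); source a real list from EHS.
RED_FLAGS = {
    "bypasses an interlock": r"\bbypass\w*\b.*\binterlock",
    "uses a jumper": r"\bjumper\w*\b",
    "defeats a guard or interlock": r"\bdefeat\w*\b.*\b(guard|interlock)",
    "works on running or energized equipment": r"\bwhile (the machine is )?(running|energized)\b",
}
# Any mention of lockout/tagout or de-energizing counts as a LOTO instruction here.
LOTO_TERMS = re.compile(r"\b(lockout|tagout|loto|de-?energi[sz]\w*)\b", re.IGNORECASE)


def safety_flags(answer: str) -> list[str]:
    """Return reasons an AI answer should be held for human safety review."""
    reasons = [label for label, pattern in RED_FLAGS.items()
               if re.search(pattern, answer, re.IGNORECASE)]
    if reasons and not LOTO_TERMS.search(answer):
        reasons.append("hazardous step with no lockout/tagout instruction")
    return reasons


print(safety_flags("Bypass the door interlock to reset the press."))
```

A non-empty result should route the answer to human review rather than silently drop it, so the flag rate itself becomes an early warning signal.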

Mitigation Options:

- RAG (Retrieval-Augmented Generation): Strict grounding where the AI must cite a specific page in the OEM manual or a verified past ticket for every claim.

- Human-in-the-Loop Verification: A “Master Technician” must review and digitally sign off on AI-generated troubleshooting guides before they are released to junior staff.
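The grounding rule can be expressed as a gate in front of generation: retrieve only from verified sources, cite every source, and refuse when nothing matches. This is a minimal sketch under stated assumptions: simple keyword overlap stands in for the vector search a production RAG system would use, the `Source` fields and the 0.3 threshold are hypothetical, and the LLM synthesis step is replaced by quoting the retrieved text.

```python
import re
from dataclasses import dataclass


@dataclass
class Source:
    ref: str        # citation shown to the user, e.g. "Ticket T-881" (hypothetical)
    text: str
    verified: bool  # True only after Master Technician sign-off


def _tokens(s: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", s.lower()))


def retrieve(question: str, corpus: list[Source], min_overlap: float = 0.3) -> list[Source]:
    """Keyword-overlap retrieval over verified sources only (stand-in for vector search)."""
    q = _tokens(question)
    scored = []
    for src in corpus:
        if not src.verified:
            continue  # unverified material is never eligible as grounding
        overlap = len(q & _tokens(src.text)) / max(len(q), 1)
        if overlap >= min_overlap:
            scored.append((overlap, src))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [src for _, src in scored]


def grounded_answer(question: str, corpus: list[Source]) -> str:
    hits = retrieve(question, corpus)
    if not hits:
        return "I do not know. No verified source covers this question."
    # A real system would have an LLM synthesize from `hits`; here we quote with citations.
    return "\n".join(f"{src.text} [{src.ref}]" for src in hits[:3])


corpus = [
    Source("Ticket T-881", "Press 4 pressure fault with new valve: recalibrate sensor drift offset", True),
    Source("Forum post", "Bypass interlock to clear press pressure fault", False),
]
print(grounded_answer("Press 4 pressure fault persists after valve replacement", corpus))
```

Filtering on `verified` before scoring, rather than after, means an unreviewed post can never outrank a signed-off ticket.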

AI in Manufacturing — Risk & Ownership Map

Deploying AI for manufacturing knowledge transfer is a cross-functional responsibility. It fails when IT tries to own it without Operations.

| Risk Scenario | Technical Trigger | Business Owner | Operational Impact |
|---|---|---|---|
| “Brain Drain” Acceleration | Technicians refuse to share knowledge, fearing AI will replace them. | HR & Plant Leadership | High. Senior staff hoard knowledge; AI learns nothing; reliance on retirees increases. |
| Incorrect Safety Advice | AI hallucinates a repair step that ignores high-voltage warnings. | EHS (Safety) Director | Critical. Risk of electrocution or fire; immense liability; shutdown of AI program. |
| Data Poisoning | Inaccurate logs from junior staff dilute the high-quality insights from experts. | Chief Data Officer | Medium. The model becomes average, losing the “expert” edge needed for tough problems. |
| Integration Failure | AI cannot access the siloed data in the 20-year-old on-premise CMMS. | CIO / CTO | High. The AI has no historical memory and functions only as a generic chatbot. |
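The ownership map can also be kept as data, so that a monitoring job routes each detected trigger to the accountable owner in order of severity. The risk keys and the `escalation_queue` helper below are hypothetical; the owners and impact levels are taken from the table.

```python
from enum import IntEnum


class Impact(IntEnum):
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


# Risk & Ownership Map as data: risk key -> (business owner, operational impact).
RISK_OWNERS = {
    "brain_drain": ("HR & Plant Leadership", Impact.HIGH),
    "incorrect_safety_advice": ("EHS (Safety) Director", Impact.CRITICAL),
    "data_poisoning": ("Chief Data Officer", Impact.MEDIUM),
    "integration_failure": ("CIO / CTO", Impact.HIGH),
}


def escalation_queue(detected: list[str]) -> list[tuple[str, str, str]]:
    """Order detected risks by impact (most severe first) and attach the accountable owner."""
    rows = [(risk, *RISK_OWNERS[risk]) for risk in detected]
    rows.sort(key=lambda row: row[2], reverse=True)
    return [(risk, owner, impact.name) for risk, owner, impact in rows]


for row in escalation_queue(["data_poisoning", "incorrect_safety_advice"]):
    print(row)
```

Keeping the owner next to the trigger makes it harder for IT to end up holding a risk that belongs to Operations or EHS.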

AI in Manufacturing — Governance & Validation

To safely leverage artificial intelligence in manufacturing, you must establish a governance framework that validates knowledge before it becomes standard operating procedure.

The “Trust but Verify” Protocol:

- Ingest: Capture voice notes, logs, and video.

- Transcribe & Structure: Convert unstructured data into a structured problem-solution format.

- Validate: A Subject Matter Expert (SME) reviews the extracted logic.

- Publish: The insight is added to the “Golden Knowledge Graph.”
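The four steps of the protocol behave like a one-way state machine in which nothing reaches the published store without an SME signature. A minimal sketch, assuming a plain Python list stands in for the “Golden Knowledge Graph” and that an LLM proposes the structured problem and solution fields; the class and method names are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Stage(Enum):
    INGESTED = "ingested"
    STRUCTURED = "structured"
    VALIDATED = "validated"
    PUBLISHED = "published"


@dataclass
class Insight:
    raw: str                           # voice-note transcript, log text, or video summary
    problem: str = ""
    solution: str = ""
    stage: Stage = Stage.INGESTED
    approved_by: Optional[str] = None  # SME who signed off

    def structure(self, problem: str, solution: str) -> None:
        # Transcribe & Structure: in practice an LLM would propose these fields.
        self.problem, self.solution = problem, solution
        self.stage = Stage.STRUCTURED

    def validate(self, sme: str) -> None:
        # Validate: an SME reviews the extracted logic and signs off.
        if self.stage is not Stage.STRUCTURED:
            raise ValueError("only structured insights can be validated")
        self.approved_by = sme
        self.stage = Stage.VALIDATED


def publish(insight: Insight, knowledge_base: list[Insight]) -> None:
    """Publish: add to the knowledge store only after SME sign-off."""
    if insight.stage is not Stage.VALIDATED or not insight.approved_by:
        raise PermissionError("insight has no SME sign-off")
    insight.stage = Stage.PUBLISHED
    knowledge_base.append(insight)
```

Raising an error instead of silently skipping unvalidated insights makes a missing signature visible in the pipeline logs.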

AI in Industrial Automation — Liability & Insurance

If an AI tool in an industrial automation setting recommends a repair that causes damage, who is liable: the vendor, the enterprise, or the technician who followed the advice?

- Guardrail: Clear policy stating that AI advice is “decision support,” not “instruction.” The human technician remains the final authority and responsible party.

Automation in Manufacturing — Cultural Resistance

The biggest barrier to automation in manufacturing knowledge capture is not technical; it is psychological. Senior workers often view knowledge hoarding as job security.

Strategy: Reframe the initiative. Do not call it “automating knowledge.” Call it “Legacy Preservation.” Incentivize mentorship. Pay bonuses for every “Verified Insight” a senior technician contributes to the system.

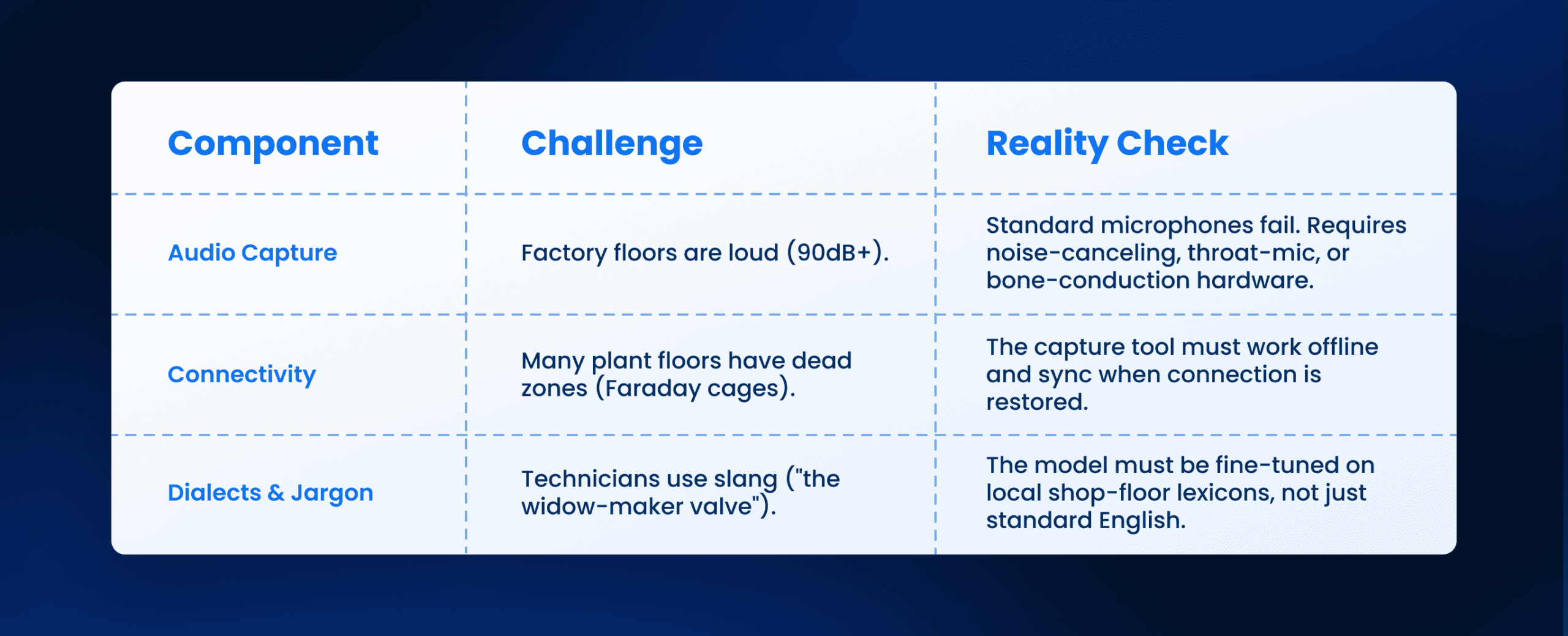

AI in Manufacturing — Implementation Realities

Implementation of AI in manufacturing for knowledge transfer is rarely a “plug and play” exercise. It requires dealing with messy, real-world constraints.

How Leena AI Designs for Governance

At Leena AI, we approach the problem of AI in manufacturing knowledge transfer with a “Safety First” architecture. We do not generate answers from a black box; we retrieve and synthesize verified data.

Our Operational Principles:

- Source Attribution: Every output generated by the system includes a direct link to the source log, manual, or voice note.

- Role-Based Access: Information regarding high-voltage or hazardous machinery is only accessible to technicians with the correct certification level stored in the HR system.

- Feedback Loops: We embed a simple “Thumbs Up / Thumbs Down” mechanism for technicians to rate the accuracy of an answer, continuously tuning the relevance engine.
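Role-based access and feedback loops can both be sketched in a few lines. This is an illustrative sketch, not Leena AI's implementation: it assumes each document carries a hypothetical numeric `required_cert` level and that the technician's certification level has already been looked up from the HR system. The smoothed approval score is one common way to turn thumbs-up/down votes into a ranking signal.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Doc:
    doc_id: str
    text: str
    required_cert: int  # hypothetical scale: 0 = general, higher = more hazardous


def visible_docs(docs: list[Doc], tech_cert_level: int) -> list[Doc]:
    """Role-based filter: hide documents above the technician's certification level."""
    return [d for d in docs if d.required_cert <= tech_cert_level]


class FeedbackLog:
    """Tallies thumbs-up/down votes per source document."""

    def __init__(self) -> None:
        self.votes: dict[str, list[int]] = defaultdict(lambda: [0, 0])  # [up, down]

    def record(self, doc_id: str, thumbs_up: bool) -> None:
        self.votes[doc_id][0 if thumbs_up else 1] += 1

    def score(self, doc_id: str) -> float:
        """Laplace-smoothed approval rate; unrated documents start at 0.5."""
        up, down = self.votes.get(doc_id, [0, 0])
        return (up + 1) / (up + down + 2)
```

A retrieval layer could multiply each document's relevance score by its approval score, so that sources technicians repeatedly reject sink in the ranking without being deleted outright.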

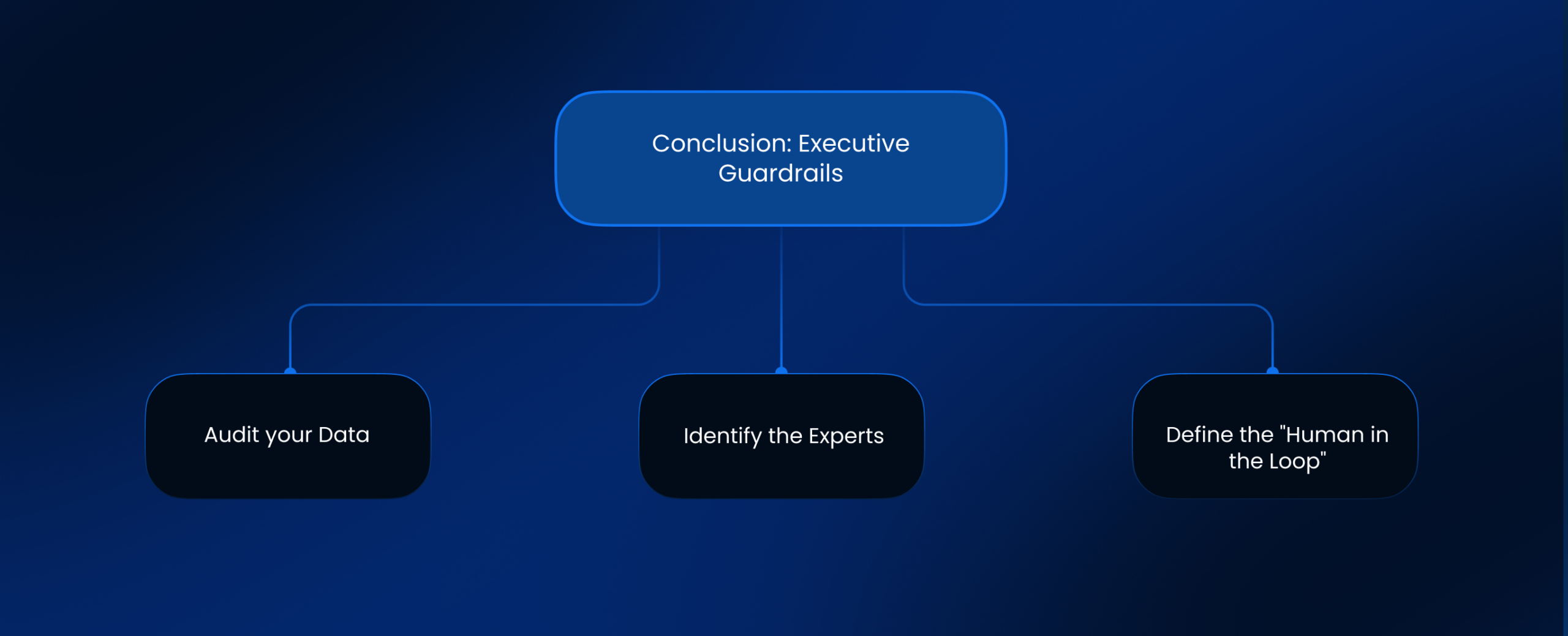

Conclusion: Executive Guardrails

The loss of tribal knowledge is an existential risk for US manufacturing. AI in manufacturing offers a solution, but it must be managed with rigorous discipline.

Monday Morning Decision Checklist:

- Audit your Data: Do you have logs worth training on, or are they empty?

- Identify the Experts: Who are the top 5 people whose retirement would cripple operations? Start capturing their knowledge today.

- Define the “Human in the Loop”: Never allow an AI agent to publish a safety procedure without a human signature.

Deploy AI for manufacturing not to replace your experts, but to immortalize their expertise before they walk out the door.

Frequently Asked Questions

How do we prevent the AI from giving dangerous advice?

Strict “Grounding” is required. The AI in manufacturing system should be configured to only answer based on uploaded manuals and verified logs. If the answer is not in the data, it must answer “I do not know,” rather than guessing.

Can AI really understand messy technician notes?

Yes. Modern Large Language Models (LLMs) are exceptionally good at deciphering typos, slang, and shorthand, provided they are fine-tuned on a sample of your specific industry data.

Will senior technicians refuse to use this?

Resistance is common. Success depends on UX. If the tool is easier to use than the old keyboard-based logging system (e.g., voice-based), adoption rates increase.

How do we handle different languages on the shop floor?

Many AI tools for manufacturing support real-time translation. A Spanish-speaking technician can dictate a note in Spanish, and a supervisor can then query it in English.

Is this legal from a labor union perspective?

You must consult with union representatives. Typically, if the tool is positioned as “safety and training support” rather than “performance monitoring,” it is accepted. Transparency is key.

What is the ROI timeline?

The ROI is often realized through the avoidance of a single major outage. If the system prevents one 4-hour shutdown of a critical line, that alone can cover the annual implementation cost.
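Using the downtime figure from the opening scenario ($22,000 per minute), the arithmetic behind this claim is short: 4 hours × 60 minutes × $22,000. Downtime costs vary widely by plant and line, so treat the constant below as a placeholder for your own figure.

```python
COST_PER_MINUTE = 22_000  # USD, from the stamping-plant scenario; replace with your own
OUTAGE_HOURS = 4


def outage_cost(hours: float, cost_per_minute: float = COST_PER_MINUTE) -> float:
    """Direct downtime cost of a single outage of the given length."""
    return hours * 60 * cost_per_minute


avoided = outage_cost(OUTAGE_HOURS)
print(f"${avoided:,.0f}")  # $5,280,000
```

Under these assumptions, one avoided 4-hour outage covers any annual implementation cost up to roughly $5.3 million, before counting scrap, overtime, or missed shipments.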